The content below is taken from the original ( A look back at 10 years of CES), to continue reading please visit the site. Remember to respect the Author & Copyright.

So, here we are on the eve of CES 2020, the supersized buffet of an annual consumer electronics show in Las Vegas, where we not only get a sneak peek of what to expect from tech companies this year, but also take the pulse of how people are responding to what’s out there.

CES is all about “the future,” and we’ll be here this week covering all the big stories and themes. But what about the past? In the spirit of 2020 hindsight, here are some of the most notable headlines and trends of the last 10 years of CES.

Take a look, and let us know in the comments below what you think have been the biggest themes coming out of the event.

CES 2010

Palm’s ‘turnaround’. The mobile upstart launched its first smartphones, the Pre and Pixi, in 2009, and found a hardcore group of fans that loved the look of the devices and all the features that set them apart from Android and iOS. 2010 was about gaining momentum. Palm CEO Jon Rubinstein (one of the early pioneers at Apple making the iPod) made some waves onstage by claiming that he had never used an iPhone. It was bullish talk that helped cement the company’s independent image. Palm also saw its stock rise by 10% after it announced at a press conference that it would sell its devices through a lucrative deal with Verizon Wireless (which now owns TechCrunch). (All proved to be short-lived, and many lamented that Palm was probably too ahead of its time.)

Meanwhile, the battle between iOS and Android raged on. In 2010 the story was about how AT&T was finally adding its first Android-based smartphones to its lineup (remember that it was the exclusive carrier of the iPhone as its first foray into the new generation of touchscreen smartphones). Motorola “Backflip” smartphones, and tablets and smartbooks from Dell, were among the other mobile devices announced that year.

‘Natural User Interface.’ Here’s a prescient article by then-CEO of Microsoft Steve Ballmer, written on the heels of CES, about the new ways we would be interacting with devices in the years ahead, covering touch, gesture and voice, and very dependent on the cloud. He gave a specific nod to Project Natal, which Microsoft showed off at CES and which eventually became Microsoft’s Kinect gesture technology. Ballmer was absolutely on the money, although I’d be interested to know if he suspected just how big of a role his neighbor Amazon would play in that new era.

Other themes in the year included the usual boost in TV technology, this time around 3D; and some early signals of a smart car future with news from the likes of Nvidia and Ford.

CES 2011

RealNetworks and cloud-based music. This was absolutely the direction music would be going. RealNetworks would not ultimately be the top dog in this game, but back in 2011 it was the one leading the charge with a service called Unifi, lauded at the time for being first to market before Apple and Google — and Spotify — in presenting a way to merge what you buy online with what you might already own in terms of digital files. Alas, being an early mover does not always pay off, a theme of its own. Real Unifi quietly died a death and its URL is particularly iffy now.

4G. 2011 was also the year of LTE and 4G announcements from many carriers, from T-Mobile announcing sales of 900,000 4G handsets, to HTC unveiling its first 4G device.

The year of many phones (and specifically phone brands) that did not stand the test of time. Sister site Engadget (a monster when it comes to comprehensive CES coverage) highlighted its list of the best tablets and smartphones, a selection including Motorola, Notion Ink, BlackBerry, and Vizio — a veritable graveyard of brands. It was also the year of Android tablets set up for the future using Honeycomb… another dud, as it turned out.

Vroom vroom. We might take for granted that cars are a major component of CES today — after all, they are essentially very large, mobile pieces of hardware — but that wasn’t always the case (not least because the mammoth Detroit Auto Show is just around the corner in the convention calendar). In 2011, Ford unveiled its first foray into electric cars at the show, setting up a decade of major car advances getting launched at CES.

CES 2012

Remember when Nokia was the world’s biggest mobile handset maker? This was the year that it made some critical shifts in its downturn. CES 2012 was the event where the company unveiled its first Windows Phone-powered smartphone for the US market, to ship exclusively via AT&T. This proved to be a step along the road to Microsoft buying Nokia’s handset business outright, an ill-fated move that ultimately left both brands in a ditch as far as that market was concerned. But in 2012, there was still a lot of hope and enthusiasm for both.

More smart TV advances: Today we take integrated services and very light hardware like Fire TV sticks and Chromecasts for granted. That was not the story in 2012, though, when a Samsung TV that integrated DirecTV without a box made headlines.

Steve Ballmer gave his last Microsoft keynote at CES (because Microsoft pulled out of the show keynote roster after that year) and announced a date for Kinect for Windows (some two years after highlighting gesture as something that was going to be coming at us fast).

CES 2013

Hoppin’ mad! This was the year that big media took a bite out of streaming media. CNET, which had been running ‘best of’ awards on behalf of CES for years, was asked to remove the ‘best of show’ award that had been given to Dish’s Hopper with Sling (which let you watch programs recorded on your Dish DVR on your iPad) after the legal team of its parent, CBS, intervened. The interference was nefarious: CBS was embroiled in a lawsuit with Dish, and that tainted the whole business of then bestowing the award upon Razer instead. The whole thing was uncovered and criticized, even by CNET itself, and Dish was awarded Best of Show some weeks later. As with so much else in the question about the best way to move content from one device to another, the answer was coming from somewhere else altogether: the cloud. That is to say, streaming services have trumped both the Dish Hopper and whatever else was competing against it.

Nvidia Shield. A big leap for Nvidia, the company that had already made its name with graphics and other processors used in high-performance computing devices and connected cars for gaming, artificial intelligence applications and more. 2013 was the year it revealed its own hardware in the form of a gaming device called Project Shield, powered by its newest processors. Shield has continued to grow over the years.

Oculus Rift. It was still going to be another year and a bit before Oculus got snapped up by Facebook for $2 billion, and the Rift virtual reality headset didn’t actually launch at CES, but this was a kind of coming-out party for it nonetheless, since it was the first time that the company had set up a stand to provide demos to any and all comers. The result was a lot of buzzy exposure (reported on by multiple outlets) to build up interest in the device. This in itself was a stage two media strategy: The company had launched on Kickstarter with a lot of fanfare prior to this — another notable trend for CES this year, where another crowdfunded blockbuster, the Pebble Smartwatch, exhibited for the first time, too.

CES 2014

Wearing thin… Wearable tech was everywhere at CES this year and definitely became a bigger theme as the year went on. Indeed Google did the wide launch of Google Glass as a consumer product just a few months later, although it took Apple until April 2015 to launch its first Watch. (That Watch, incidentally, has been one of the most successful of all wearable efforts…. There’s that late mover advantage again). Many voices were already crying foul: Just because you can build something to put on your wrist, or head, or finger, or on your jacket, or wherever… does that mean you necessarily should? And if you do, will anyone want to buy it, or will it collapse into history books as a novelty?

Honk honk. After a couple of fallow years where cars were certainly present but not previewing blockbuster changes, 2014 was the year they changed gears. Google announced the Open Automotive Alliance, including partnerships with GM, Audi, Honda, Hyundai and Nvidia for Android-powered in-car systems.

Netflix and streaming. The streaming wars really didn’t kick off until the year after this, but for a little taster of how OTT services like Netflix’s would soon dominate the conversation about video at CES and elsewhere: this was the year that Netflix CEO Reed Hastings took to the stage to announce an exclusive 4K (high resolution) version of hit series House of Cards that would stream on 4K-capable LG TVs.

VR expansion. Oculus (still yet to be acquired by Facebook but easily emerging as a power player in the VR space) released a new version of its headset. Sony followed suit and others like Meta, working in the area of VR meeting augmented reality, also showed off their new kit. The momentum wouldn’t last: Meta and several more efforts are now defunct, others are struggling, and many are wondering what the mainstream market for VR and AR headsets will be longer term. (We’re still waiting to see whether Apple will launch a device here, too.)

CES 2015

http://bit.ly/2ZYALCE

Smart Home gets smarter. Google had already closed its deal to acquire Nest by the time CES 2015 rolled around, and the latter company’s momentum at the show spoke a lot to why it got snapped up and integrated, and why it continues to be a central part of Google’s home strategy. Nest announced a number of new partners at the show through its “works with Nest” program.

Meanwhile, Apple — long without an official presence at the show — continued to make itself a part of the conversation, specifically in the area of the connected home. The ecosystem for Apple’s HomeKit platform for managing connected smart devices by way of your iPhone grew by some way during CES with the announcement of a number of new partners.

Robots and spycams and drones, oh my! Improvements in AI-based technology such as computer vision, voice recognition and more led to a wave of devices aimed at doing our bidding and, ultimately, helping people see and do more than they’d be able to on their own. This bigger trend expanded to creepy humanoids, as well as camera-equipped drones and security devices. The huge swing we’ve seen toward an awareness of our privacy in recent times was not so apparent in 2015, so I wonder how this theme would be handled today.

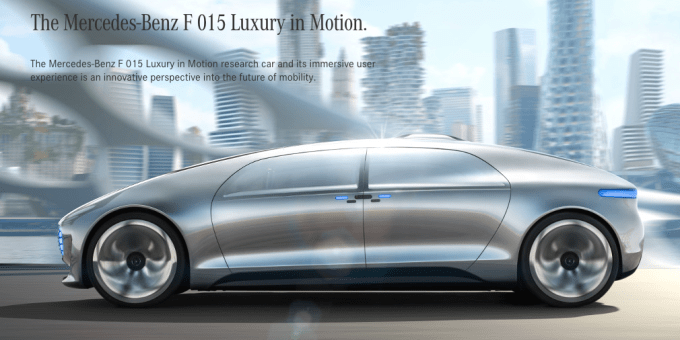

Mercedes Benz F 015 – concept autonomous car. This is just one vehicle, literally speaking. It’s a prototype that may never see the light of day on a production line, at least not for a long time. But I’m highlighting it here as a sign of the times. 2015 saw yet more vehicles, yet more autonomous demos, and yet more moves from a range of players to plant their flags in the self-driving market. We’re still far from seeing any wide-scale commercial products or services for a number of reasons, but the amount of investment in this area continues to grow, with the hope and expectation that the tech, the consumer appetite, and regulations will all align in its favor. Other big news in automotive included Nvidia’s new car platforms.

Plus, too many selfie sticks.

CES 2016

Coming to a screen near you. Netflix has both spearheaded and in many ways led the focus on streamed video in the world of entertainment, and this year it used the show as a platform to announce a huge shift: It was expanding its service to 130 countries, a massive international push. While there are a lot of localized players, and I’d argue that Netflix doesn’t have quite the right mix of local and general content abroad as it does in its home market (where the catalog is genuinely far bigger), this was a huge move that continues to set Netflix up for more growth in the future, perhaps via consolidation.

As automotive tech continued to be a major theme at CES, there was a new twist this year. One of the huge automotive giants, GM, used the event to unveil not just a new electric car model, but also a $500 million investment in one of the on-demand transportation giants, Lyft. The rationale: to tap into what might be a new market to supply with its future autonomous vehicles, and to get a stake in one of the bigger startups transforming how people get from A to B (which will ultimately have an impact on how, and if, they buy cars).

CES 2017

Alexa, Everywhere. While the Echo was launched back in 2015, and we saw the beginnings of Alexa-integrated devices and Echo-based hardware the year before by way of Amazon’s platform plays, it was really in 2017 that this trend became nearly all-pervasive, appearing not just in speakers made by third parties, but refrigerators and more. Given the strong recurring theme of home gadgets at the show, this has made Amazon a big presence at the huge event. It still raises the question, though, of how successful any of these integrations have been. We may have a lot of “connected” devices in our homes, including smart speakers, but how much are we really using them?

CES 2018

Google Assistant, Everywhere. Not to be outdone by Amazon or Apple in years before, 2018 was the year that Google permeated CES with its own voice, or more specifically its voice bot, the Google Assistant. In addition to blanketing trains, billboards and more with Google slogans, slides and gumball machines, the company’s name and Assistant were brought up in connection with most of the event’s biggest hardware and software splashes, by way of integrations. It’s not a guarantee that this will actually get people to use it, but having the ubiquity makes it ever more convenient for those who do want to go that route. It will be interesting to see how and if this continues as a theme in 2020 and beyond. My guess is that simple messages of “it’s there” will not be enough to hold interest longer term.

CES 2019

LAS VEGAS, NEVADA – JANUARY 08: An attendee walks by the Huawei booth at CES 2019 at the Las Vegas Convention Center on January 8, 2019 in Las Vegas, Nevada. CES, the world’s largest annual consumer technology trade show, runs through January 11 and features about 4,500 exhibitors showing off their latest products and services to more than 180,000 attendees. (Photo by David Becker/Getty Images)

Bull in a China shop. The theme of how Chinese companies grow and operate in the US and other Western markets (two major vectors that are being buffeted by large political questions around tariffs and national security) has been a big one in the tech world for a while now. It was interesting to see it play out at CES last year. The event benefits from a massive number of visitors from China, and also exhibitors, and so many eyes were on both. It played out in some big ways: Huawei downplayed its presence, and ZTE didn’t come at all. And Gary Shapiro, head of the Consumer Technology Association, which organizes CES, came out on the side of doing business with China, criticizing President Trump’s strategy. Given that Trump’s daughter Ivanka will be a keynote speaker this year, all will be watching how and if this theme will continue to develop or simply get swept under the rug.

Other themes last year included more advanced media streaming that moved further away from being tied to large and expensive middleware (the only expensive hardware that matters being the big TV screens), and a kind of truce in the voice assistant matrix: support for multiple assistants on single devices.

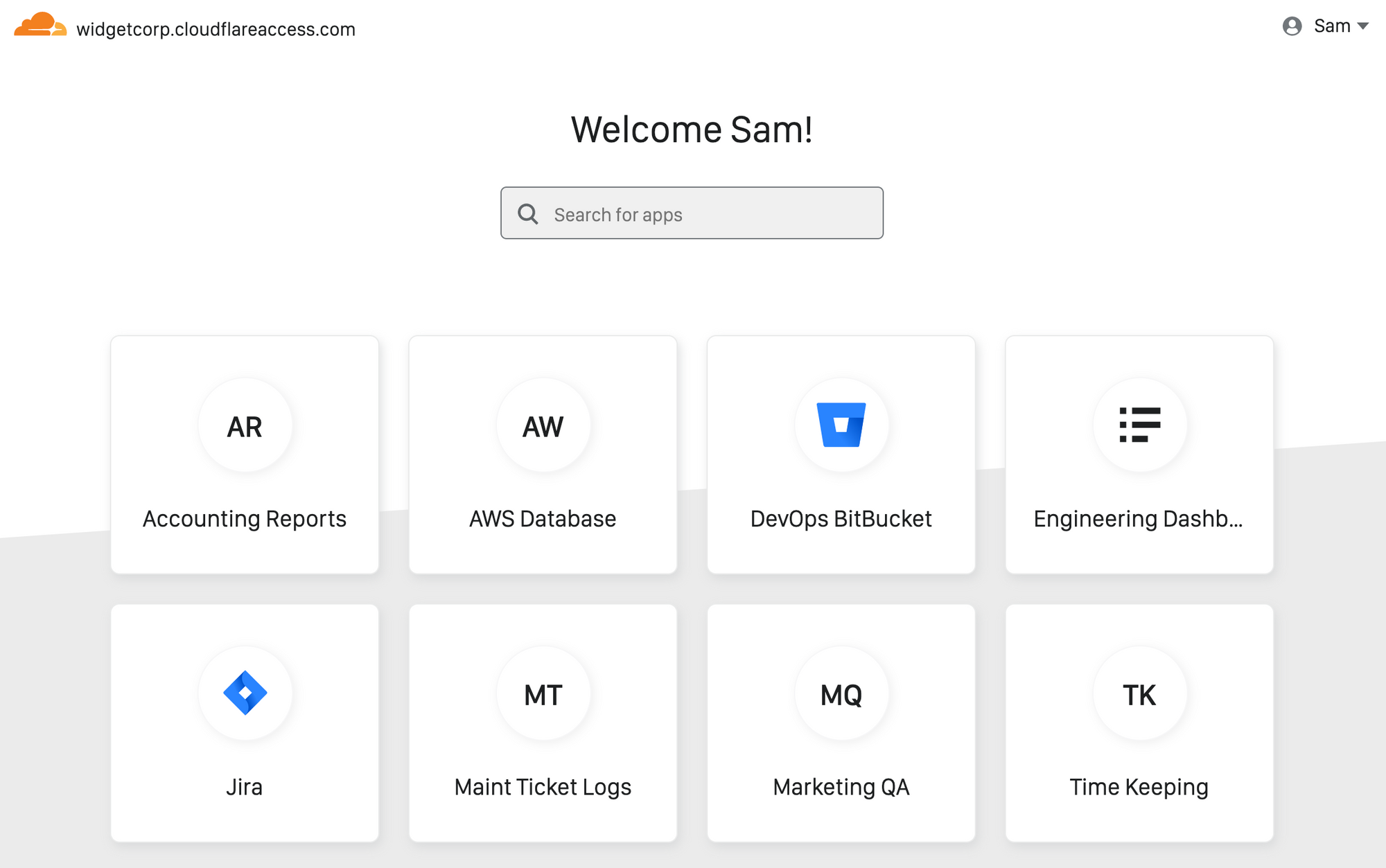

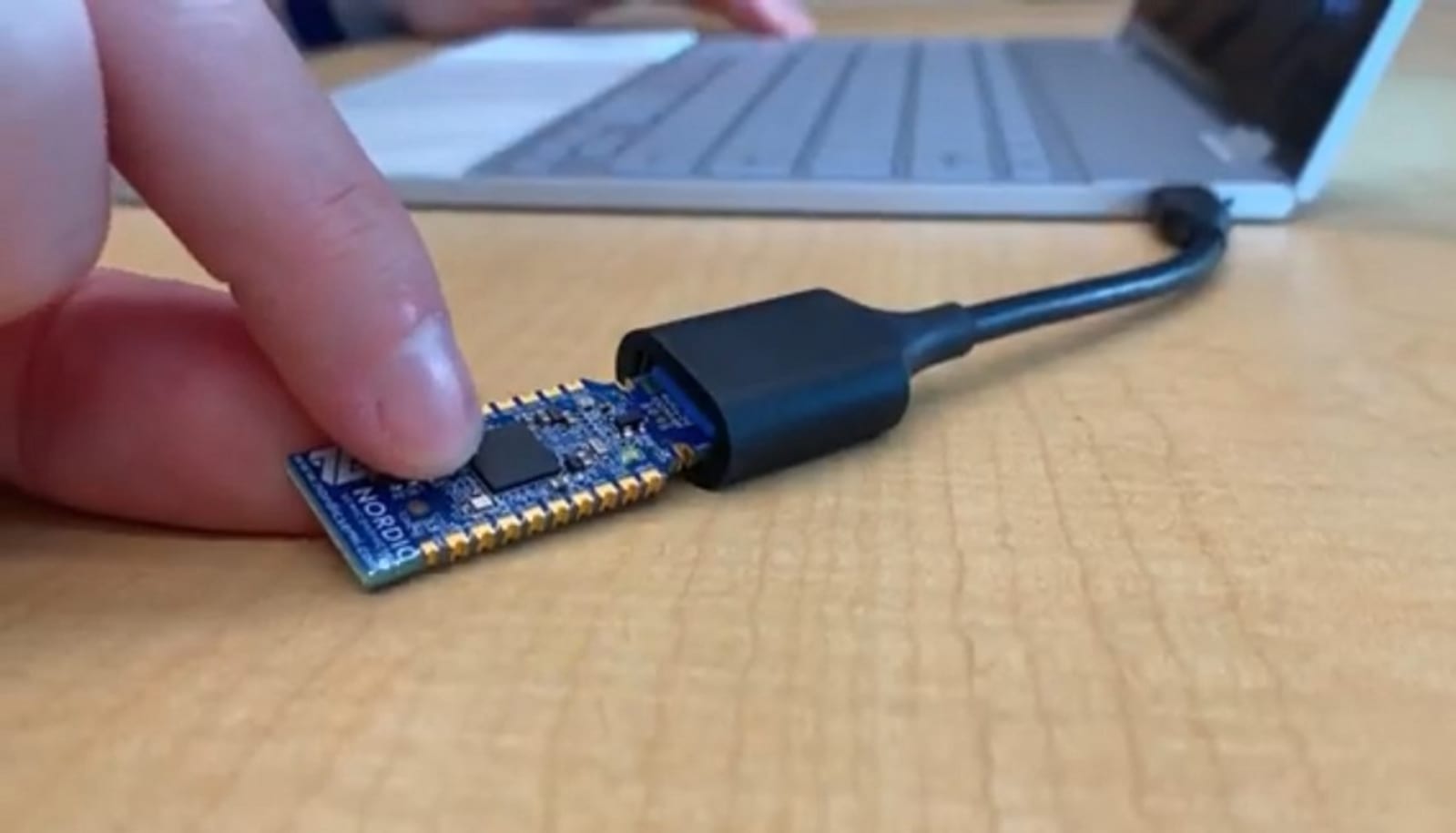

Security keys are designed to make logging in to devices simpler and more secure, but not everyone has access to them, or the inclination to use them. Until now. Today, Google has launched an open source project that will help hobbyists and hardware…

Security keys are designed to make logging in to devices simpler and more secure, but not everyone has access to them, or the inclination to use them. Until now. Today, Google has launched an open source project that will help hobbyists and hardware…

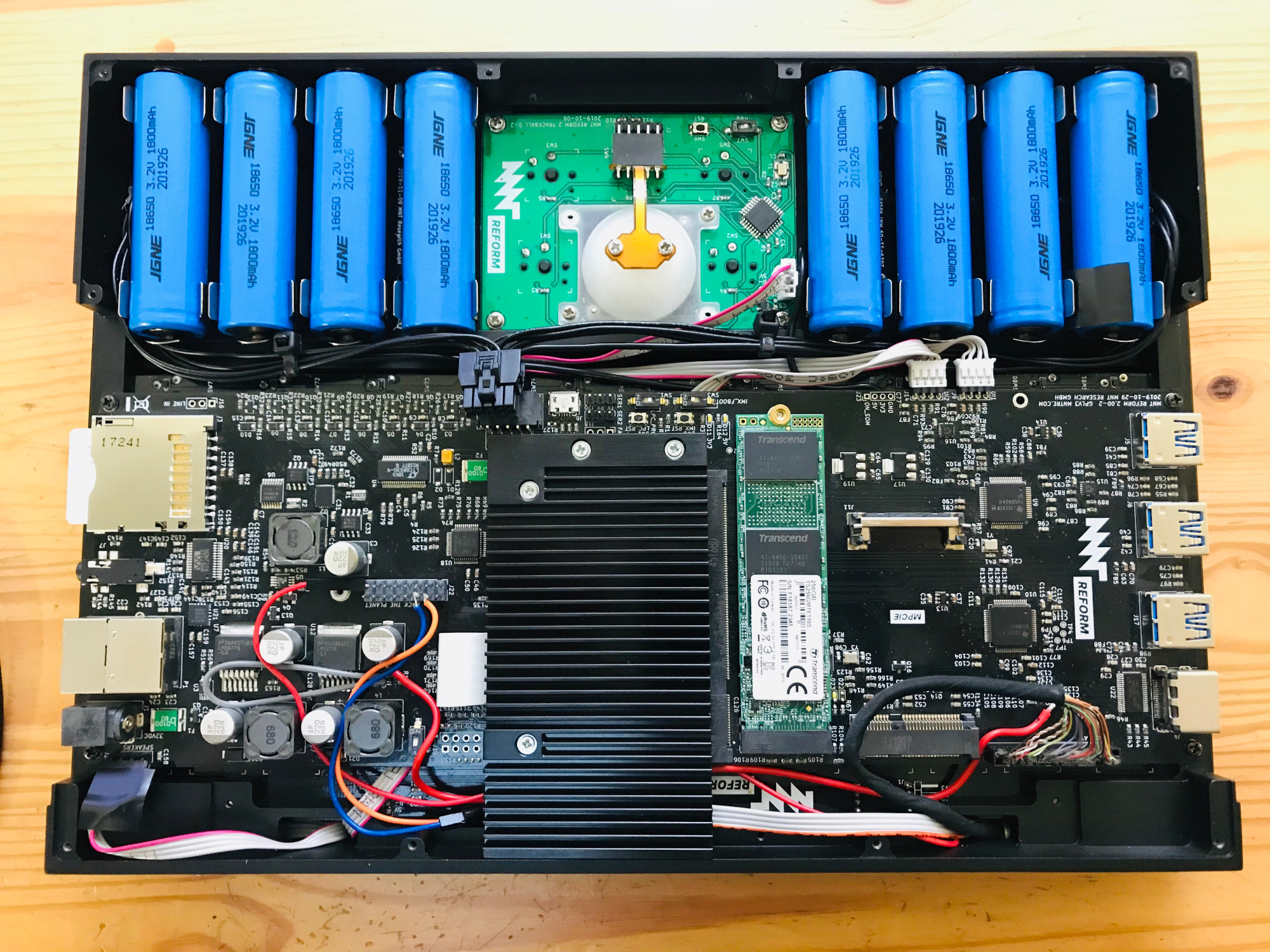

Since we started eagerly watching the Reform

Since we started eagerly watching the Reform

Shoppers at a UK mall have the opportunity to try out autonomous transport pods this week which — in a UK first — operate entirely without supervision. The driverless pods are being tested at the Cribbs Causeway mall in Gloucestershire, and run bet…

Shoppers at a UK mall have the opportunity to try out autonomous transport pods this week which — in a UK first — operate entirely without supervision. The driverless pods are being tested at the Cribbs Causeway mall in Gloucestershire, and run bet…

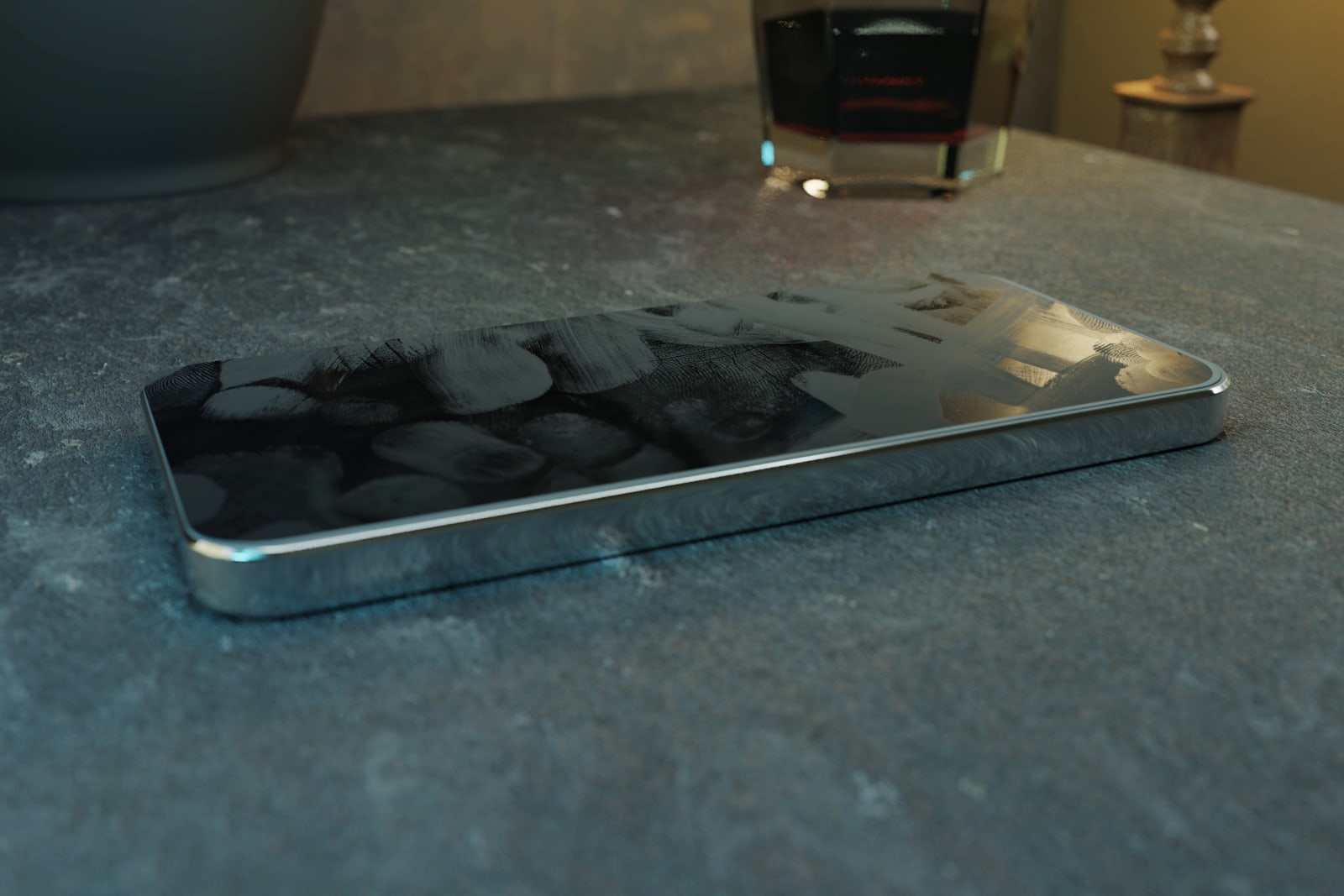

Face it, your phone screen is filthy. Think about all those times you texted from the toilet or scrolled through Instagram while riding the subway — those streaks on your screen aren't just schmutz, they're breeding grounds for bacteria. But that's…

Face it, your phone screen is filthy. Think about all those times you texted from the toilet or scrolled through Instagram while riding the subway — those streaks on your screen aren't just schmutz, they're breeding grounds for bacteria. But that's…