
Teams Retention

In April 2018, Microsoft introduced support for Teams as a workload processed by Office 365 retention policies. The concept is simple. Teams captures compliance records for channel conversations and private chats in Exchange Online mailboxes, including messages from guest and hybrid users.

When you implement a Teams retention policy, the Exchange Managed Folder Assistant (MFA) processes the mailboxes to remove the compliance records based on the policy criteria. MFA uses the creation date of a compliance record in a mailbox to assess its age for retention purposes. Because Office 365 creates compliance records very soon after users post messages in Teams, the creation date of a compliance record closely matches the posting date of the original message in Teams.
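To make the age test concrete, here is a minimal Python sketch of the rule described above: an item becomes eligible for removal once the time elapsed since its creation date exceeds the retention period. The 30-day period matches the policy discussed later in this article. The helper name `is_expired` and the strict "greater than" comparison at the boundary are assumptions for illustration, not a description of how MFA evaluates the cutoff internally.

```python
from datetime import datetime, timedelta


def is_expired(created: datetime, run_time: datetime, retention_days: int) -> bool:
    """Hypothetical helper: True if an item is older than the retention period.

    Age is measured from the compliance record's creation date, which closely
    tracks the time the original Teams message was posted. Whether MFA uses a
    strict or inclusive comparison at the boundary is an assumption here.
    """
    return run_time - created > timedelta(days=retention_days)


# Dates taken from the folder statistics and MFA log shown later in this article.
mfa_run = datetime(2018, 8, 2, 16, 46, 23)
oldest_before = datetime(2017, 3, 11, 15, 41, 34)
oldest_after = datetime(2018, 7, 20, 8, 17, 9)

print(is_expired(oldest_before, mfa_run, 30))  # True: well over 30 days old
print(is_expired(oldest_after, mfa_run, 30))   # False: only about 13 days old
```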

A background synchronization process replicates the deletions to the Teams data service on Azure, and eventually the deletions show up in clients.

A Pragmatic Implementation

If you were to design a retention mechanism from scratch, you might not take the same approach. However, the implementation is pragmatic because it takes advantage of existing components, like MFA. The downside is that with so many moving parts, it’s hard to know whether a retention policy is having the right effect.

Setting a Baseline

Before a retention policy runs against a mailbox, we need to understand how many Teams compliance items it contains. This command tells us how many compliance items exist in a mailbox (group or personal) and reveals details of the oldest and newest items in the “Team Chat” folder.

```powershell
Get-MailboxFolderStatistics -Identity "HydraProjectTeam" -FolderScope ConversationHistory -IncludeOldestAndNewestItems |
    Where-Object {$_.FolderType -eq "TeamChat"} |
    Format-Table Name, ItemsInFolder, NewestItemReceivedDate, OldestItemReceivedDate
```

```
Name      ItemsInFolder NewestItemReceivedDate OldestItemReceivedDate
----      ------------- ---------------------- ----------------------
Team Chat           227 2 Aug 2018 16:10:41    11 Mar 2017 15:41:34
```

MFA Processes a Mailbox

After you create a Teams retention policy, Office 365 publishes details of the policy to Exchange Online and the Managed Folder Assistant begins to process mailboxes against the policy. MFA operates on a workcycle basis, which means that it tries to process every mailbox in a tenant at least once weekly. MFA does not process mailboxes with less than 10 MB of content because it’s unlikely that they need the benefit of a retention policy. This behavior is not specific to Teams; it’s just the way that MFA works.
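The two scheduling rules above can be expressed as a small Python sketch, which is handy when you are deciding whether a mailbox should already have been processed. Treating "10 MB" as 10 × 1024 × 1024 bytes is an assumption, and the weekly workcycle is a target that MFA tries to meet rather than a guarantee; both helper names are hypothetical.

```python
from datetime import datetime, timedelta

MIN_SIZE_BYTES = 10 * 1024 * 1024  # "10 MB" threshold; binary megabytes are an assumption
WORKCYCLE = timedelta(days=7)      # MFA aims to process each mailbox at least weekly


def mfa_processes(mailbox_size_bytes: int) -> bool:
    """Hypothetical check: MFA skips mailboxes holding less than 10 MB of content."""
    return mailbox_size_bytes >= MIN_SIZE_BYTES


def latest_expected_run(last_run: datetime) -> datetime:
    """Latest time by which the next MFA pass is expected under a weekly workcycle."""
    return last_run + WORKCYCLE


print(mfa_processes(5 * 1024 * 1024))                         # False
print(latest_expected_run(datetime(2018, 8, 2, 16, 46, 23)))  # 2018-08-09 16:46:23
```

If a mailbox that clears the size threshold shows no new `ELCLastSuccessTimestamp` well past this date, it is worth investigating.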

When MFA processes a mailbox, it updates some mailbox properties with details of its work. We can check these details as follows:

```powershell
$Log = Export-MailboxDiagnosticLogs -Identity HydraProjectTeam -ExtendedProperties
$xml = [xml]($Log.MailboxLog)
$xml.Properties.MailboxTable.Property | Where-Object {$_.Name -like "ELC*"}
```

```
Name                                    Value
----                                    -----
ElcLastRunTotalProcessingTime           1090
ElcLastRunSubAssistantProcessingTime    485
ElcLastRunUpdatedFolderCount            33
ElcLastRunTaggedFolderCount             0
ElcLastRunUpdatedItemCount              0
ElcLastRunTaggedWithArchiveItemCount    0
ElcLastRunTaggedWithExpiryItemCount     0
ElcLastRunDeletedFromRootItemCount      0
ElcLastRunDeletedFromDumpsterItemCount  0
ElcLastRunArchivedFromRootItemCount     0
ElcLastRunArchivedFromDumpsterItemCount 0
ELCLastSuccessTimestamp                 02/08/2018 16:46:23
ElcFaiSaveStatus                        SaveSucceeded
ElcFaiDeleteStatus                      DeleteNotAttempted
```
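If you want to archive these diagnostics or compare them across runs, you can apply the same filter outside PowerShell. The following Python sketch assumes you have saved the `MailboxLog` XML string to text and that each `Property` element carries `Name` and `Value` child elements, which is how the PowerShell expression above navigates it; check that layout against your own export. PowerShell's `-like` operator is case-insensitive, which is why `ELCLastSuccessTimestamp` matches `ELC*`, so the sketch lower-cases names before comparing. The sample XML is a trimmed, hypothetical stand-in, and `OtherProperty` is a placeholder name.

```python
import xml.etree.ElementTree as ET


def elc_properties(mailbox_log_xml: str) -> dict:
    """Return {name: value} for MailboxTable properties whose name starts with 'elc'.

    Assumes the layout Properties/MailboxTable/Property, with Name and Value
    as child elements. The prefix match is case-insensitive, mirroring -like.
    """
    root = ET.fromstring(mailbox_log_xml)  # root element is <Properties>
    result = {}
    for prop in root.findall("./MailboxTable/Property"):
        name = prop.findtext("Name", default="")
        if name.lower().startswith("elc"):
            result[name] = prop.findtext("Value", default="")
    return result


sample = """<Properties><MailboxTable>
  <Property><Name>ElcLastRunDeletedFromRootItemCount</Name><Value>0</Value></Property>
  <Property><Name>ELCLastSuccessTimestamp</Name><Value>02/08/2018 16:46:23</Value></Property>
  <Property><Name>OtherProperty</Name><Value>n/a</Value></Property>
</MailboxTable></Properties>"""

print(elc_properties(sample))
```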

Unfortunately, the MFA statistics don’t tell us how many compliance records it removed. If you run the same commands against a user mailbox, you’ll see the number of deleted items recorded in ElcLastRunDeletedFromRootItemCount. This doesn’t happen for Teams compliance records, perhaps because Exchange regards them as system items.

Compliance Items are Removed

Because MFA doesn’t tell us how many items it removes, we have to check the mailbox again. This time we see that the number of compliance records has shrunk from 227 to 3 and that the oldest item in the folder is from 20 July 2018. Users can’t access the Team Chat folder with clients like Outlook or OWA, so only a system process can remove these items, and we can therefore conclude that MFA has done its work.

```powershell
Get-MailboxFolderStatistics -Identity "HydraProjectTeam" -FolderScope ConversationHistory -IncludeOldestAndNewestItems |
    Where-Object {$_.FolderType -eq "TeamChat"} |
    Format-Table Name, ItemsInFolder, NewestItemReceivedDate, OldestItemReceivedDate
```

```
Name      ItemsInFolder NewestItemReceivedDate OldestItemReceivedDate
----      ------------- ---------------------- ----------------------
Team Chat             3 2 Aug 2018 16:10:41    20 Jul 2018 08:17:09
```
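Keeping an eye on these figures is the only reliable evidence that retention is working, so it can help to record each snapshot and compare them. This Python sketch uses the two sets of numbers reported above. The class and function names are hypothetical. The 30-day period is an assumption borrowed from the policy described under "The Effect of Retention", because the article does not state the period applied to this mailbox. Note that the difference in item counts is a net figure: any records created between snapshots would offset deletions, so it is a lower bound on what MFA removed.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class TeamChatSnapshot:
    """Figures copied from Get-MailboxFolderStatistics output for the Team Chat folder."""
    items: int
    newest: datetime
    oldest: datetime


def removed_count(before: TeamChatSnapshot, after: TeamChatSnapshot) -> int:
    """Net drop in compliance records; a lower bound on the number MFA removed."""
    return before.items - after.items


def oldest_within_retention(snap: TeamChatSnapshot, run_time: datetime, retention_days: int) -> bool:
    """True if the oldest remaining record is no older than the retention period."""
    return run_time - snap.oldest <= timedelta(days=retention_days)


baseline = TeamChatSnapshot(227, datetime(2018, 8, 2, 16, 10, 41), datetime(2017, 3, 11, 15, 41, 34))
after_mfa = TeamChatSnapshot(3, datetime(2018, 8, 2, 16, 10, 41), datetime(2018, 7, 20, 8, 17, 9))
mfa_run = datetime(2018, 8, 2, 16, 46, 23)

print(removed_count(baseline, after_mfa))                 # 224
print(oldest_within_retention(after_mfa, mfa_run, 30))   # True
```

Because the newest item date is unchanged between the two snapshots, no new records arrived in between, so here the net figure of 224 is also the number of records MFA removed.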

Synchronization to Teams

Background processes replicate the deletions made by MFA to Teams. It’s hard to predict exactly when items will disappear from user view because two things must happen. First, the items are removed from the Teams data store in Azure. Second, clients synchronize the deletions to their local cache.

In tests that I ran, it took between four and five days for the cycle to complete. For example, in the test reported above, MFA ran on August 2 and clients picked up the deletions on August 7.
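When you are telling users when to expect old messages to disappear, a small calculation like the Python sketch below can turn an MFA run date into a date window. The four- and five-day defaults come only from the tests described here; they are an assumption, not a service guarantee, and may shrink as Microsoft improves replication. Both helper names are hypothetical.

```python
from datetime import date, timedelta
from typing import Tuple


def sync_lag_days(mfa_run: date, seen_in_clients: date) -> int:
    """Days between MFA removing records and clients showing the deletions."""
    return (seen_in_clients - mfa_run).days


def expected_client_window(mfa_run: date, min_days: int = 4, max_days: int = 5) -> Tuple[date, date]:
    """Earliest and latest dates to expect the deletions to show up in clients.

    The defaults reflect only the observed test results; treat them as an assumption.
    """
    return mfa_run + timedelta(days=min_days), mfa_run + timedelta(days=max_days)


print(sync_lag_days(date(2018, 8, 2), date(2018, 8, 7)))  # 5
print(expected_client_window(date(2018, 8, 2)))
```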

You might not think that such a time lag is acceptable. Microsoft agrees, and is working to improve the efficiency of replication from Exchange Online to Teams. For now, however, you should expect several days to elapse before clients show the effect of a retention policy.

The Effect of Retention

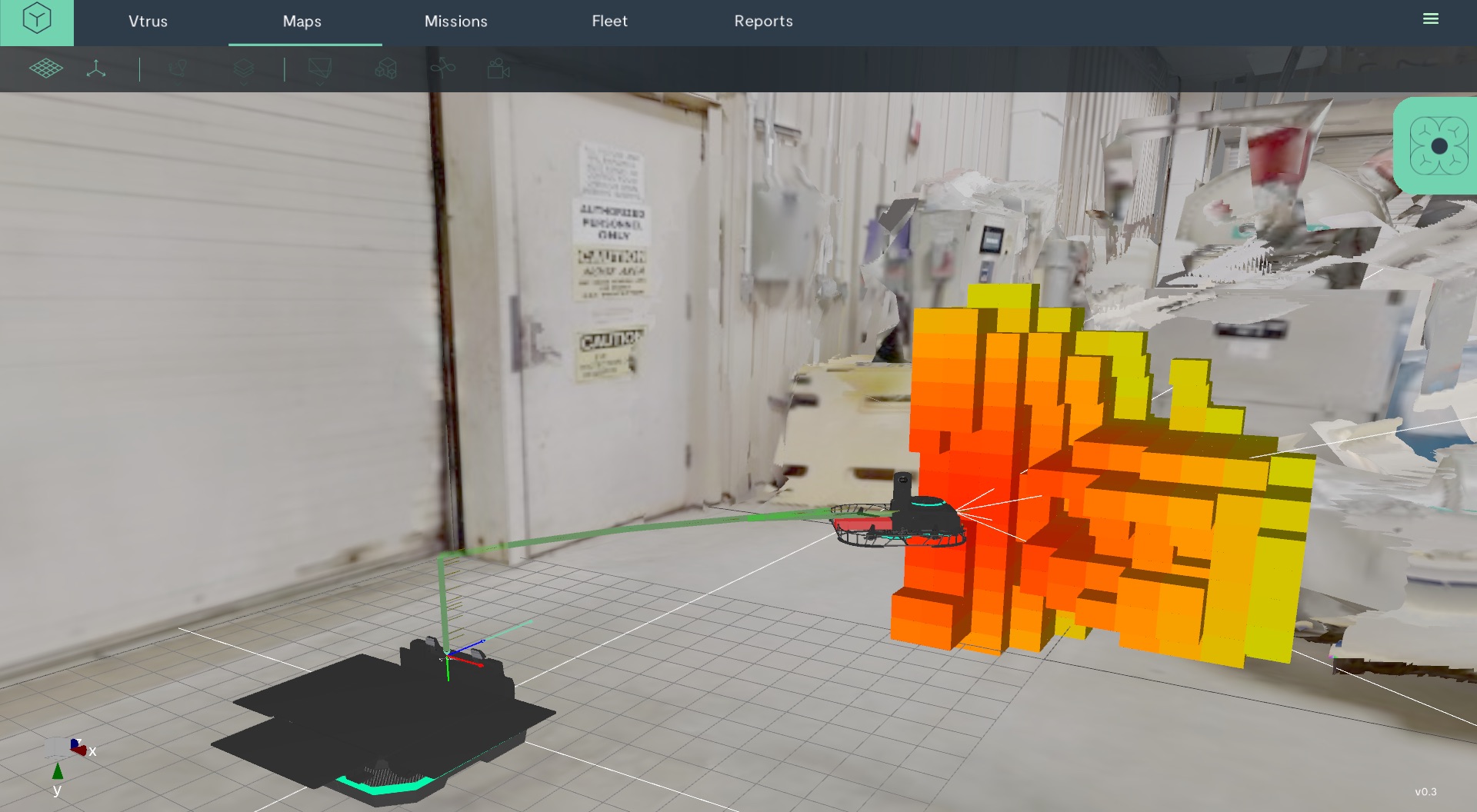

Figure 1 shows a channel after a retention policy removed any item older than 30 days. What’s immediately obvious is that some items older than 30 days are still visible. One is an item (Oct 25, 2017) created by an RSS connector to post notifications about new blog posts in the channel. The second (March 3, 2018) is from a guest user. The other visible messages are system messages, which Teams does not capture for compliance purposes.

Figure 1: Channel conversations after a retention policy runs (image credit: Tony Redmond)

The RSS item is still shown in Figure 1 because, until recently, Office 365 did not process items created in Teams by Office 365 connectors for compliance purposes. It does now, and the most recent run of MFA removed the connector items. However, Office 365 might fail to ingest some older items, in which case they will linger in channels because no compliance records exist for them.

We also see an old message posted by a guest user. Teams only began capturing hybrid user messages in January 2018, and Microsoft intends to go back in time to capture earlier messages as resources allow. Teams uses the same mechanism to capture guest user messages, but evidently Microsoft hadn’t processed back this far when I ran these tests. Other messages posted by guest users are gone because compliance records existed for them.

It’s worth noting that compliance records for guest-to-guest 1×1 chats are not processed by MFA. This is because MFA cannot access the phantom mailboxes used by Exchange to capture compliance records for the personal chats of guest and hybrid users. Guest contributions to channel conversations are processed because these items are in group mailboxes.
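The rules in this section boil down to a simple decision: a message disappears only if a compliance record exists, MFA can reach the mailbox holding it, and the record is older than the retention period. The Python sketch below is a simplified model of that behavior; the enum members and field names are hypothetical, and the model ignores the multi-day synchronization delay before clients reflect a deletion.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Kind(Enum):
    CHANNEL_POST = auto()         # member, guest, or connector post; records live in the group mailbox
    USER_CHAT = auto()            # personal chat with a record in a user mailbox
    SYSTEM_MESSAGE = auto()       # Teams does not capture these for compliance
    GUEST_TO_GUEST_CHAT = auto()  # records live in phantom mailboxes that MFA cannot access


@dataclass
class Message:
    kind: Kind
    has_compliance_record: bool  # False for items Office 365 never ingested
    expired: bool                # older than the retention period


def removed_by_retention(msg: Message) -> bool:
    """Hypothetical model of whether a retention policy eventually removes a message."""
    if msg.kind is Kind.SYSTEM_MESSAGE:
        return False  # never captured, so there is nothing for MFA to delete
    if msg.kind is Kind.GUEST_TO_GUEST_CHAT:
        return False  # MFA cannot process the phantom mailboxes
    return msg.has_compliance_record and msg.expired


# The Oct 2017 RSS item before connector posts were ingested: no record, so it lingers.
print(removed_by_retention(Message(Kind.CHANNEL_POST, has_compliance_record=False, expired=True)))  # False
# A guest's old channel post with a record in the group mailbox is removed.
print(removed_by_retention(Message(Kind.CHANNEL_POST, has_compliance_record=True, expired=True)))   # True
```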

Some Tracking Tools Would be Useful

The Security and Compliance Center will tell you that a retention policy for Teams is on and operational (Status = Success), but after that, no information is available about how the policy operates, when teams are processed, how many items are removed from channels and personal chats, and so on. There are no records in the Office 365 audit log and nothing in the usage reports either. All you can do is keep an eye on the number of compliance records in mailboxes.

Follow Tony on Twitter @12Knocksinna.

The post Proving that Teams Retention Policies Work appeared first on Petri.