The content below is taken from the original (Happy 10th Birthday Hyper-V!). To continue reading, please visit the site. Remember to respect the Author & Copyright.

In this post, I will look back on the history of Hyper-V, and look to the future of Microsoft’s virtualization.

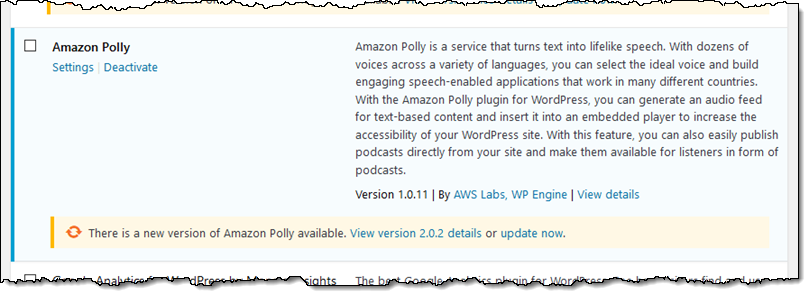

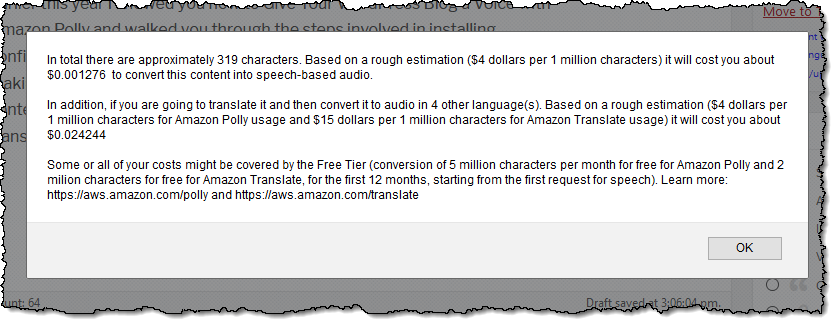

![Happy 10th Birthday Hyper-V [Image Credit: Jeff Woolsey, @WSV_GUY]](https://www.petri.com/wp-content/uploads/2018/06/Hyper-V10YearsOldCake.jpg)

Happy 10th Birthday Hyper-V [Image Credit: Jeff Woolsey, @WSV_GUY]

It All Started With An Acquisition

On February 19, 2003, Microsoft announced that it had acquired a privately held virtualization vendor called Connectix. It might not have seemed like it then, but this acquisition was the genesis of something huge in the IT world, and I’m not limiting that to just Hyper-V.

Two Connectix products, Virtual PC and Virtual Server, were brought into the Microsoft portfolio. After brief betas, both products were made available … for purchase. At the time, I was running the Microsoft infrastructure for an international finance company. I needed to get more capacity out of underutilized hardware, and virtualization made sense. My test lab, a duplicate of core production systems, ran on the beta of Virtual Server 2005, and I used Virtual PC on our desktops for smaller-scale work such as application distribution. On day 1 of general availability, I contacted our licensing provider and bought 2 copies of Virtual Server and 3 copies of Virtual PC – one of those Virtual Server copies went into production, and we started redeploying physical machines onto a single host.

Microsoft went on to make Virtual Server and Virtual PC free products. Virtual Server 2005 R2 was released and Virtual Server 2005 R2 SP1 followed. These products filled a gap for Microsoft. Meanwhile, a small company called VMware was busy becoming a big company. Publicly, Microsoft pushed Virtual Server. In the background, they were working on “codename Viridian” which would become Windows Server 2008 Hyper-V.

The above photo, by Jeff Woolsey, Principal Program Manager, Windows Server/Hybrid Cloud, was taken at the product team party. It mentions some other code names that I am unfamiliar with: vCode and Vertiflex. If you know them, please share in the comments below!

Windows Server 2008

The beta and launch of Windows Server 2008 were the first time I had a “non-customer” relationship with Microsoft. I was working with a hosting company, building a “next generation” platform on blade servers, SAN, and VMware software. I was invited to attend a series of boot camps and found myself engaging with the Microsoft IT pro evangelists. We were focused on VMware at work, but Hyper-V caught my interest. I was a huge fan of System Center back then; I thought that if I ever built a green/grey field infrastructure again, System Center Operations Manager would be the third thing I would build, after domain controllers and WSUS. I looked at Hyper-V and wondered about the integrations that Microsoft could bring like no one else, but it was a technology I did not expect to use.

Things changed, and I found myself at a start-up company where Hyper-V was our choice of hypervisor. This was the first time Microsoft would release an enterprise-class hypervisor. Even in the beta releases, it performed well. I built a physical infrastructure on the beta while awaiting the GA release, which came after the release of WS2008. I can remember that sunny evening, pressing F5 while waiting for the download link to appear, and putting the release into production that same night.

The performance lived up to expectations, but this was very much a v1.0 release, and some improvement was needed. I can remember the first time I spoke at an event about Hyper-V. The room was full of “vFanboys”, and I left the room black-and-blue from their criticism of the lack of Live Migration (the equivalent of vMotion) and Dynamic Memory in Hyper-V.

Typical of Microsoft back then, the release of WS2008 wasn’t even out the door before the planning for WS2008 R2 had started.

Windows Server 2008 R2

Live Migration. That’s the one thing I remember most about this release. Finally, we had the ability to move a virtual machine from one clustered host to another with no apparent downtime. I know that there were lots more features, but this was the Live Migration release.

This was when small and medium businesses started to discover virtualization – Microsoft effectively made Hyper-V free if your virtual machines were licensed for the current version of Windows Server. WS2008 was a curiosity, but WS2008 R2 caused an explosion in Hyper-V adoption. My bet is that huge numbers of WS2008 R2 hosts are still out there, never upgraded.

A Service Pack 1 release followed and added another significant feature: Dynamic Memory. With DM enabled, a virtual machine consumed only the physical memory it needed for current usage, plus a buffer. I personally found this to be hugely beneficial, and I think it helped with the marketing of Hyper-V, but I wonder how many end customers actually turned it on in production.
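The sizing behavior described above can be sketched as a simplified model. The Python below is a hypothetical illustration, not Hyper-V's actual memory balancer: it assumes the target allocation is the VM's current demand plus a percentage buffer, clamped between per-VM minimum and maximum settings, and it ignores other factors such as how much memory the host has free. The function name and parameters are made up for this example.

```python
def assigned_memory_mb(demand_mb: int, buffer_pct: int,
                       minimum_mb: int, maximum_mb: int) -> int:
    """Hypothetical, simplified Dynamic Memory sizing rule.

    Assumption: target = current demand + buffer_pct percent of that demand,
    kept within the VM's configured minimum and maximum. Real Hyper-V also
    weighs host memory availability, which is not modeled here.
    """
    # Add the buffer on top of what the guest currently needs (integer MB).
    target = demand_mb * (100 + buffer_pct) // 100
    # Never drop below the minimum or grow past the maximum.
    return max(minimum_mb, min(target, maximum_mb))


if __name__ == "__main__":
    # A guest needing 2000 MB with a 20% buffer is given 2400 MB.
    print(assigned_memory_mb(2000, 20, 512, 8192))
```

In this model, an idle guest with 100 MB of demand would be held at the 512 MB minimum, and a busy one asking for 8000 MB would be capped at 8192 MB, so the minimum and maximum settings shape the result as much as the buffer does.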

Windows Server 2012

The Sinofsky era was in full swing. Microsoft was in full “copy Apple” mode – which wasn’t great. I decided to attend the first Build conference. Officially, it was supposed to be a Windows 8 announcement event, but two things convinced me that there would be Server content:

- The shared core OS, where Hyper-V lives (under the kernel)

- There was some language about Windows Server that I recognized

And yes, there was plenty of Windows Server content to keep me happy. Windows Server was focused on large enterprises and hosting companies with a huge number of improvements. What stood out for me were:

- Maturity of clustering: The storage & clustering team did a lot of work to improve operations and high availability.

- Networking: Converged networking, hardware offloads, and SMB 3.0 were huge changes. SMB 3.0 became Microsoft’s data center data transfer protocol. NVGRE provided software-defined networking, which powered the virtual networking of Azure.

- Software-defined storage: Storage Spaces was introduced, eventually evolving into the foundation of Storage Spaces Direct in Azure Stack.

- Live Migration for everyone: Live Migration became a standard Hyper-V feature that also worked between non-clustered hosts, so it was no longer just for those with a cluster (although Storage Spaces made clustering much more affordable).

I spoke at the UK launch of Windows Server 2012. What struck me was the incredible interest in Hyper-V from Fortune 500 companies. Another Irish MVP, Damian Flynn, and I set up whiteboards in the expo hall and were busier than any of the exhibitors. At the end of the day, we had a huge crowd semi-circling us, and the venue staff had to kick us out to close the hall down!

That was cool, but more importantly, a significant potential customer decided that Hyper-V was ready for the big time. This customer had developed its own hypervisor, called Red-Dog, but WS2012 convinced it to switch. And so it was that Microsoft Azure switched to Hyper-V.

Windows Server 2012 R2

This release was a maturation of WS2012 Hyper-V – bigger customers were using Hyper-V in scaled-out and multi-location deployments, and their data and feedback helped improve the product. Once again, there was a laundry list of improvements.

Many of those improvements were “little” changes that you don’t notice but whose effects are hugely beneficial. I can remember having lunch with some PMs who were giddy with ideas for the technical demos they could show because of the sheer quantity of new features.

Clustering started on the path of saying “not every glitch is worth a failover”, because failovers can be more disruptive than a glitch. Live Migration got faster with compression. SMB 3.0 took on a larger role: Live Migration could use it to migrate over multiple high-speed NICs. Port ACLs, probably used by a handful of people in a lab, enabled firewall rules in the virtual switch. Most of all, Linux became a first-class citizen – Microsoft introduced live VM backup for Linux into the Linux kernel.

If you review the list of features, you might wonder who benefits from them. Anyone using Azure probably does. RDMA is used in Azure. Converged networking is used in Azure. Port ACLs probably power Network Security Groups (NSGs). NVGRE and the hardware offloads, combined with the Mellanox components used in Azure, improve networking performance.

Windows Server 2016

I look back on this release with a certain level of satisfaction. Right after the GA of WS2012 R2, Microsoft reached out to customers like I had never seen before. Customer feedback combined with Microsoft’s vision drove Hyper-V (and related roles/features) in WS2016.

One of the big challenges I observed was the number of long-standing, loyal customers who were “stuck” on WS2008 R2 clusters that couldn’t be upgraded to something newer. Failover Clustering just didn’t support an in-place upgrade of any kind – you had to do a “swing migration” to a new cluster. WS2016 gave us a rolling cluster upgrade that worked beautifully. Hardware offloads improved performance again. Discrete Device Assignment allows virtual machines to connect directly to hardware (as with N-Series virtual machines in Azure). Hyper-V Manager finally got some improvements to help day-to-day operations.

Two big asks over the years were also addressed:

- Hot-add and hot-remove of NICs were added.

- A new software-defined backup system with built-in resilient change tracking finally stabilized the backup of Hyper-V virtual machines.

I’ve discussed the flow of features from Hyper-V into Azure, but that flow started to work the other way too. Host Resource Protection (which winds down CPU resources for virtual machines that attack the host) and Network Controller (software-defined orchestration and management/security features) came from Azure … some of the code was literally copy/pasted thanks to the shared platform.

Another thing that came from Azure was Nano Server. Nano, a tiny version of Windows with no admin command interface, originated in Azure. It was supposed to replace Windows Server Full Desktop and Server Core in platform roles such as Hyper-V hosts and storage servers. It seemed cool, but the deployment system was like something from the 1970s and it was a nightmare to own. After a hard push, Microsoft eventually admitted defeat, and Nano’s future lay in a different feature of WS2016.

Docker had become “a thing”. When people talk about “Docker”, they’re normally talking about Containers, a new form of virtualization (OS instead of machine) that can be managed by Docker or by other tools such as Kubernetes. In the early days of WS2016, Microsoft reached out to Docker and formed a partnership. The Hyper-V team, which spun off a Containers team, built Containers for Windows Server with Docker management. But they also built a more secure version based on Hyper-V partitions, called Hyper-V Containers. A Hyper-V container, designed for untrusted code, requires a mini-kernel, and nothing in the Windows portfolio is smaller than Nano Server – so this became the future of Nano Server.

This era of WS2016 and Windows 10 saw Hyper-V find new use cases. The partitions of Hyper-V provide a secure barrier between the parent partition (historically, the management/host OS) and child partitions (historically, the virtual machines). Windows 10 (and WS2016) got Credential Guard, allowing LSASS to run in a child partition. If malware tries to scan for passwords on the physical machine, it will find none, because they are behind the barrier of Hyper-V partitioning. Windows 10 Application Guard uses the same technology to protect Edge, constraining any malware run by the browser to the boundaries of the container, isolated from the PC’s OS. This use of partitions continues, more recently enabling Android to be emulated on Windows 10 for application developers.

Semi-Annual Channel

Microsoft recently started releasing Windows Server in a similar pattern to Windows 10. These Semi-Annual Channel (SAC) releases come in the Spring and Autumn/Fall, and are named after the year and approximate month of release, such as 1709, 1803, and so on. Customers with Software Assurance who want frequent upgrades can opt into this release schedule to get the very latest features, but they have to do so with caution – some pieces might not be improved (or might temporarily disappear) until the next release.

Two WS2016 features that have seen rapid improvement in the SAC releases are:

- Hyper-converged infrastructure: You don’t need Nutanix to do HCI. Hyper-V can be deployed on commodity hardware using Storage Spaces Direct (S2D) and leverage amazing network and storage hardware to deliver crazy speeds. My employer started distributing a PCIe flash storage drive that can deliver 1 million 4K read IOPS!

- Containers: Microsoft is all-in on Containers, building out functionality for Windows and Linux, Docker and Kubernetes.

Abandon-Ware

There are those who consider Windows Server to be abandon-ware. I guess it’s not helped by some members of the media who aren’t fully familiar with Windows Server. But the real blame for this goes to Microsoft itself. Windows Server didn’t even get mentioned during the keynotes of the last Microsoft Ignite. I don’t think any subsidiary has done a real launch since WS2012. Local Microsoft staff have sales targets, and Windows Server is a steady cash cow. Growth targets are focused on cloud services such as CRM 365, Office 365, and Azure, so local Microsoft reps only talk about those products. Even the staffing of subsidiaries reflects this – I doubt there’s a person in my local Microsoft office who could tell me anything about the features of WS2016, and last year’s reorg was entirely focused on cloud services.

Is Windows Server dead?

In one word: No.

Windows Server has a huge market that will never go away. Some workloads can never go to the cloud. Microsoft hopes they will migrate to Azure Stack (which is powered by Windows Server Hyper-V), but Azure Stack will remain a niche, albeit a valuable one. And those who do migrate to the cloud are either bringing their Windows Server licenses with them or getting new ones – that’s why Microsoft is adding two new ways to acquire Windows Server via its Cloud Solution Provider partner channel.

Windows Server 2019 is an accumulation of the features of the SAC releases since WS2016, plus some more. Today, you can participate in the preview via Windows Server Insiders. There are lots of new things and improvements, as shared in the recent Windows Server Summit online event – it appears that this was so successful that the event will become a recurring one.

Another significant sign is Windows Admin Center (WAC). The built-in administration tools and the Remote Server Administration Tools (RSAT) are built on MMC.EXE, which has been deprecated for several generations of Windows. WAC was made generally available this year and has been receiving cloud-speed improvements. Using this new shared HTML5 experience, you can manage your Windows servers, with growing support for Hyper-V, clustering, and other features. And yes, it does offer extensions into Azure.

Wrap Up

Hyper-V has a rich history – sometimes colorful. It’s been a great ride … and even if my job’s focus has switched mainly to Microsoft Azure, my knowledge of Hyper-V has made that switch easier, and it’s great to know that I work on the biggest Hyper-V clusters there are.

I’ve gotten to know several of the program managers of Hyper-V, Storage & Clustering, and Networking over the years. Every one of them has struck me as intelligent, receptive to feedback, and ambitious to make their product the very best. They’re proud of their work, and rightly so.

Happy birthday, Hyper-V, and thank you to the team and your neighbors on the Redmond campus, past and present.

The post Happy 10th Birthday Hyper-V! appeared first on Petri.

Car trouble on the motorway is frustrating enough by itself, let alone if you don't have a roadside assistance plan to ease your worries. Waze may soon help get you out of a jam, though. The Google-owned navigation app is teaming up with Allianz Part…

Car trouble on the motorway is frustrating enough by itself, let alone if you don't have a roadside assistance plan to ease your worries. Waze may soon help get you out of a jam, though. The Google-owned navigation app is teaming up with Allianz Part…

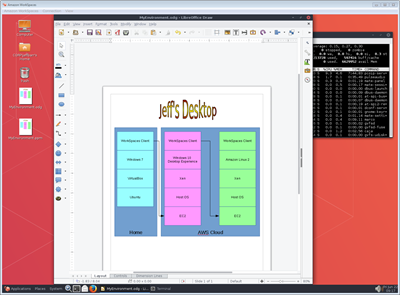

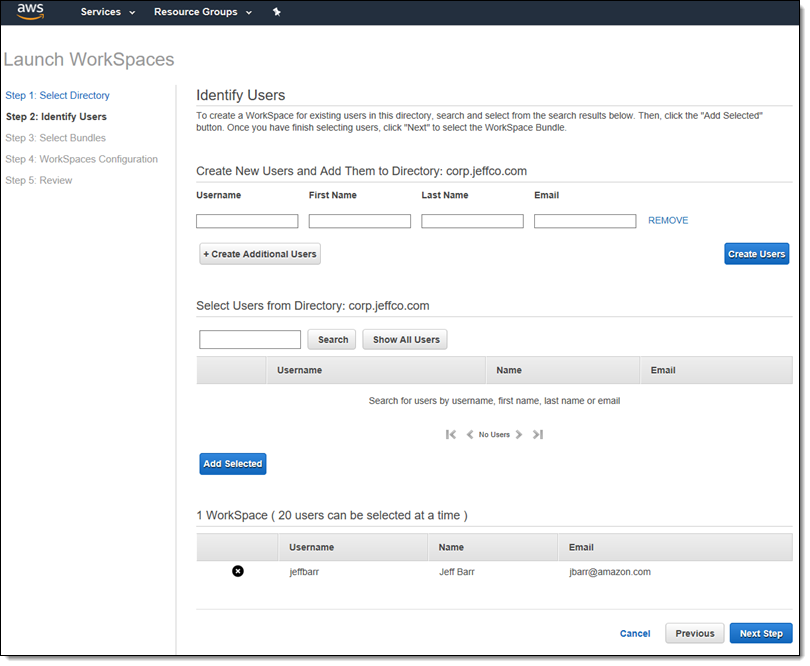

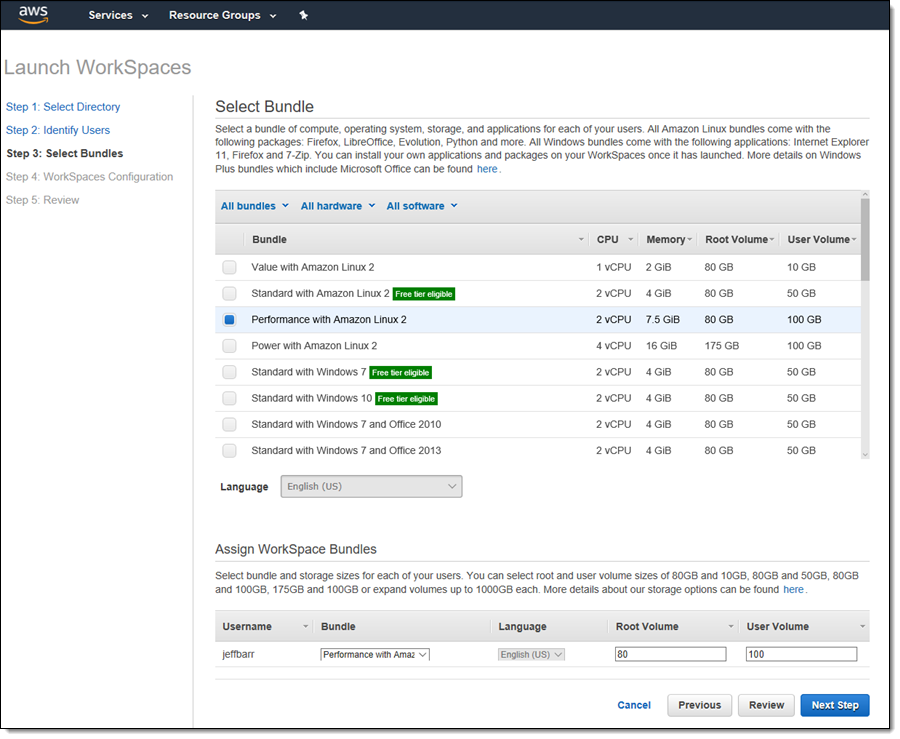

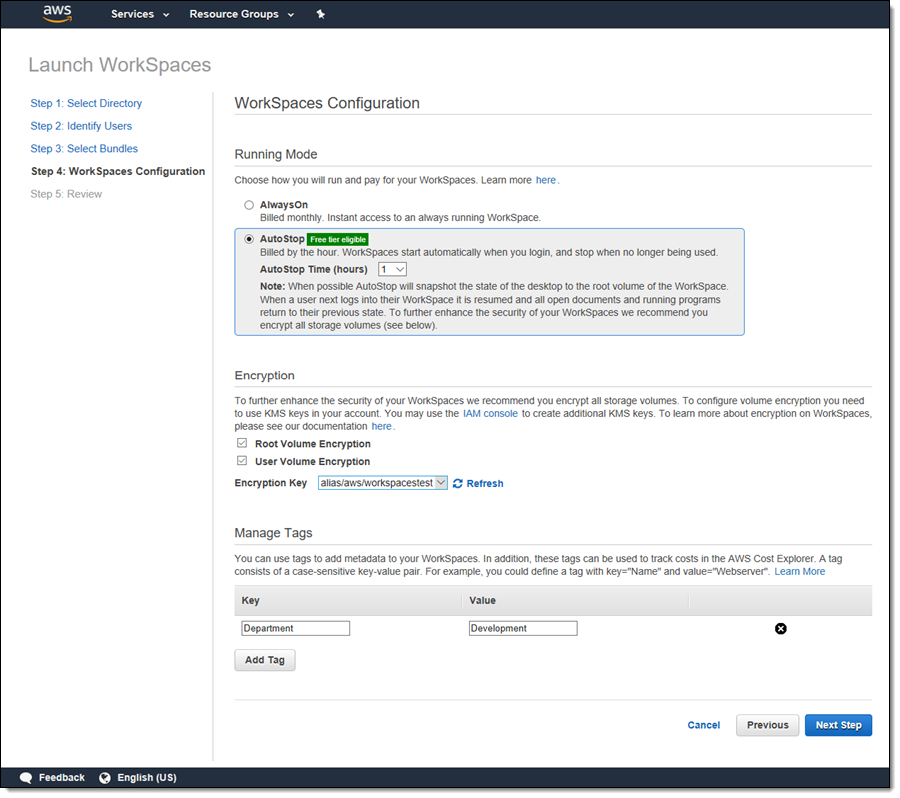

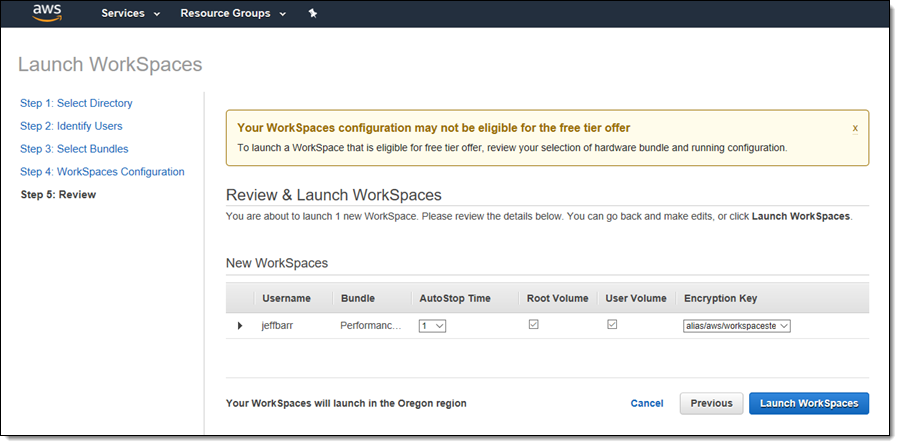

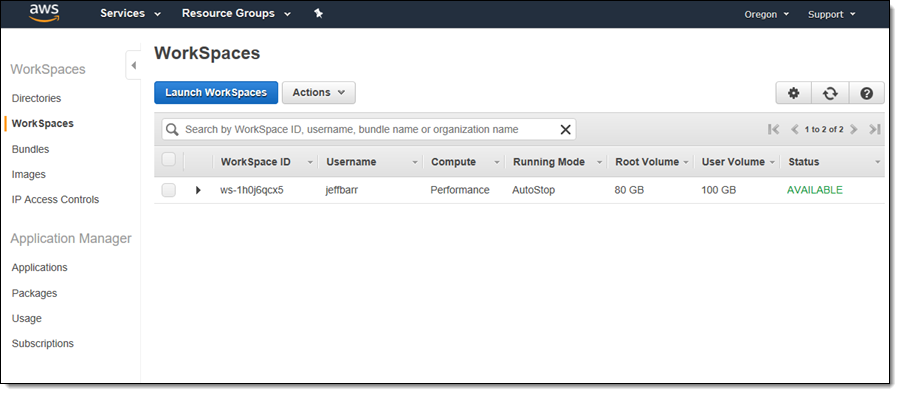

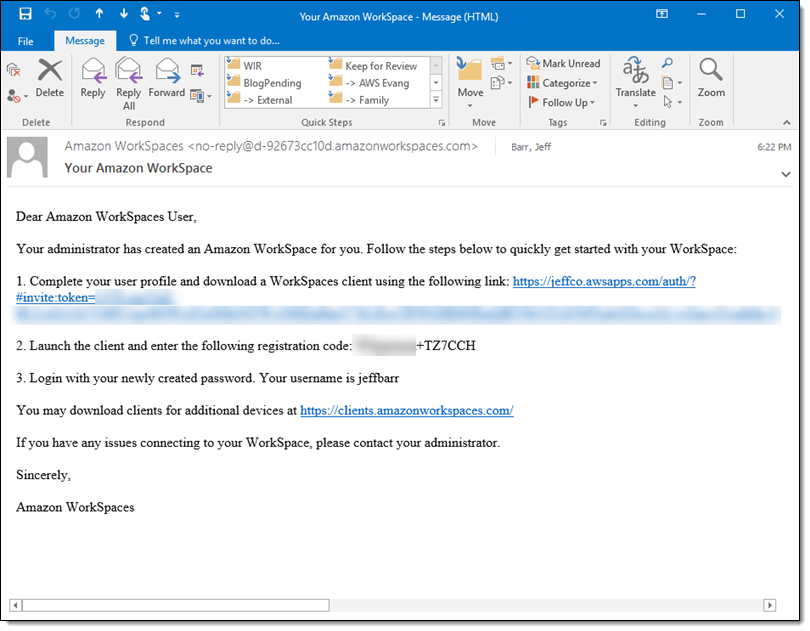

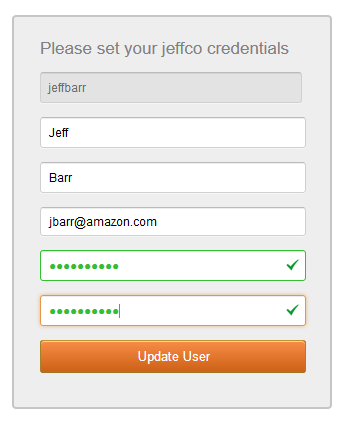

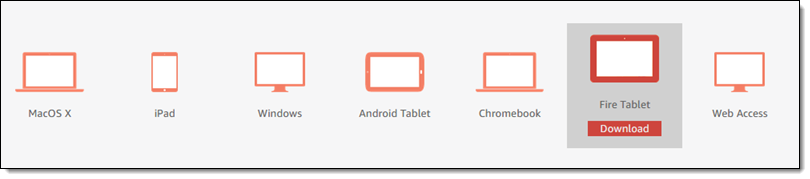

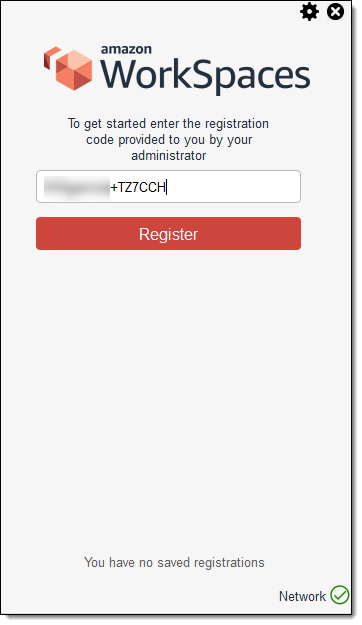

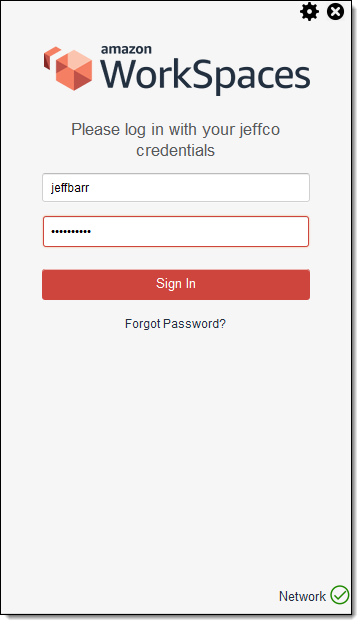

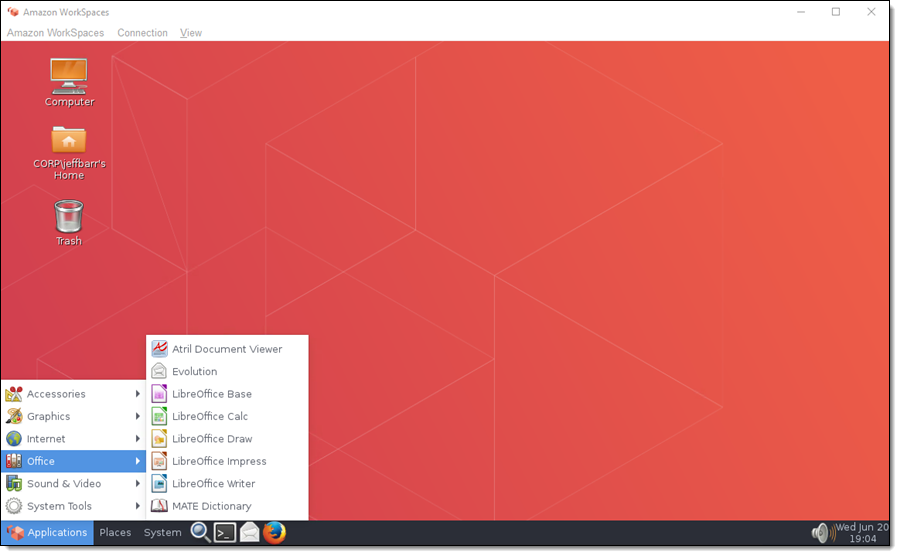

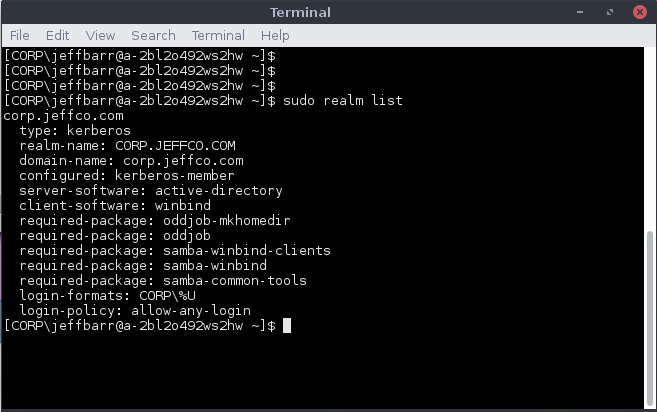

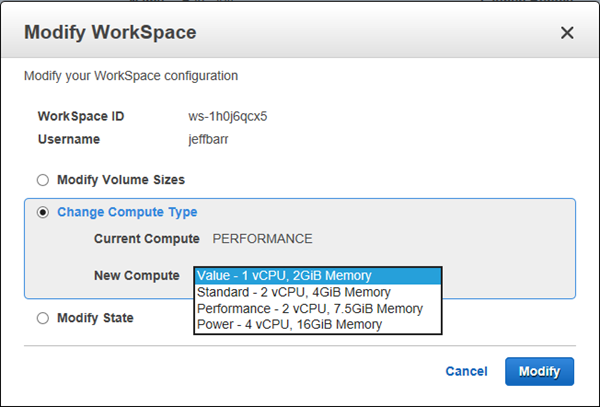

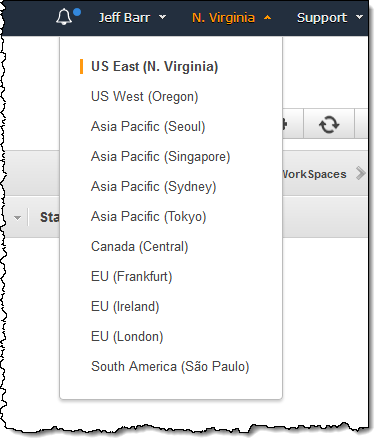

Today we are giving you another desktop option! You can now launch a WorkSpace that runs

Today we are giving you another desktop option! You can now launch a WorkSpace that runs

![Network World [slideshow] - Top 10 Supercomputers 2018 [slide-01]](https://images.idgesg.net/images/article/2018/06/nw_ss_top_ten_supercomputers_2018_slide_01_1200x800-100762093-large.jpg)

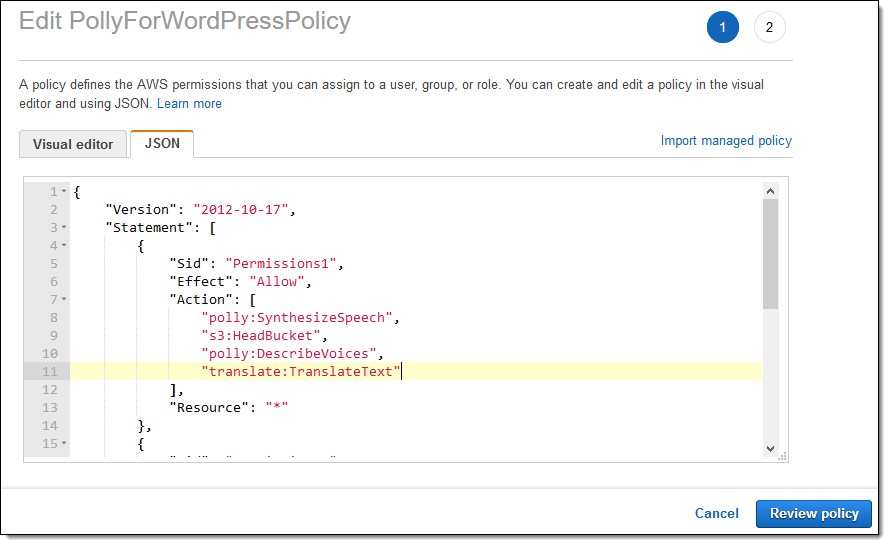

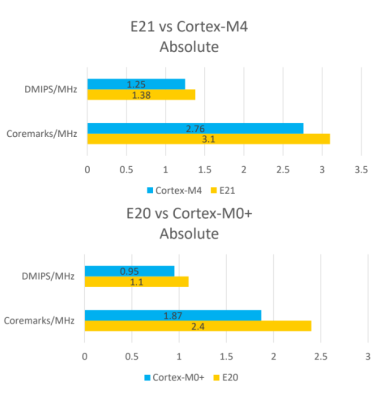

The first chip from SiFive was the HiFive 1, which was based on the SiFive E31 CPU.

The first chip from SiFive was the HiFive 1, which was based on the SiFive E31 CPU.