The content below is taken from the original (Password Rotation for Windows on Amazon EC2 Made Easy with EC2Rescue); to continue reading, please visit the site. Remember to respect the author and copyright.

EC2Rescue for Windows is an easy-to-use tool that you run on an Amazon EC2 Windows Server instance to diagnose and troubleshoot possible problems. A common use of the tool is to reset the local administrator password.

Password rotation is an important security task in any organization. Strong passwords are also necessary to reduce the risk of a password being cracked by brute-force or dictionary attacks. However, both tasks are hard to perform manually, particularly when you manage more than a few servers.

AWS Systems Manager lets you manage your fleet remotely and run commands at scale using Run Command. The Systems Manager Parameter Store feature is integrated with AWS Key Management Service (AWS KMS), so Parameter Store can store string values encrypted, with granular access controlled by AWS Identity and Access Management (IAM) policies.

In this post, I show you how to rotate the local administrator password for your Windows instances using EC2Rescue and store the rotated password in Parameter Store. By using a Systems Manager maintenance window, you can then schedule this activity to run automatically at a frequency of your choosing.

Overview

EC2Rescue is available as a Run Command document called AWSSupport-RunEC2RescueForWindowsTool. The option to reset the local administrator password allows you to specify which KMS key to use to encrypt the randomly generated password.

If your EC2 Windows instances are already enabled with Systems Manager, then the password reset via EC2Rescue Run Command happens online, with no downtime. You can then configure a Systems Manager maintenance window to run AWSSupport-RunEC2RescueForWindowsTool on a schedule (make sure your EC2 instances are running during the maintenance window!).

Workflow

In this post, I provide step-by-step instructions to configure this solution manually. For those of you who want to see the solution in action with minimal effort, I have created a CloudFormation template that configures everything for you, in the us-west-2 (Oregon) region.

Keep reading to learn what is being configured, or jump to the Deploy the solution section for a description of the template parameters.

Define a KMS key

First, you create a KMS key specifically to encrypt Windows passwords. This gives you control over which users and roles can encrypt these passwords, and who can then decrypt them. I recommend that you create a new KMS key dedicated to this task to better manage access.

Create a JSON file for the Key policy

In a text editor of your choosing, copy and paste the following policy. Replace ACCOUNTID with your AWS Account ID. Administrators is the IAM role name that you want to allow to decrypt the rotated passwords, and EC2SSMRole is the IAM role name attached to your EC2 instances. Save the file as RegionalPasswordEncryptionKey-Policy.json.

```json
{
  "Version": "2012-10-17",
  "Id": "key-policy",
  "Statement": [
    {
      "Sid": "Allow access for Key Administrators",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::ACCOUNTID:role/Administrators"
      },
      "Action": [
        "kms:Create*",
        "kms:Describe*",
        "kms:Enable*",
        "kms:List*",
        "kms:Put*",
        "kms:Update*",
        "kms:Revoke*",
        "kms:Disable*",
        "kms:Get*",
        "kms:Delete*",
        "kms:ScheduleKeyDeletion",
        "kms:CancelKeyDeletion"
      ],
      "Resource": "*"
    },
    {
      "Sid": "Allow decryption",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::ACCOUNTID:role/Administrators"
      },
      "Action": "kms:Decrypt",
      "Resource": "*"
    },
    {
      "Sid": "Allow encryption",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::ACCOUNTID:role/EC2SSMRole"
      },
      "Action": "kms:Encrypt",
      "Resource": "*"
    }
  ]
}
```
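If you prefer not to replace ACCOUNTID by hand, a small script can do the substitution and catch JSON typos before you call AWS. The following Python sketch assumes you keep the policy above as a template containing the literal ACCOUNTID placeholder; the function name render_policy is hypothetical and not part of any AWS tool.

```python
import json
import re


def render_policy(template: str, account_id: str) -> str:
    """Substitute the ACCOUNTID placeholder and check that the result parses as JSON.

    Hypothetical helper for this walkthrough; it does not call AWS.
    """
    # AWS account IDs are 12-digit strings (leading zeros are significant).
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError(f"not a 12-digit AWS account ID: {account_id!r}")
    rendered = template.replace("ACCOUNTID", account_id)
    # Fail early on typos (missing commas or braces) instead of at create-key time.
    json.loads(rendered)
    return rendered
```

For example, read your template file, call render_policy(template_text, "123456789012") with your own account ID, and save the returned text as RegionalPasswordEncryptionKey-Policy.json.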

Create the key and its alias (user-friendly name)

Use the following CLI command to create a KMS key that the IAM role Administrators can manage, and that the IAM role EC2SSMRole can use for encryption only:

```bash
aws kms create-key \
    --policy file://RegionalPasswordEncryptionKey-Policy.json \
    --description "Key used to encrypt local Administrator passwords stored in SSM Parameter Store."
```

Output:

```
{
    "KeyMetadata": {
        "Origin": "AWS_KMS",
        "KeyId": "88eea0b7-0508-4318-a0bc-feee4a5250a3",
        "Description": "Key used to encrypt local Administrator passwords stored in SSM Parameter Store.",
        (...)
    }
}
```
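The KeyId in this output is the value that the create-alias command and the later task registration need. As a sketch, the hypothetical Python helper below extracts it from the JSON that create-key prints; it expects the complete output, since the (...) above marks elided fields and is not valid JSON. The AWS CLI can also print just this value with --query KeyMetadata.KeyId --output text.

```python
import json


def key_id_from_create_key_output(output: str) -> str:
    """Return KeyMetadata.KeyId from the full JSON printed by `aws kms create-key`.

    Hypothetical helper; a KeyError means the text was not create-key output.
    """
    document = json.loads(output)
    return document["KeyMetadata"]["KeyId"]
```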

Use the following CLI command to create an alias for the KMS key:

```bash
aws kms create-alias \
    --alias-name alias/WindowsPasswordRotation-EncryptionKey \
    --target-key-id 88eea0b7-0508-4318-a0bc-feee4a5250a3
```

Define a maintenance window

Use a maintenance window to schedule the password reset. Here is the CLI command to schedule this activity every Sunday at 5:00 AM UTC:

```bash
aws ssm create-maintenance-window \
    --name "windows-password-rotation" \
    --schedule "cron(0 5 ? * SUN *)" \
    --duration 2 \
    --cutoff 1 \
    --no-allow-unassociated-targets
```

Output:

```
{ "WindowId": "mw-0f2c58266a8c49246" }
```
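The schedule string above uses a six-field cron form: minutes, hours, day-of-month, month, day-of-week, year, where one of the two day fields is ?. The hypothetical Python sketch below splits such an expression into named fields, which makes the Sunday 05:00 UTC schedule easier to read and review. It assumes the six-field form used in this post, only checks the field count and the ? rule, does not validate value ranges, and does not handle rate() expressions.

```python
import re

# Field order of the six-field cron form used in this post.
FIELD_NAMES = ("minutes", "hours", "day_of_month", "month", "day_of_week", "year")


def parse_cron(expression: str) -> dict:
    """Split cron(...) into named fields; checks field count and the '?' rule only."""
    match = re.fullmatch(r"cron\((.*)\)", expression.strip())
    if match is None:
        raise ValueError(f"not a cron(...) expression: {expression!r}")
    fields = match.group(1).split()
    if len(fields) != len(FIELD_NAMES):
        raise ValueError(f"expected {len(FIELD_NAMES)} fields, got {len(fields)}")
    parsed = dict(zip(FIELD_NAMES, fields))
    # One of day-of-month / day-of-week must be '?', but not both.
    if (parsed["day_of_month"] == "?") == (parsed["day_of_week"] == "?"):
        raise ValueError("exactly one of day-of-month and day-of-week must be '?'")
    return parsed
```

For example, parse_cron("cron(0 5 ? * SUN *)") returns {'minutes': '0', 'hours': '5', 'day_of_month': '?', 'month': '*', 'day_of_week': 'SUN', 'year': '*'}.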

Define a target

Use tags to identify the instances whose password you want to reset. For example, you can reset all instances that have the tag key Environment with the value Production.

```bash
aws ssm register-target-with-maintenance-window \
    --window-id "mw-0f2c58266a8c49246" \
    --targets "Key=tag:Environment,Values=Production" \
    --owner-information "Production Servers" \
    --resource-type "INSTANCE"
```

Output:

```
{
    "WindowTargetId": "a5fc445b-a7f1-4591-b528-98440832da41"
}
```
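If you register targets for several environments, building the --targets value in code avoids typos. This hypothetical Python helper assembles the Key=tag:...,Values=... shorthand used above. It assumes, following the AWS CLI shorthand syntax, that multiple values are written comma-separated, and it therefore rejects tag keys or values that themselves contain a comma.

```python
from typing import Sequence


def tag_target(key: str, values: Sequence[str]) -> str:
    """Build a --targets shorthand string that selects instances by tag.

    Hypothetical helper; assumes the CLI shorthand lists values comma-separated.
    """
    if not values:
        raise ValueError("at least one tag value is required")
    for item in (key, *values):
        # A comma inside a key or value would be misread as a list separator.
        if "," in item:
            raise ValueError(f"commas are not supported in this sketch: {item!r}")
    return f"Key=tag:{key},Values={','.join(values)}"
```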

Define a maintenance window IAM role

These steps are only necessary if you haven't configured a maintenance window before. Skip this section if you already have an IAM role with the AmazonSSMMaintenanceWindowRole AWS managed policy attached.

Create a JSON file for the role trust policy

In a text editor of your choosing, copy and paste the following trust policy. Save the file as AutomationMWRole-Trust-Policy.json.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "ssm.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
```
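Before creating the role, you can sanity-check that the trust policy lets Systems Manager assume it. The function below is a deliberately simplified, hypothetical check: it only looks for an Allow statement whose Service principal and Action match, and it ignores Deny statements, Condition blocks, and wildcards, so it is not a substitute for IAM's own policy evaluation.

```python
def trusts_service(trust_policy: dict, service: str) -> bool:
    """Simplified check: does some Allow statement let `service` call sts:AssumeRole?

    Ignores Deny statements, Condition blocks and wildcards; not IAM policy evaluation.
    """
    statements = trust_policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be written as an object
        statements = [statements]
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        principal = statement.get("Principal", {})
        if not isinstance(principal, dict):  # e.g. "*"; out of scope for this sketch
            continue
        services = principal.get("Service", [])
        actions = statement.get("Action", [])
        # Both fields may be a single string or a list of strings.
        services = [services] if isinstance(services, str) else services
        actions = [actions] if isinstance(actions, str) else actions
        if service in services and "sts:AssumeRole" in actions:
            return True
    return False
```

For example, load AutomationMWRole-Trust-Policy.json with json.load and pass the result together with "ssm.amazonaws.com".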

Create the IAM role and attach the AWS managed policy for SSM maintenance windows

Use the following two CLI commands to create the IAM role AutomationMWRole and attach the AmazonSSMMaintenanceWindowRole AWS managed policy.

```bash
aws iam create-role \
    --role-name AutomationMWRole \
    --assume-role-policy-document file://AutomationMWRole-Trust-Policy.json

aws iam attach-role-policy \
    --policy-arn arn:aws:iam::aws:policy/service-role/AmazonSSMMaintenanceWindowRole \
    --role-name AutomationMWRole
```
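Some commands in this post take ARNs rather than names, such as --service-role-arn for AutomationMWRole and --policy-arn for the customer managed policy. The sketch below shows how those ARNs are composed, assuming the standard aws partition and the default IAM path / (what create-role and create-policy use when you do not pass --path); the helper name is hypothetical. The create-role output also returns the role's Arn, which is the authoritative value.

```python
import re


def iam_arn(account_id: str, resource_type: str, name: str, partition: str = "aws") -> str:
    """Compose the ARN of an IAM role or customer managed policy that uses the default path '/'.

    Hypothetical helper; roles or policies created with --path include that path in the ARN.
    """
    if resource_type not in ("role", "policy"):
        raise ValueError("resource_type must be 'role' or 'policy'")
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError(f"not a 12-digit AWS account ID: {account_id!r}")
    return f"arn:{partition}:iam::{account_id}:{resource_type}/{name}"
```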

Define a task

EC2Rescue is available as a Run Command document called AWSSupport-RunEC2RescueForWindowsTool. This document allows you to run EC2Rescue remotely on your instances. One of the available options is the ability to reset the local administrator password. As the final configuration step, create a Run Command task that runs AWSSupport-RunEC2RescueForWindowsTool with the following parameters:

- Command = ResetAccess

- Parameters = KMS Key ID

Rotated passwords are saved in Parameter Store, encrypted with the KMS key that you specify. Here is the CLI command that uses the KMS key, target, maintenance window, and role that you created earlier:

```bash
aws ssm register-task-with-maintenance-window \
    --targets "Key=WindowTargetIds,Values=a5fc445b-a7f1-4591-b528-98440832da41" \
    --task-arn "AWSSupport-RunEC2RescueForWindowsTool" \
    --service-role-arn "arn:aws:iam::ACCOUNTID:role/AutomationMWRole" \
    --window-id "mw-0f2c58266a8c49246" \
    --task-type "RUN_COMMAND" \
    --task-parameters "{\"Command\":{ \"Values\": [\"ResetAccess\"] }, \"Parameters\":{ \"Values\": [\"88eea0b7-0508-4318-a0bc-feee4a5250a3\"] } }" \
    --max-concurrency 5 \
    --max-errors 1 \
    --priority 1
```

Output:

```
{
    "WindowTaskId": "a3571731-c64c-4e43-be8d-7b543942a179"
}
```
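Hand-escaping the --task-parameters string is error-prone. As a minimal sketch, the hypothetical Python helper below builds the same JSON with json.dumps from the ResetAccess command and your KMS key ID. You can write the result to a file and pass it with the AWS CLI's file:// prefix (for example, a hypothetical task-parameters.json) instead of an inline string.

```python
import json


def reset_access_task_parameters(kms_key_id: str) -> str:
    """Return the --task-parameters JSON for a ResetAccess run of AWSSupport-RunEC2RescueForWindowsTool.

    Hypothetical helper; mirrors the hand-escaped string in the CLI example.
    """
    parameters = {
        "Command": {"Values": ["ResetAccess"]},
        # KMS key used to encrypt the rotated password in Parameter Store.
        "Parameters": {"Values": [kms_key_id]},
    }
    return json.dumps(parameters)
```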

Your Windows instances need to be enabled for Systems Manager, and to have additional IAM permissions to be able to write to Parameter Store. You can accomplish this by adding the following policy to the existing IAM roles associated with your Windows instances:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ssm:PutParameter"
      ],
      "Resource": [
        "arn:aws:ssm:*:ACCOUNTID:parameter/EC2Rescue/Passwords/*"
      ]
    }
  ]
}
```

Save the policy as EC2Rescue-ResetAccess-Policy.json. Here are the CLI commands to create a new IAM customer managed policy and attach it to an existing Systems Manager IAM role for EC2 (in this example, EC2SSMRole).

```bash
aws iam create-policy \
    --policy-name EC2Rescue-ResetAccess-Policy \
    --policy-document file://EC2Rescue-ResetAccess-Policy.json

aws iam attach-role-policy \
    --policy-arn arn:aws:iam::ACCOUNTID:policy/EC2Rescue-ResetAccess-Policy \
    --role-name EC2SSMRole
```
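To see which parameters the Resource pattern in EC2Rescue-ResetAccess-Policy covers, you can approximate IAM's * wildcard with Python's fnmatch. This is only a sketch: fnmatch also treats [ and ] as special characters, which IAM does not, and the instance parameter name /EC2Rescue/Passwords/i-0123456789abcdef0 used below is an illustrative assumption based on the prefix in the policy, not a documented EC2Rescue naming scheme. The helper names are hypothetical.

```python
from fnmatch import fnmatchcase

# Resource pattern from EC2Rescue-ResetAccess-Policy.json (ACCOUNTID still a placeholder).
RESOURCE_PATTERN = "arn:aws:ssm:*:ACCOUNTID:parameter/EC2Rescue/Passwords/*"


def parameter_arn(region: str, account_id: str, name: str) -> str:
    """ARN of a Parameter Store parameter; a leading '/' in the name is not repeated."""
    return f"arn:aws:ssm:{region}:{account_id}:parameter/{name.lstrip('/')}"


def is_allowed(pattern: str, arn: str) -> bool:
    """Approximate IAM wildcard matching: '*' spans any characters, including '/' and ':'."""
    return fnmatchcase(arn, pattern)
```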

Deploy the solution

To simplify the deployment of this solution, I have created an AWS CloudFormation template that configures all the parts described earlier in this post, and deploys this solution in us-west-2 (Oregon).

These are the parameters that the template requires:

- Target
  - Tag key to filter your instances
  - Tag value to filter your instances
  - Cron expression for the maintenance window
- Permissions
  - Existing IAM role name you are using for your Systems Manager-enabled EC2 instances, which will be authorized to encrypt the passwords.
- Security
  - Current IAM role name that you are using to deploy this CloudFormation template. The role is authorized to decrypt the passwords and manage the KMS key.

The following figure shows the CloudFormation console, with the default template parameters and the existing EC2 IAM role named EC2SSMRole, as well as the administrative IAM role Administrators, which you use to create the CloudFormation stack.

The deployment takes a few minutes. Here is a sample maintenance window, which was last executed on October 29, 2017.

Parameter Store has an encrypted parameter for each production Windows instance. You can decrypt each value from the console if you have kms:Decrypt permission on the key used to encrypt the password.

Conclusion

In this post, I showed you how to enhance the security of an EC2 environment by automating secure password rotation. Passwords are rotated on a schedule, and these actions are logged in AWS CloudTrail. Passwords are stored in Parameter Store encrypted with a KMS key, so you have granular control, through IAM policies, over who can access them.

As long as your EC2 Windows instances are running during the maintenance window and are configured to work with Systems Manager, the local administrator password is rotated automatically. You can create additional maintenance windows for other environments (such as development, staging, or QA), or add new targets to the existing maintenance window.

About the Author

Alessandro Martini is a Senior Cloud Support Engineer in the AWS Support organization. He likes working with customers, understanding and solving problems, and writing blog posts that outline solutions on multiple AWS products. He also loves pizza, especially when there is no pineapple on it.

Alessandro Martini is a Senior Cloud Support Engineer in the AWS Support organization. He likes working with customers, understanding and solving problems, and writing blog posts that outline solutions on multiple AWS products. He also loves pizza, especially when there is no pineapple on it.

The Olympics aren't just an event for the most talented athletes to strut their stuff on the world's stage. No, The Games are where robots can find honest work and leisure, too. Some 85 robots (spread across 11 different models, humanoid and otherwis…

The Olympics aren't just an event for the most talented athletes to strut their stuff on the world's stage. No, The Games are where robots can find honest work and leisure, too. Some 85 robots (spread across 11 different models, humanoid and otherwis… The Internet of Things hasn't ever been super secure. Hacked smart devices have been blamed for web blackouts, broken internet, spam and phishing attempts and, of course, the coming smart-thing apocalypse. One of the reasons that we haven't seen the…

The Internet of Things hasn't ever been super secure. Hacked smart devices have been blamed for web blackouts, broken internet, spam and phishing attempts and, of course, the coming smart-thing apocalypse. One of the reasons that we haven't seen the…

Embark’s autonomous trucking solution just demonstrated what it could be capable of in a big way: It make a coast-to-coast trip from L.A. to Jacksonville, Florida, driving 2,400 miles

Embark’s autonomous trucking solution just demonstrated what it could be capable of in a big way: It make a coast-to-coast trip from L.A. to Jacksonville, Florida, driving 2,400 miles

Microsoft is targeting its cloud storage rivals including Dropbox, Box, and Google today by offering to essentially buy out customers’ existing contracts if they make the switch to OneDrive for Business. The company says that customers currently paying for one of these competitive solutions, can instead opt to use OneDrive for free for the remainder of their contract’s term. The…

Microsoft is targeting its cloud storage rivals including Dropbox, Box, and Google today by offering to essentially buy out customers’ existing contracts if they make the switch to OneDrive for Business. The company says that customers currently paying for one of these competitive solutions, can instead opt to use OneDrive for free for the remainder of their contract’s term. The…

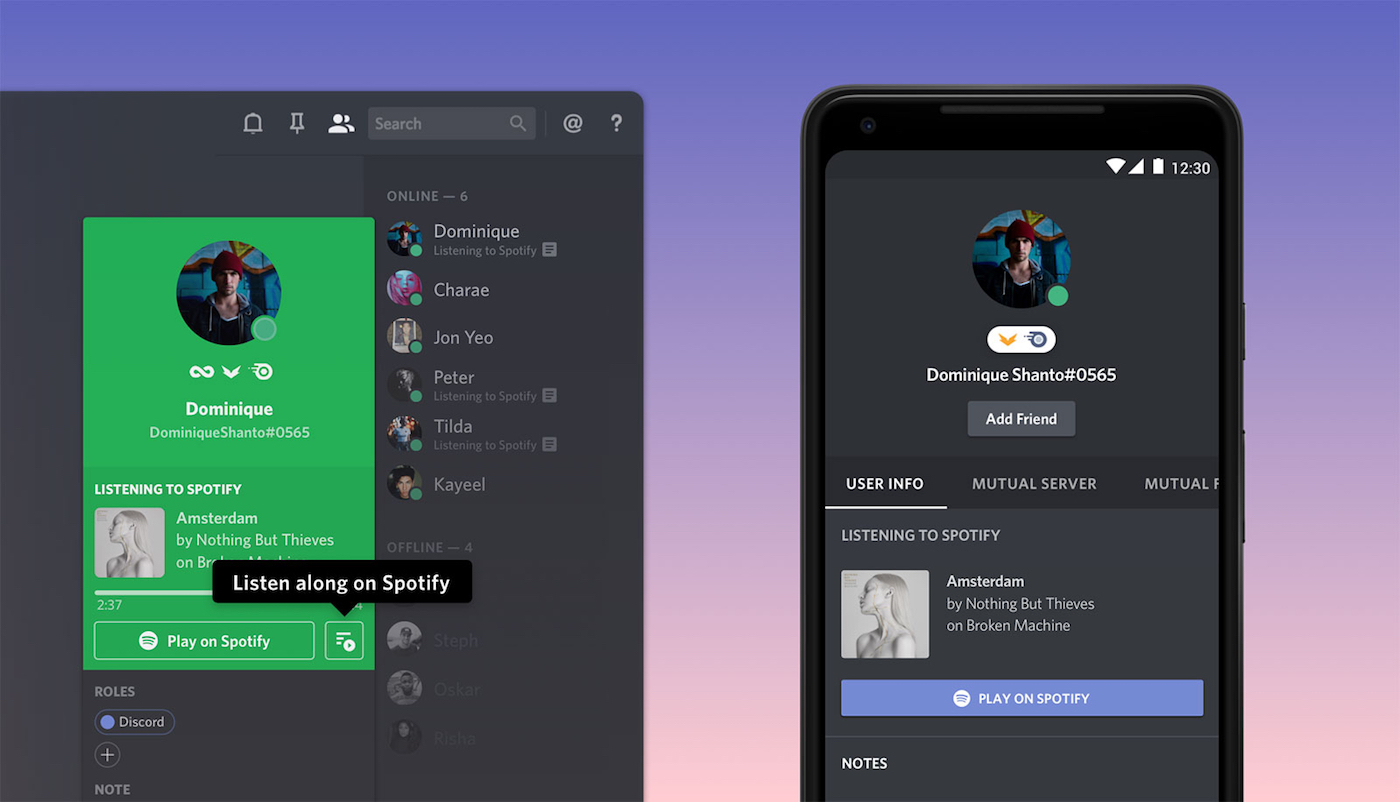

Spotify and gaming chat app Discord are joining forces so your entire channel can bump to the same music during a raid. Starting today, you can link your Spotify Premium account to your Discord account and keep the beats rocking for your entire commu…

Spotify and gaming chat app Discord are joining forces so your entire channel can bump to the same music during a raid. Starting today, you can link your Spotify Premium account to your Discord account and keep the beats rocking for your entire commu…

Alessandro Martini is a Senior Cloud Support Engineer in the AWS Support organization. He likes working with customers, understanding and solving problems, and writing blog posts that outline solutions on multiple AWS products. He also loves pizza, especially when there is no pineapple on it.

Alessandro Martini is a Senior Cloud Support Engineer in the AWS Support organization. He likes working with customers, understanding and solving problems, and writing blog posts that outline solutions on multiple AWS products. He also loves pizza, especially when there is no pineapple on it.

'Superfast' broadband with speeds of at least 24 Mbps is now available across 95 percent of the UK, according to new stats thinkbroadband.com published today. The milestone was actually achieved last month, meaning the government's Broadband Delivery…

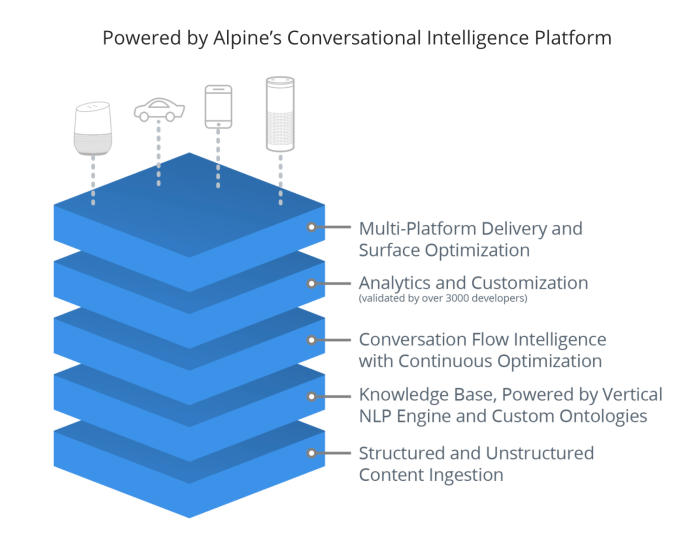

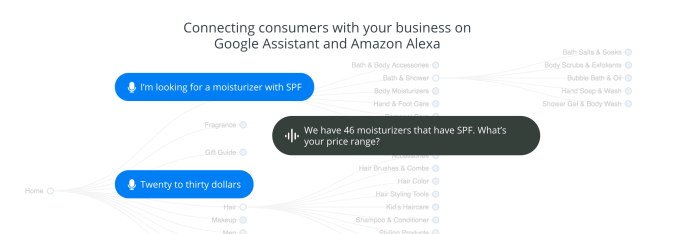

'Superfast' broadband with speeds of at least 24 Mbps is now available across 95 percent of the UK, according to new stats thinkbroadband.com published today. The milestone was actually achieved last month, meaning the government's Broadband Delivery… Voicelabs, a company that has been experimenting in the voice computing market for some time with initiatives in advertising and analytics, is now pivoting its business again – this time, to voice-enabled commerce. The company is today launching its latest product out of stealth: Alpine.AI, a solution that builds voice shopping apps for retailers by importing their catalog, then layering…

Voicelabs, a company that has been experimenting in the voice computing market for some time with initiatives in advertising and analytics, is now pivoting its business again – this time, to voice-enabled commerce. The company is today launching its latest product out of stealth: Alpine.AI, a solution that builds voice shopping apps for retailers by importing their catalog, then layering…

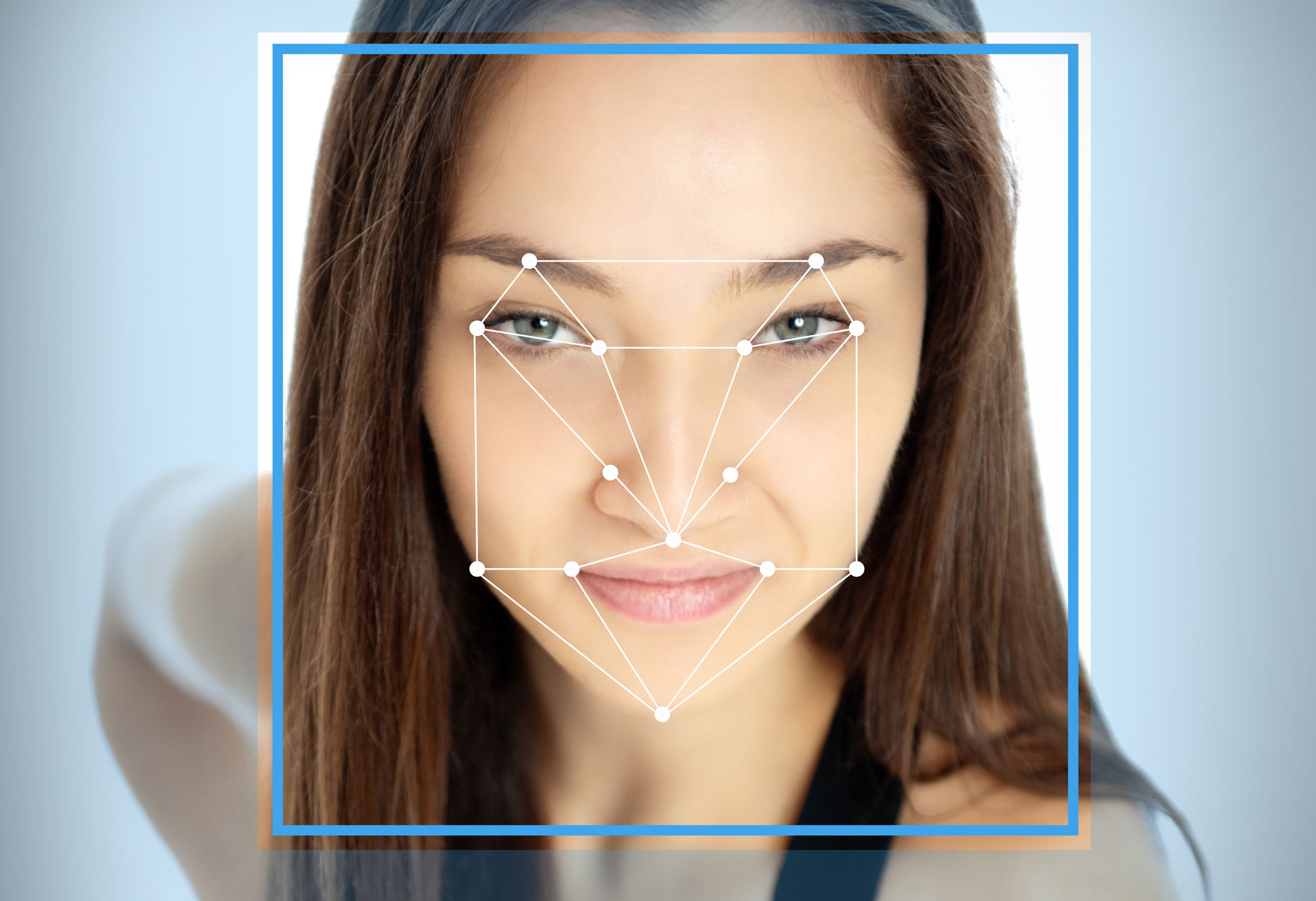

Trueface.ai, the stealthy facial recognition startup that’s backed by 500 Startups and a slew of angel investors, is integrating with IFTTT

Trueface.ai, the stealthy facial recognition startup that’s backed by 500 Startups and a slew of angel investors, is integrating with IFTTT