The content below is taken from the original (New – Amazon EC2 Elastic GPUs for Windows), to continue reading please visit the site. Remember to respect the Author & Copyright.

Today we’re excited to announce the general availability of Amazon EC2 Elastic GPUs for Windows. An Elastic GPU is a GPU resource that you can attach to your Amazon Elastic Compute Cloud (EC2) instance to accelerate the graphics performance of your applications. Elastic GPUs come in medium (1GB), large (2GB), xlarge (4GB), and 2xlarge (8GB) sizes and are lower cost alternatives to using GPU instance types like G3 or G2 (for OpenGL 3.3 applications). You can use Elastic GPUs with many instance types allowing you the flexibility to choose the right compute, memory, and storage balance for your application. Today you can provision elastic GPUs in us-east-1 and us-east-2.

Elastic GPUs start at just $0.05 per hour for an eg1.medium. A nickel an hour. If we attach that Elastic GPU to a t2.medium ($0.065/hour) we pay a total of less than 12 cents per hour for an instance with a GPU. Previously, the cheapest graphical workstation (G2/3 class) cost 76 cents per hour. That’s over an 80% reduction in the price for running certain graphical workloads.

When should I use Elastic GPUs?

Elastic GPUs are best suited for applications that require a small or intermittent amount of additional GPU power for graphics acceleration and support OpenGL. Elastic GPUs support up to and including the OpenGL 3.3 API standards with expanded API support coming soon.

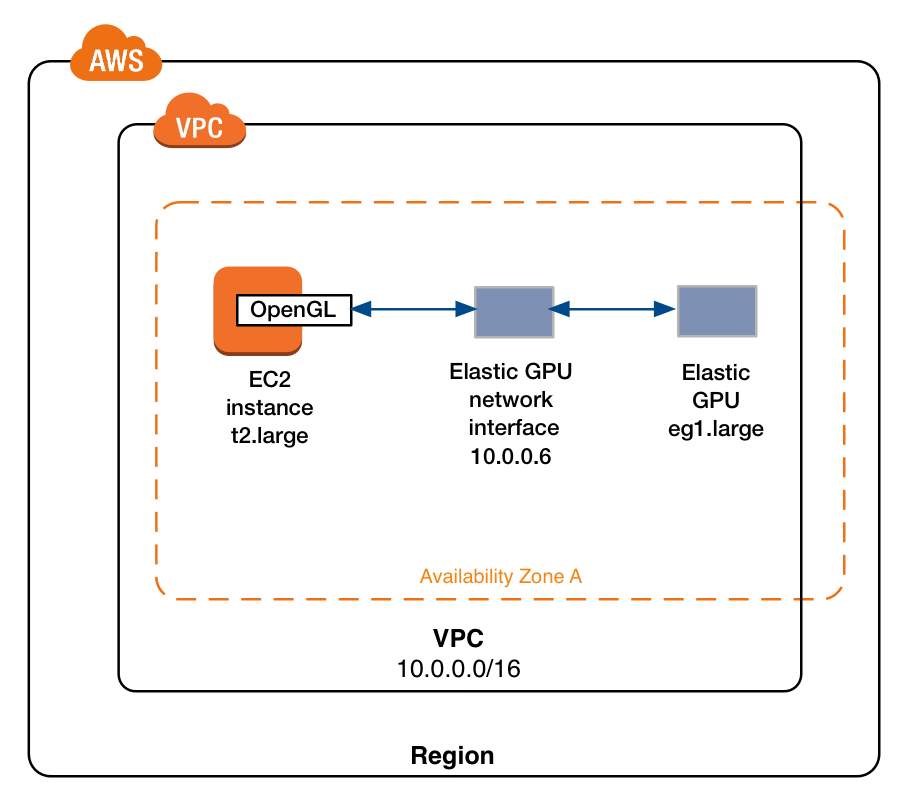

Elastic GPUs are not part of the hardware of your instance. Instead they’re attached through an elastic GPU network interface in your subnet which is created when you launch an instance with an Elastic GPU. The image below shows how Elastic GPUs are attached.

Since Elastic GPUs are network attached it’s important to provision an instance with adequate network bandwidth to support your application. It’s also important to make sure your instance security group allows traffic on port 2007.

Any application that can use the OpenGL APIs can take advantage of Elastic GPUs so Blender, Google Earth, SIEMENS SolidEdge, and more could all run with Elastic GPUs. Even Kerbal Space Program!

Ok, now that we know when to use Elastic GPUs and how they work, let’s launch an instance and use one.

Using Elastic GPUs

First, we’ll navigate to the EC2 console and click Launch Instance. Next we’ll select a Windows AMI like: “Microsoft Windows Server 2016 Base”. Then we’ll select an instance type. Then we’ll make sure we select the “Elastic GPU” section and allocate an eg1.medium (1GB) Elastic GPU.

We’ll also include some userdata in the advanced details section. We’ll write a quick PowerShell script to download and install our Elastic GPU software.

<powershell>

Start-Transcript -Path "C:\egpu_install.log" -Append

(new-object net.webclient).DownloadFile('http://bit.ly/2wRAjuj', 'C:\egpu.msi')

Start-Process "msiexec.exe" -Wait -ArgumentList "/i C:\egpu.msi /qn /L*v C:\egpu_msi_install.log"

[Environment]::SetEnvironmentVariable("Path", $env:Path + ";C:\Program Files\Amazon\EC2ElasticGPUs\manager\", [EnvironmentVariableTarget]::Machine)

Restart-Computer -Force

</powershell>

This software sends all OpenGL API calls to the attached Elastic GPU.

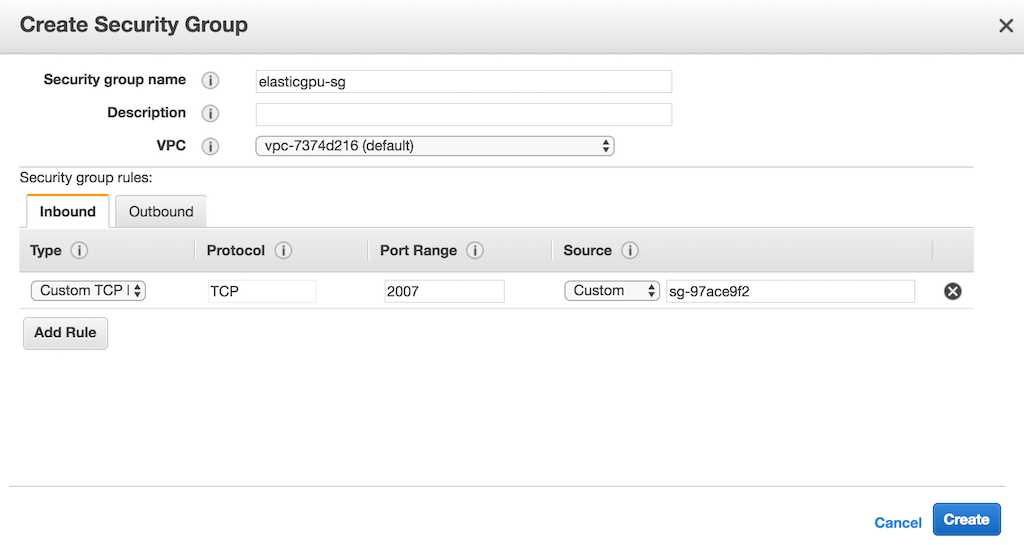

Next, we’ll double check to make sure my security group has TCP port 2007 exposed to my VPC so my Elastic GPU can connect to my instance. Finally, we’ll click launch and wait for my instance and Elastic GPU to provision. The best way to do this is to create a separate SG that you can attach to the instance.

You can see an animation of the launch procedure below.

Alternatively we could have launched on the AWS CLI with a quick call like this:

$aws ec2 run-instances --elastic-gpu-specification Type=eg1.2xlarge \

--image-id ami-1a2b3c4d \

--subnet subnet-11223344 \

--instance-type r4.large \

--security-groups "default" "elasticgpu-sg"

then we could have followed the Elastic GPU software installation instructions here.

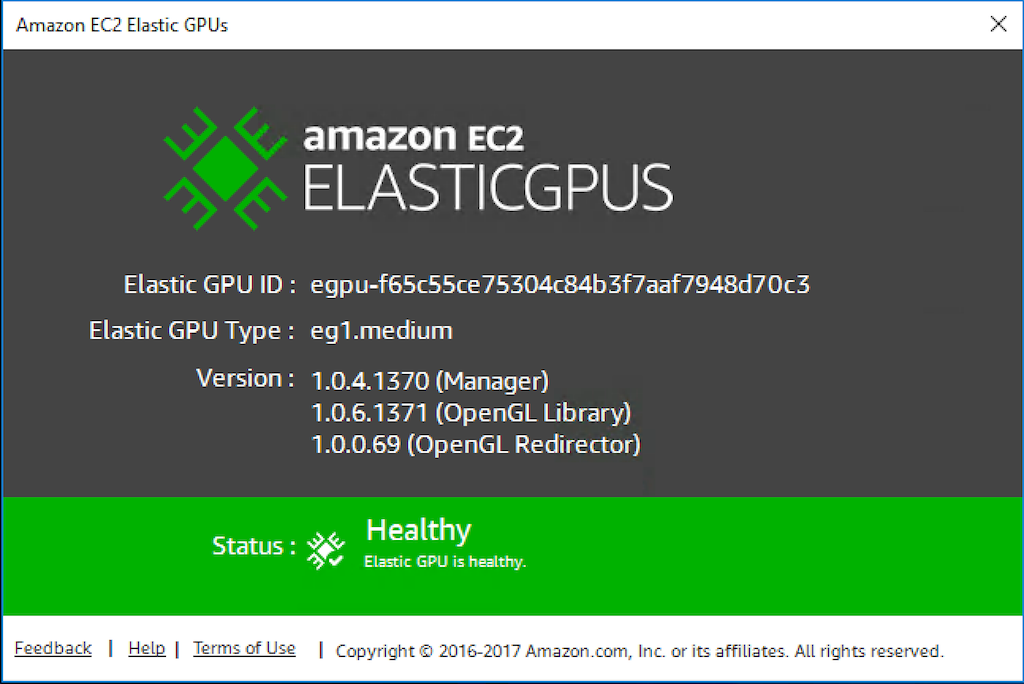

We can now see our Elastic GPU is humming along and attached by checking out the Elastic GPU status in the taskbar.

We welcome any feedback on the service and you can click on the Feedback link in the bottom left corner of the GPU Status Box to let us know about your experience with Elastic GPUs.

Elastic GPU Demonstration

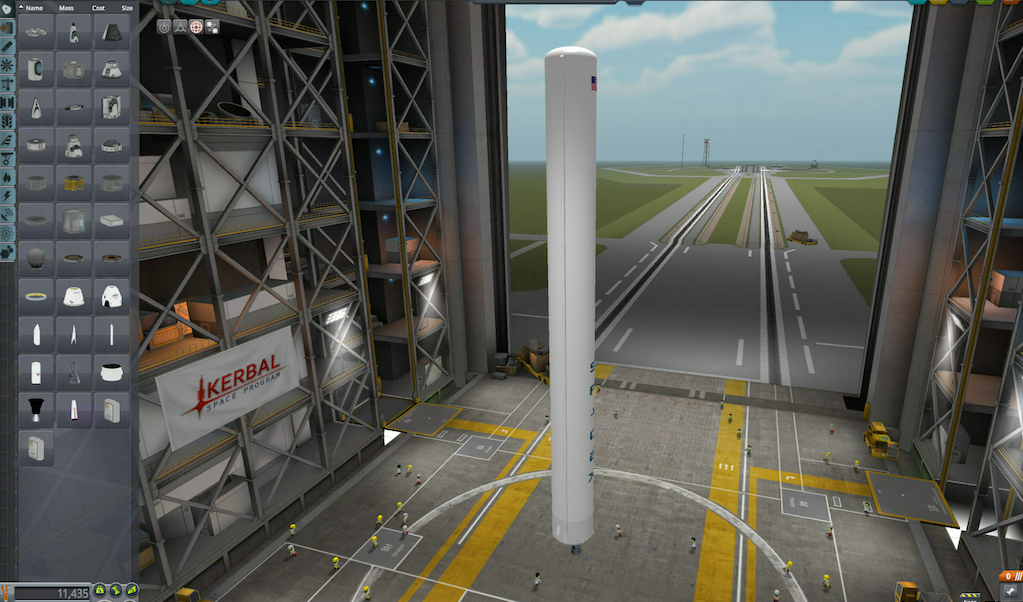

Ok, so we have our instance provisioned and our Elastic GPU attached. My teammates here at AWS wanted me to talk about the amazingly wonderful 3D applications you can run, but when I learned about Elastic GPUs the first thing that came to mind was Kerbal Space Program (KSP), so I’m going to run a quick test with that. After all, if you can’t launch Jebediah Kerman into space then what was the point of all of that software? I’ve downloaded KSP and added the launch parameter of -force-opengl to make sure we’re using OpenGL to do our rendering. Below you can see my poor attempt at building a spaceship – I used to build better ones. It looks pretty smooth considering we’re going over a network with a lossy remote desktop protocol.

I’d show a picture of the rocket launch but I didn’t even make it off the ground before I experienced a rapid unscheduled disassembly of the rocket. Back to the drawing board for me.

In the mean time I can check my Amazon CloudWatch metrics and see how much GPU memory I used during my brief game.

Partners, Pricing, and Documentation

To continue to build out great experiences for our customers, our 3D software partners like ANSYS and Siemens are looking to take advantage of the OpenGL APIs on Elastic GPUs, and are currently certifying Elastic GPUs for their software. You can learn more about our partnerships here.

You can find information on Elastic GPU pricing here. You can find additional documentation here.

Now, if you’ll excuse me I have some virtual rockets to build.

– Randall