Cloud storage now more affordable: Announcing general availability of Azure Archive Storage

Today we’re excited to announce the general availability of Archive Blob Storage, starting at an industry-leading price of $0.002 per gigabyte per month! Last year, we launched Cool Blob Storage to help customers reduce storage costs by tiering their infrequently accessed data to the Cool tier. Organizations can now reduce their storage costs even further by storing their rarely accessed data in the Archive tier. We’re also excited to announce the general availability of Blob-Level Tiering, which enables customers to optimize storage costs by managing the lifecycle of their data across these tiers at the object level.

From startups to large organizations, our customers in every industry have experienced exponential growth of their data. A significant amount of this data is rarely accessed but must be stored for a long period of time to meet either business continuity or compliance requirements; think employee data, medical records, customer information, financial records, backups, etc. Additionally, recent and coming advances in artificial intelligence and data analytics are unlocking value from data that might have previously been discarded. Customers in many industries want to keep more of these data sets for a longer period but need a scalable and cost-effective solution to do so.

“We have been working with the Azure team to preview Archive Blob Storage for our cloud archiving service for several months now. I love how easy it is to change the storage tier on an existing object via a single API. This allows us to build Information Lifecycle Management into our application logic directly and use Archive Blob Storage to significantly decrease our total Azure Storage costs.”

-Tom Inglis, Director of Enabling Solutions at BP

Azure Archive Blob Storage

Azure Archive Blob Storage is designed to give organizations a low-cost way to deliver durable, highly available, secure cloud storage for rarely accessed data with flexible latency requirements (on the order of hours). See Azure Blob Storage: Hot, cool, and archive tiers to learn more.

Archive Storage characteristics include:

- Cost-effectiveness: The Archive access tier is our lowest-priced storage offering, intended for rarely accessed data that is kept long term. Preview pricing will continue through January 2018. For new pricing effective February 1, 2018, see Archive Storage General Availability Pricing.

- Seamless Integration: Customers use the same familiar operations on objects in the Archive tier as on objects in the Hot and Cool access tiers. This will enable customers to easily integrate the new access tier into their applications.

- Durability: All access tiers including Archive are designed to offer the same high durability that customers have come to expect from Azure Storage with the same data replication options available today.

- Security: All data in the Archive access tier is automatically encrypted at rest using 256-bit AES encryption, one of the strongest block ciphers available.

- Global Reach: Archive Storage is available today in 14 regions – North Central US, South Central US, East US, West US, East US 2, Central US, West US 2, West Central US, North Europe, West Europe, Korea Central, Korea South, Central India, and South India.
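To put the headline price of $0.002 per gigabyte per month in perspective, here is a minimal arithmetic sketch. It covers at-rest storage only and assumes the "starting at" price applies in your region; transaction, retrieval, and early-deletion charges are not modeled, and the 1 TB = 1024 GB conversion is an assumption made for illustration.

```python
# Storage-only cost estimate at the announced Archive price.
# Assumption: the "starting at" price from the announcement applies as-is.
ARCHIVE_PRICE_PER_GB_MONTH = 0.002  # USD per GB per month


def monthly_storage_cost(size_gb: float,
                         price_per_gb_month: float = ARCHIVE_PRICE_PER_GB_MONTH) -> float:
    """Return the monthly at-rest cost in USD for size_gb gigabytes."""
    if size_gb < 0:
        raise ValueError("size_gb must be non-negative")
    return size_gb * price_per_gb_month


if __name__ == "__main__":
    for size_tb in (1, 100, 1000):
        size_gb = size_tb * 1024  # assumed conversion, for illustration only
        cost = monthly_storage_cost(size_gb)
        print(f"{size_tb:>5} TB -> ${cost:,.2f} per month")
```

Under these assumptions, 100 TB of archived data comes to roughly $205 per month before any access-related charges.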

Blob-Level Tiering: easily optimize storage costs without moving data

To simplify data lifecycle management, we now allow customers to tier their data at the object level. Customers can easily change the access tier of a single object among the Hot, Cool, or Archive tiers as usage patterns change, without having to move data between accounts. Blobs in all three access tiers can co-exist within the same account.
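Because tiering happens per object, an application can decide the tier for each blob from its own usage data. The following is a minimal sketch of such a policy, not a built-in Azure feature: the thresholds and the `choose_tier` helper are hypothetical, and the application is assumed to track each blob's last-access time itself.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical thresholds; tune them to your own access patterns and pricing.
COOL_AFTER = timedelta(days=30)
ARCHIVE_AFTER = timedelta(days=180)


def choose_tier(last_accessed: datetime, now: datetime) -> str:
    """Pick an access tier name from how long the blob has been idle."""
    idle = now - last_accessed
    if idle >= ARCHIVE_AFTER:
        return "Archive"
    if idle >= COOL_AFTER:
        return "Cool"
    return "Hot"


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    for days in (1, 45, 400):
        print(days, "days idle ->", choose_tier(now - timedelta(days=days), now))
```

The returned name can then be passed to whichever client library or API call the application uses to set the blob's tier.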

Flexible management

Archive Storage and Blob-Level Tiering are available on both new and existing Blob Storage and General Purpose v2 (GPv2) accounts. GPv2 is a new account type that supports all our latest features, while offering support for Block Blobs, Page Blobs, Files, Queues, and Tables. Customers with General Purpose v1 (GPv1) accounts can convert them to GPv2 accounts through a simple one-click step (Blob Storage account conversion support coming soon). GPv2 accounts have a different pricing model than GPv1 accounts, and customers should review it before using GPv2 because it may change their bill. See Azure Storage Options to learn more about GPv2, including how and when to use it.

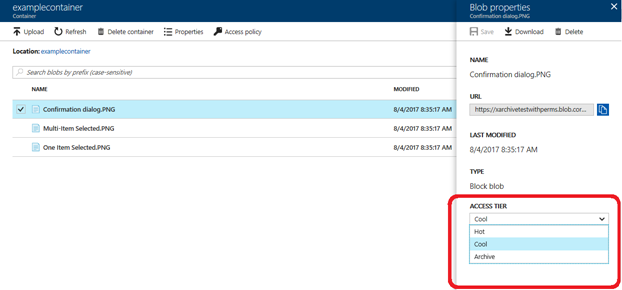

A user may access Archive and Blob-Level Tiering via the Azure portal (Figure 1), PowerShell, and CLI tools and REST APIs, .NET (Figure 2), Java, Python, or Node.js client libraries.

Figure 1: Set blob access tier in portal

    // Cast the listed item to a block blob, then move it to the Archive tier.
    CloudBlockBlob blob = (CloudBlockBlob)items;
    blob.SetStandardBlobTier(StandardBlobTier.Archive);

Figure 2: Set blob access tier using .NET client library
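For the REST route, the same tier change can be sketched as a single request. This is a minimal illustration rather than a full client: it assumes the Set Blob Tier operation is a `PUT` on the blob URL with `comp=tier` and an `x-ms-access-tier` header, that service version `2017-04-17` or later is accepted, and that a SAS token with sufficient permission is used for authorization. The account, container, and blob names are placeholders, and `build_set_tier_request` is a hypothetical helper. The code only builds the request; it does not send it.

```python
import urllib.request

# Assumed minimum service version that accepts the tier operation.
API_VERSION = "2017-04-17"
VALID_TIERS = ("Hot", "Cool", "Archive")


def build_set_tier_request(blob_url: str, sas_token: str, tier: str) -> urllib.request.Request:
    """Build (but do not send) a request that sets a blob's access tier."""
    if tier not in VALID_TIERS:
        raise ValueError(f"tier must be one of {VALID_TIERS}")
    # The SAS token is appended as query parameters after comp=tier.
    url = f"{blob_url}?comp=tier&{sas_token.lstrip('?')}"
    headers = {
        "x-ms-access-tier": tier,
        "x-ms-version": API_VERSION,
        "Content-Length": "0",  # the operation carries no request body
    }
    return urllib.request.Request(url, method="PUT", headers=headers)


if __name__ == "__main__":
    # Placeholder URL and token, for illustration only.
    req = build_set_tier_request(
        "https://myaccount.blob.core.windows.net/mycontainer/myblob.txt",
        "sv=placeholder&sig=placeholder",
        "Archive",
    )
    print(req.get_method(), req.full_url)
```

Passing the resulting object to `urllib.request.urlopen` would send it; check the status code and error body against the REST reference before relying on this in production.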

Partner Integration

We integrate with a broad ecosystem of partners to jointly deliver solutions to our customers. The following partners support Archive Storage:

Commvault’s Windows/Azure Centric software solution enables a single solution for storage-agnostic, heterogeneous enterprise data management. Commvault’s native support for Azure, including being one of the first Windows/ISV to be "Azure Certified" has been a key benefit for customers considering a Digital Transformation to Azure. Commvault remains committed to continuing our integration and compatibility efforts with Microsoft, befitting a close relationship between the companies that has existed for over 17 years. This includes quick, cost effective and efficient movement of data to Azure while enabling indexing such that our customers can proactively use the data we send to Azure, including "Azure Archive". With this new Archive Storage offering, Microsoft again makes significant enhancements to their Azure offering and we expect that this service will be an important driver of new and expanding opportunities for both Commvault and Microsoft.

NetApp® AltaVault™ cloud-integrated storage enables customers to tap into cloud economics and securely back up data to Microsoft Azure cloud storage at up to 90% lower cost compared with on-premises solutions. AltaVault’s modern storage architecture optimizes data using class-leading deduplication, compression, and encryption. Optimized data is written to Azure blob storage, reducing WAN bandwidth requirements and ensuring maximum data security. By adding Day 1 support for Azure Archive storage, AltaVault provides organizations access to the most cost-effective Azure blob storage tier, significantly driving down costs for rarely accessed long-term backup and archive datasets. Try AltaVault’s free 90-day trial and see how easy it is to leverage Microsoft Azure Archive cloud storage today.

HubStor is a cloud archiving platform that converges long-term retention and data protection for on-premises file servers, Office 365, email, and other sources of unstructured data content. Delivered as Software-as-a-Service (SaaS) exclusively on the Azure cloud platform, IT teams are adopting HubStor to understand, secure, and manage large volumes of data in Azure with policies for classification, indexing, WORM retention, deletion, and tiering. As detailed in this post, customers can now apply HubStor’s built-in file analytics and storage tiering policies with the new Azure Archive Blob Storage tier to place the right data on the optimal tier at the best time in the information lifecycle. Enterprise Strategy Group recently completed a lab validation report on HubStor which you can download here.

CloudBerry Backup for Microsoft Azure automates data upload to Microsoft Azure cloud storage. It can compress and encrypt the data with a user-defined password before upload, then securely transfer it to the cloud either on a schedule or in real time. CloudBerry Backup also comes with file-system and image-based backup, SQL Server and MS Exchange support, as well as flexible retention policies and incremental backup. CloudBerry Backup now supports Microsoft Azure Archive Blob Storage for storing backup and archival data.

Archive2Azure, the intelligent data management and compliance archiving solution, provides customers a native Azure archiving application. Archive2Azure enables companies to provide automated retention, indexing on demand, encryption, search, review, and production for long-term archiving of their compliance, active, low-touch, and inactive data from within their own Azure tenancy. This pairing of the Azure Cloud with Archive2Azure’s archiving and data management capabilities provides companies with the cloud-based security and information management they have long sought. With the general availability of Azure’s much-anticipated Archive Storage offering, the security and lower cost needed to archive and manage data for extended periods are now available, and Archive2Azure can offer Azure’s full range of storage tiers, giving users a wide choice of storage performance and cost.

[Archive support coming soon] Cohesity delivers the world’s first hyper-converged storage system for enterprise data. Cohesity consolidates fragmented, inefficient islands of secondary storage into an infinitely expandable and limitless storage platform that can run both on-premises and in the public cloud. Designed with the latest web-scale distributed systems technology, Cohesity radically simplifies existing backup, file shares, object, and dev/test storage silos by creating a unified, instantly accessible storage pool. The Cohesity platform will support Azure Archive Storage for the following customer use cases: (i) long-term retention of infrequently accessed data that requires cost-effective, lowest-priced blob storage, (ii) blob-level tiering among the Hot, Cool, and Archive tiers, and (iii) easy recovery of data from the cloud back to on-premises, independent of which Azure blob tier the data is in. Note that Azure Blob storage can be easily registered and assigned via Cohesity’s policy-based administration portal to any data protection workload running on the Cohesity platform.

Igneous Systems delivers the industry’s first secondary storage system built to handle massive file systems. Offered as-a-Service and built using a cloud-native architecture, Igneous Hybrid Storage Cloud provides a modern, scalable approach to management of unstructured file data across datacenters and public cloud, without the need to manage infrastructure. Igneous supports backup and long-term archiving of unstructured file data to Azure Archive Blob Storage, enabling organizations to replace legacy backup software and targets with a hybrid cloud approach.

[Archive support coming soon] Rubrik orchestrates all critical data management services – data protection, search, development, and analytics – on one platform across all your Microsoft applications. By adding integration with Microsoft Azure Archive Storage Tier, Rubrik will complete support for all storage classes of Azure. With Rubrik, enterprises can now automate SLA compliance to any class in Azure with one policy engine and manage all archival locations in a single consumer-grade interface to meet regulatory and legal requirements. Leverage a rich suite of API services to create custom lifecycle management workflows across on-prem to Azure. Rubrik Cloud Data Management was architected from the beginning to deliver cloud archival services with policy-driven intelligence. Rubrik has achieved Gold Cloud Platform competency and offers end-to-end coverage of Microsoft technologies and services (physical or virtualized Windows, SQL, Hyper-V, Azure Stack, and Azure).
