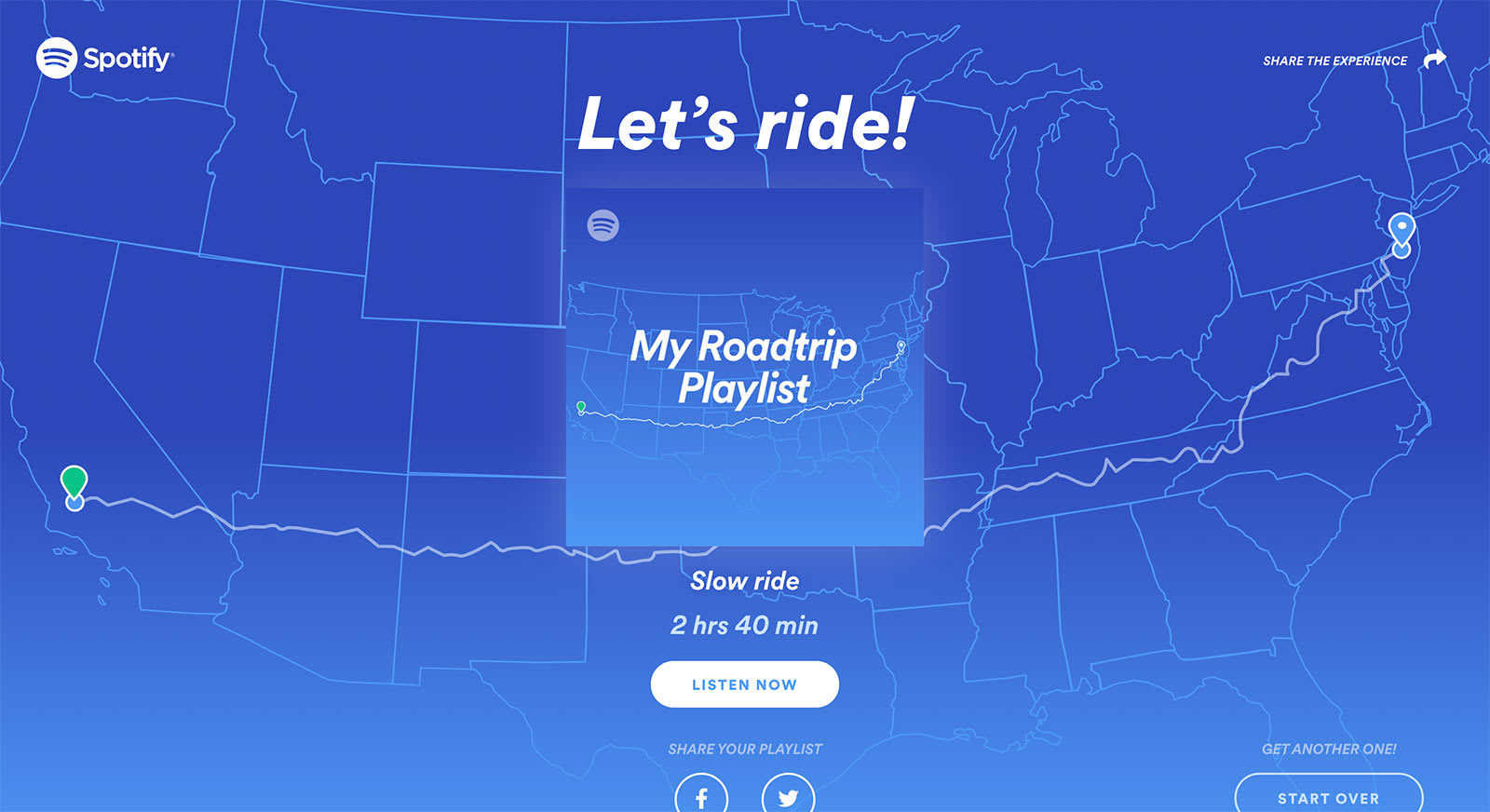

The content below is taken from the original ( Spotify’s latest feature creates a playlist for your road trip), to continue reading please visit the site. Remember to respect the Author & Copyright.

The content below is taken from the original ( Cray to license Fujitsu Arm processor for supercomputers), to continue reading please visit the site. Remember to respect the Author & Copyright.

Cray says it will be the first supercomputer vendor to license Fujitsu’s A64FX Arm-based processor with high-bandwidth memory (HBM) for exascale computing.

Under the agreement, Cray – now a part of HPE – is developing the first-ever commercial supercomputer powered by the A64FX processor, with initial customers being the usual suspects in HPC: Los Alamos National Laboratory, Oak Ridge National Laboratory, RIKEN, Stony Brook University, and University of Bristol.

As part of this new partnership, Cray and Fujitsu will explore engineering collaboration, co-development, and joint go-to-market to meet customer demand in the supercomputing space. Cray will also bring its Cray Programming Environment (CPE) for Arm processors over to the A64FX to optimize applications and take full advantage of SVE and HBM2.

The content below is taken from the original ( New Automation Features In AWS Systems Manager), to continue reading please visit the site. Remember to respect the Author & Copyright.

Today we are announcing additional automation features inside of AWS Systems Manager. If you haven’t used Systems Manager yet, it’s a service that provides a unified user interface so you can view operational data from multiple AWS services and allows you to automate operational tasks across your AWS resources.

With this new release, it just got even more powerful. We have added additional capabilities to AWS Systems Manager that enable you to build, run, and share automations with others on your team or inside your organisation, making the management of your infrastructure more repeatable and less error-prone.

Inside the AWS Systems Manager console, the navigation menu has an item called Automation. If I click this menu item, I will see the Execute automation button.

When I click on this I am asked what document I want to run. AWS provides a library of documents that I could choose from, however today, I am going to build my own so I will click on the Create document button.

This takes me to a new screen that allows me to create a document (sometimes referred to as an automation playbook) that, amongst other things, executes Python or PowerShell scripts.

The console gives me two options for editing a document: A YAML editor or the “Builder” tool that provides a guided, step-by-step user interface with the ability to include documentation for each workflow step.

So, let’s take a look by building and running a simple automation. When I create a document using the Builder tool, the first thing required is a document name.

Next, I need to provide a description. As you can see below, I’m able to use Markdown to format the description. The description is an excellent opportunity to describe what your document does. This is valuable since most users will want to share these documents with others on their team and build a library of documents to solve everyday problems.

Optionally, I am asked to provide parameters for my document. These parameters can be used in all of the scripts that you will create later. In my example, I have created three parameters: imageId, tagValue, and instanceType. When I come to execute this document, I will have the opportunity to provide values for these parameters that will override any defaults that I set.

When someone executes my document, the scripts that are executed will interact with AWS services. A document runs with the user permissions for most of its actions along with the option of providing an Assume Role. However, for documents with the Run a Script action, the role is required when the script is calling any AWS API.

You can set the Assume role globally in the builder tool; however, I like to add a parameter called assumeRole to my document. This gives anyone who executes it the ability to provide a different one.

You then wire this parameter up to the global assumeRole by using the syntax in the Assume role property textbox (I have called my parameter name assumeRole but you could call it what you like, just make sure that the name you give the parameter is what you put in the double parentheses syntax e.g.).
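As a rough illustration of that wiring, the sketch below builds the top level of an Automation document as a Python dictionary. Automation documents reference parameters with double curly braces, as in `{{ assumeRole }}`. The parameter names come from the walkthrough; the descriptions, the `t2.micro` default, and the overall layout are assumptions for illustration, not the document the author built.

```python
import json

# Hypothetical document skeleton. Parameter names follow the walkthrough;
# descriptions and default values are illustrative assumptions.
document = {
    "schemaVersion": "0.3",
    "description": "Launch an EC2 instance and wait for it to become healthy.",
    # Wire the global Assume role to the assumeRole parameter declared below.
    "assumeRole": "{{ assumeRole }}",
    "parameters": {
        "assumeRole": {"type": "String", "description": "ARN of the role the automation assumes."},
        "imageId": {"type": "String", "description": "AMI to launch."},
        "tagValue": {"type": "String", "default": "LaunchedBySsmAutomation"},
        "instanceType": {"type": "String", "default": "t2.micro"},
    },
    "mainSteps": [],  # steps would be appended here
}

print(json.dumps(document, indent=2))
```

Whatever name the parameter is given, the placeholder in `assumeRole` has to use the same name.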

Once my document is set up, I then need to create the first step of my document. Your document can contain 1 or more steps, and you can create sophisticated workflows with branching, for example based on a parameter or failure of a step. Still, in this example, I am going to create three steps that execute one after another. Again you need to give the step a name and a description. This description can also include markdown. You need to select an Action Type, for this example I will choose Run a script.

With the ‘Run a script’ action type, I get to run a script in Python or PowerShell without requiring any infrastructure to run the script. It’s important to realise that this script will not be running on one of your EC2 instances. The scripts run in a managed compute environment. You can configure an Amazon CloudWatch log group on the preferences page to send outputs to a log group of your choice.

In this demo, I write some Python that creates an EC2 instance. You will notice that this script is using the AWS SDK for Python. I create an instance based upon an image_id, tag_value, and instance_type that are passed in as parameters to the script.
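The original script is not reproduced in this text, so here is a minimal sketch of what such a launch script might look like. The handler name `launch_instance`, the tag key `LaunchedBy`, and the optional `ec2` argument (added so the function can be exercised with a stub instead of a real AWS account) are all assumptions; only the three input names come from the walkthrough.

```python
def launch_instance(events, context, ec2=None):
    """Create one EC2 instance from the values passed in by the automation.

    The optional ec2 argument is an addition for local testing with a stub
    client; when it is omitted, a real boto3 client is created.
    """
    if ec2 is None:
        import boto3  # AWS SDK for Python
        ec2 = boto3.client('ec2')

    response = ec2.run_instances(
        ImageId=events['image_id'],
        InstanceType=events['instance_type'],
        MinCount=1,
        MaxCount=1,
        TagSpecifications=[{
            'ResourceType': 'instance',
            # The tag key is a hypothetical choice for this sketch.
            'Tags': [{'Key': 'LaunchedBy', 'Value': events['tag_value']}],
        }],
    )
    instance_id = response['Instances'][0]['InstanceId']
    print('[INFO] Launched instance', instance_id)
    return {'InstanceId': instance_id}


class _StubLaunchClient:
    """Stand-in client so the sketch can run without AWS access."""
    def run_instances(self, **kwargs):
        self.last_call = kwargs
        return {'Instances': [{'InstanceId': 'i-0123456789abcdef0'}]}


stub = _StubLaunchClient()
result = launch_instance(
    {'image_id': 'ami-00000000', 'tag_value': 'LaunchedBySsmAutomation', 'instance_type': 't2.micro'},
    None,
    ec2=stub,
)
print(result)
```

Returning a dictionary that contains `InstanceId` is what lets a later step pick the value up.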

To pass parameters into the script, in the Additional Inputs section, I select InputPayload as the input type. I then use a particular YAML format in the Input Value text box to wire up the global parameters to the parameters that I am going to use in the script. You will notice that again I have used the double parentheses syntax to reference the global parameters e.g.

In the Outputs section, I also wire up an output parameter that can be used by subsequent steps.
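To make the input and output wiring concrete, the sketch below writes the first step as a Python dictionary and checks that every placeholder in its InputPayload refers to a declared parameter. The step name and the output name `payload` follow the walkthrough; the runtime value, the handler name, the one-line script body, and the `undeclared_references` helper are assumptions made for this example.

```python
import re

# Names declared in the document's parameters section (from the walkthrough).
declared_parameters = {"imageId", "tagValue", "instanceType", "assumeRole"}

launch_step = {
    "name": "launchEc2Instance",
    "action": "aws:executeScript",
    "inputs": {
        "Runtime": "python3.8",        # placeholder; pick a runtime the service supports
        "Handler": "launch_instance",  # hypothetical function name inside Script
        "InputPayload": {
            "image_id": "{{ imageId }}",
            "tag_value": "{{ tagValue }}",
            "instance_type": "{{ instanceType }}",
        },
        "Script": "def launch_instance(events, context): ...",
    },
    # Expose the script's return value to later steps under the name "payload".
    "outputs": [{"Name": "payload", "Selector": "$.Payload", "Type": "StringMap"}],
}

PLACEHOLDER = re.compile(r"\{\{\s*([A-Za-z0-9_.]+)\s*\}\}")


def undeclared_references(step, declared):
    """Return placeholder names in InputPayload that match no declared parameter."""
    missing = []
    for value in step["inputs"]["InputPayload"].values():
        for name in PLACEHOLDER.findall(value):
            if name not in declared:
                missing.append(name)
    return missing


print(undeclared_references(launch_step, declared_parameters))
```

A check like this catches a misspelled placeholder before the document is ever executed; it is a local convenience, not something the service requires.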

Next, I will add a second step to my document. This time I will poll the instance to see if its status has switched to ok. The exciting thing about this code is that the InstanceId is passed into the script from a previous step. This is an example of how the execution steps can be chained together to use outputs of earlier steps.

    def poll_instance(events, context):
        import boto3
        import time

        ec2 = boto3.client('ec2')
        instance_id = events['InstanceId']
        print('[INFO] Waiting for instance to enter Status: Ok', instance_id)

        instance_status = "null"
        while True:
            res = ec2.describe_instance_status(InstanceIds=[instance_id])
            if len(res['InstanceStatuses']) == 0:
                print("Instance Status Info is not available yet")
                time.sleep(5)
                continue
            instance_status = res['InstanceStatuses'][0]['InstanceStatus']['Status']
            print('[INFO] Polling get status of the instance', instance_status)
            if instance_status == 'ok':
                break
            time.sleep(10)

        return {'Status': instance_status, 'InstanceId': instance_id}

To pass the parameters into the second step, notice that I use the double parentheses syntax to reference the output of a previous step. The value in the Input value textbox is the name of the step launchEc2Instance and then the name of the output parameter payload.
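The polling script above loops until the status becomes ok, with no upper bound on how long it waits. A bounded variant might look like the sketch below. The function name, the `max_attempts` and `delay_seconds` defaults, and the injectable `ec2` and `sleep` arguments are assumptions added for illustration and local testing; they are not part of the original script.

```python
import time


def wait_for_status_ok(ec2, instance_id, max_attempts=60, delay_seconds=10, sleep=time.sleep):
    """Poll describe_instance_status until the instance reports 'ok' or attempts run out."""
    status = None
    for _ in range(max_attempts):
        res = ec2.describe_instance_status(InstanceIds=[instance_id])
        statuses = res['InstanceStatuses']
        if statuses:  # status information may not be available right after launch
            status = statuses[0]['InstanceStatus']['Status']
            if status == 'ok':
                return {'Status': status, 'InstanceId': instance_id}
        sleep(delay_seconds)
    raise TimeoutError(f'{instance_id} did not reach ok (last status: {status})')


class _StubStatusClient:
    """Returns no status, then 'initializing', then 'ok'."""
    def __init__(self):
        self._responses = [
            {'InstanceStatuses': []},
            {'InstanceStatuses': [{'InstanceStatus': {'Status': 'initializing'}}]},
            {'InstanceStatuses': [{'InstanceStatus': {'Status': 'ok'}}]},
        ]

    def describe_instance_status(self, InstanceIds):
        return self._responses.pop(0)


outcome = wait_for_status_ok(_StubStatusClient(), 'i-0123456789abcdef0', sleep=lambda s: None)
print(outcome)
```

Raising an error on timeout makes the step fail visibly instead of running indefinitely. boto3 also provides an EC2 waiter named `instance_status_ok` that covers the same ground.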

Lastly, I will add a final step. This step will run a PowerShell script and use the AWS Tools for PowerShell. I’ve added this step purely to show that you can use PowerShell as an alternative to Python.

You will note on the first line that I have to install the AWSPowerShell.NetCore module and use the -Force switch before I can start interacting with AWS services.

All this step does is take the InstanceId output from the LaunchEc2Instance step and use it to return the InstanceType of the EC2 instance.

It’s important to note that I have to pass the parameters from LaunchEc2Instance step to this step by configuring the Additional inputs in the same way I did earlier.

Now that our document is created, we can execute it. I go to the Actions & Change section of the menu and select Automation. From this screen, I click on the Execute automation button. I then get to choose the document I want to execute. Since this is a document I created, I can find it on the Owned by me tab.

If I click the LaunchInstance document that I created earlier, I get a document details screen that shows me the description I added. This nicely formatted description allows me to generate documentation for my document and enable others to understand what it is trying to achieve.

When I click Next, I am asked to provide any Input parameters for my document. I add the imageId and ARN for the role that I want to use when executing this automation. It’s important to remember that this role will need to have permissions to call any of the services that are requested by the scripts. In my example, that means it needs to be able to create EC2 instances.
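The same execution can also be started from code rather than the console. The sketch below uses the SSM client's `start_automation_execution` call, which takes parameters as a map of names to lists of strings. The helper names, the placeholder role ARN and image ID, and the stub client are assumptions for illustration; the document name and parameter names follow the walkthrough.

```python
def to_automation_parameters(values):
    """Automation parameters are passed as a map of name -> list of strings."""
    return {name: [str(value)] for name, value in values.items()}


def run_document(ssm, document_name, values):
    """Start an execution and return its ID. ssm is a boto3 SSM client or a stub."""
    response = ssm.start_automation_execution(
        DocumentName=document_name,
        Parameters=to_automation_parameters(values),
    )
    return response['AutomationExecutionId']


class _StubSsmClient:
    """Stand-in client so the sketch can run without AWS access."""
    def start_automation_execution(self, **kwargs):
        self.last_call = kwargs
        return {'AutomationExecutionId': 'hypothetical-execution-id'}


ssm_stub = _StubSsmClient()
execution_id = run_document(
    ssm_stub,
    'LaunchInstance',
    {
        'imageId': 'ami-00000000',  # placeholder image ID
        'assumeRole': 'arn:aws:iam::123456789012:role/HypotheticalAutomationRole',
    },
)
print(execution_id)
```

With a real client created via `boto3.client('ssm')`, the role passed in still needs permission for everything the scripts call.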

Once the document executes, I am taken to a screen that shows the steps of the document and gives me details about how long each step took and respective success or failure of each step. I can also drill down into each step and examine the logs. As you can see, all three steps of my document completed successfully, and if I go to the Amazon Elastic Compute Cloud (EC2) console, I will now have an EC2 instance that I created with tag LaunchedBySsmAutomation.

These new features can be found today in all regions inside the AWS Systems Manager console so you can start using them straight away.

Happy Automating!

— Martin;

The content below is taken from the original ( 4 steps to a successful cloud migration), to continue reading please visit the site. Remember to respect the Author & Copyright.

Digital transformation and migration to the cloud are top priorities for a lot of enterprises. At Google Cloud, we’re working hard to make this journey easier. For example, we recently launched Migrate for Compute Engine and Migrate for Anthos to simplify cloud migration and modernization. These services have helped customers like Cardinal Health perform successful, large-scale migrations to GCP.

But we understand that the migration journey can be daunting. To make things easier, we developed a whitepaper on application migration featuring investigative processes and advice to help you design an effective migration and modernization strategy. This guide outlines the four basic steps you need to follow to migrate successfully and efficiently:

There’s a lot to consider when you start thinking about digital transformation, and every cloud modernization project has its nuances and unique considerations. The secret to success is understanding the advantages and disadvantages of the options at your disposal, and weighing them against what you want to transform and why. To learn how to migrate and modernize your applications with Google Cloud, download this whitepaper.

The content below is taken from the original ( 100 Google products that were deprecated or abandoned), to continue reading please visit the site. Remember to respect the Author & Copyright.

Google launches several products and services every year and discontinues many that don’t do well. While we are familiar with the ones that succeed, most of the failed ones are consigned to the dustbins of history. Here is a list of […]

This post 100 Google products that were deprecated or abandoned is from TheWindowsClub.com.

The content below is taken from the original ( ThousandEyes Annual Research Report Reveals Notable Performance Variations Between AWS, GCP, Azure, Alibaba and IBM Cloud), to continue reading please visit the site. Remember to respect the Author & Copyright.

ThousandEyes, the Internet and Cloud Intelligence company, announced the findings of its second annual Cloud Performance Benchmark, the only research Read more at VMblog.com.

Time passes but memories never fade… A minute to remember!

The content below is taken from the original ( Have you been naughty, or have you been really naughty? Microsoft 365 users to get their very own Compliance Score), to continue reading please visit the site. Remember to respect the Author & Copyright.

Ignite Got governance? Microsoft reckons there is room for improvement – it should know – and has used its Ignite Florida knees-up to batter compliance with its overused AI stick.…

The content below is taken from the original ( This $387 Azure certification prep bundle is currently on sale for $29), to continue reading please visit the site. Remember to respect the Author & Copyright.

Modern tech companies require more computing power than ever before, so many of them are turning to cloud services like Microsoft Azure to meet their needs. As such, becoming cloud-certified is a necessity if you want to pursue today’s highest-paying IT jobs. With this 4-course bundle, you can become an Azure master for just $29.

The content below is taken from the original ( State of the Brainz: 2019 MetaBrainz Summit highlights), to continue reading please visit the site. Remember to respect the Author & Copyright.

The 2019 MetaBrainz Summit took place on 27th–29th of September 2019 in Barcelona, Spain at the MetaBrainz HQ. The Summit is a chance for MetaBrainz staff and the community to gather and plan ahead for the next year. This report is a recap of what was discussed and what lies ahead for the community.

The Summits are a once-annual gathering of MetaBrainz staff and contributors working on key project areas. This year, the Summit was attended by the following folks:

The Summit took place at the MetaBrainz HQ in Barcelona, Spain. Over the course of one weekend, the team and community took on an ambitious agenda of tasks and discussions:

This blog post won’t summarize all the discussion above, but it will try to emphasize some of the more interesting discussions. (For the full details, peek at the Summit 2019 notes document or the archived live stream.)

The Summit began with a quick recap from all different project teams. The notes from the Summit are included below based on the project and who presented on it. Click to navigate to what you want to stay informed on:

Engineering efforts can be divided into two sub-categories: Code and Infrastructure.

Future steps for code are focused around genres, user script engagement, API key design, and an editing system overhaul.

Genres are an eternal topic across the MetaBrainz Summits, but now, they finally exist and are implemented in the front-end and back-end. There was some talk about whether MusicBrainz should be deciding what is or is not a genre; some UPF MTG datasets (ISMIR2004 Genre dataset, MediaEval AcousticBrainz Genre) were mentioned, which collect genres and map them in some way to MBIDs. Aliases for genres are also planned, but there are still some edge cases to consider, like whether an alias could apply to two genres.

User script engagement comes from MetaBrainz wanting a better integration of MusicBrainz with popular user scripts. The team recognized the usefulness of user scripts to create popular tweaks and changes to the viewing or editing experience of MusicBrainz data. Not only do they help other users, but they also give the development team feedback on popular features to add into MusicBrainz properly. The concerns highlighted here were around scripts that perform mass edits across multiple releases; with great power comes great responsibility when implementing something like this in the database. The team did not come to a final resolution on how to make this a better collaboration. If you are a member of the user script community, we’d love to hear your thoughts on this in Discourse.

API key design comes from MetaBrainz wishing to have a greater understanding of who uses our data and how, particularly around the Live Data Feed. The intended approach is to use mandatory API keys for end-users to authenticate with. Part of this is an authentication system question (considering an OAuth2 migration) and how to handle dates and times in the API. Monkey was tasked with formalizing a proposal at a later date to move this conversation forward.

An editing system overhaul was one of the most ambitious discussions held at the Summit. The need for an overhaul comes from challenges around scaling and growing the database. It also comes from a discussion about isolating the editing system of MusicBrainz from the actual project; for example, this could enable BookBrainz to use the same editor “code” as MusicBrainz, while allowing each to take a different form for their types of data. A git‐like editing system was proposed and some early architecture research was completed by the end of the Summit.

Future steps and projects for infrastructure were around Docker/container orchestration and indexed search.

There are two components to how MetaBrainz uses Docker and containers: for local development and production deployment. Local development helps developers run a project on their own hardware and test their changes. Production deployment concerns how containers are deployed in an infrastructure environment. The infrastructure team hopes to standardize on Docker best practices for development. More consistency across projects helps existing contributors jump into new areas or other projects more easily. Another part of this conversation was around container orchestration with Kubernetes or OpenShift, but more research is needed to understand the cost-value of doing this work. There is limited bandwidth for the infrastructure team to take on new projects and meet current demands.

YvanZo started the conversation on indexed search by reviewing the Solr set-up documentation. The discussion moved to the Search Index Rebuilder (SIR) and pending work to improve performance and resource load. More notes from the discussion are available.

The community updates were divided into three pieces: community engagement, documentation roadmap, and Google Code-In.

The community engagement discussion started with a map of the MetaBrainz community by Justin W. Flory:

Three different initiatives were suggested as ways to enable existing and new contributors in a variety of ways, not all code-related:

The community index is a “point of entry” for anyone who wants to get involved with a MetaBrainz project. The flowchart above describes the different ways to get involved, but there is not a good pathway for someone to get directed to the best way they can help. An example of what we want to create is similar to whatcanidoformozilla.org or whatcanidoforfedora.org. This is still a discussion in progress, but if you are interested, please vote and/or comment on JIRA ticket ORG-36.

The MetaBrainz community is deeply rooted in IRC, but IRC is not an easy-to-use tool for everyone. Some folks use IRCCloud as a way to get around this, but for new contributors, IRC is not an accessible platform. One way to get around this is with chat bridges. At the Summit, we committed to exploring a chat bridge, possibly between our IRC channels and Telegram groups. An IRC channel would be linked to a Telegram group, so messages in one appear in the other. This is one social experiment we want to try for bringing new people into the MetaBrainz community. Future progress is waiting on an upstream feature request to be implemented.

An advocacy program aims to empower the community to present MetaBrainz projects, and their own works linked to MetaBrainz, at various events around the world. This follows models set by other larger community projects that implement a community ambassador or advocacy program. More discussion is needed, but if you are interested in this, please vote and/or comment on JIRA ticket ORG-24.

How do we make our documentation more useful and practical? See this spin-off discussion in the forums for more information. To keep up with future changes, please vote and/or comment on JIRA ticket OTHER-350.

Google Code-in was led by Freso. We quickly jumped to discussions of how to start planning for participation this year. Fortunately, we are able to use last year’s tasks as a base for writing new tasks this year. We still need help with mentorship and planning, so if you want to get involved with that, please reach out via the usual channels.

This event would not have been possible without the support of Microsoft. Thank you for supporting the engagements and interactions that support this project in the best possible way.

Thanks everyone who travelled from near and far (and those who couldn’t be there together with us), brought lots of yummy chocolates, danced Bollywood style during breaks, and helped contribute towards a more Free world of music and technology.

The content below is taken from the original ( New Microsoft Windows Performance Monitor), to continue reading please visit the site. Remember to respect the Author & Copyright.

As part of the new Windows Admin Center, we have a new Microsoft Windows Performance Monitor tool that refreshes a decades-old component

The post New Microsoft Windows Performance Monitor appeared first on ServeTheHome.

The content below is taken from the original ( Surface Pro X teardown reveals one of the most repairable tablets ever), to continue reading please visit the site. Remember to respect the Author & Copyright.

The content below is taken from the original ( Strava artist creates incredible work to mark anniversary of fall of Berlin Wall), to continue reading please visit the site. Remember to respect the Author & Copyright.

A Strava artist has created an incredible piece to mark the anniversary of the fall of the Berlin Wall.

The content below is taken from the original ( Prepare for the CompTIA certification exams with this $69 training bundle), to continue reading please visit the site. Remember to respect the Author & Copyright.

No modern tech company can operate without a capable IT department, and as new companies launch each year, the demand for IT professionals will continue to grow. However, IT professionals must earn their certifications first, and CompTIA certifications are among the most versatile because they’re vendor-neutral. If you’re interested in earning a CompTIA certification, you can prepare with this $69 training bundle.

The content below is taken from the original ( Is there a way to check if a new BitLocker key has been escrowed to AD?), to continue reading please visit the site. Remember to respect the Author & Copyright.

submitted by /u/MiggeldyMackDaddy to r/PowerShell

The content below is taken from the original ( Admin Insider: What’s new in Chrome Enterprise, Release 78), to continue reading please visit the site. Remember to respect the Author & Copyright.

In our latest release, Chrome 78 brings features to help increase user productivity, and improve policy management for Admins. Here’s what to expect (and as always, for the full list of new features, be sure to read the release notes).

Using Virtual Desks to increase productivity and reduce clutter

Starting in Chrome 78, users will be able to create up to four separate work spaces using Virtual Desks. Think of Virtual Desks as separate workspaces within your Chromebook. Use this feature to create helpful boundaries between projects or activities. This makes it easier to multitask and stay organized.

To enable Virtual Desks, people can tap the overview key on the top of the keyboard or swipe down on the keypad using three fingers; “+ New desk” will appear in the top right hand corner. Read more about Virtual Desks and other updates.

Linux Beta experience enhancements

With Chromebooks, you can install your favorite developer tools and build great applications. In Chrome 73, we made Linux containers available so that developers can access their favorite Integrated Development Environments (IDE) and other tools they know and love. In this latest version, we’re introducing two additional features to make this experience even better:

Backup and restore. If a developer wants to restore their Linux environment to a previous version, they can now back up their files and applications to a restorable image, which can be used on that machine or a different Chromebook. The image can be backed up to your Chromebook’s local storage, an external drive, or Google Drive.

GPU support on-by-default. Linux apps will now be able to use the Chromebooks’ Graphics Processing Unit (GPU) to provide a snappier, lower-latency experience for development applications.

Improving policy management with Atomic policy groups

With so many options for policy management, administrators are looking for ways to reduce potential policy conflicts, especially if admins of the same fleet are using multiple tools to manage that fleet, like group policy and the Google Admin console. To ensure predictable behavior from policies that are tightly related, some policies have been regrouped as “atomic policy groups” for Chrome Browser and Chrome OS. This means that if you choose to enable “atomic policy groups,” policies in a single group will all be forced to set their behavior based on the highest priority source. You can enable atomic policy groups using PolicyAtomicGroupsEnabled.

You can see if there are any conflicting policies from different sources at chrome://policy. Note: If you have multiple policies in the same policy group from different sources, they will be affected by this feature. Read about Atomic Policy Groups or check out this article on Chrome policy management to learn more.
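The group-level rule can be pictured with a small simulation. This is only a sketch of the idea described above, not Chrome's implementation: the source labels, their priority order, the group membership, and the function name are all assumptions chosen for the example.

```python
# Assumed precedence, highest first. Chrome's real ordering depends on
# platform and configuration; these labels are illustrative only.
SOURCE_PRIORITY = ["platform", "cloud"]


def apply_atomic_groups(policies, groups):
    """Drop grouped policies that do not come from their group's top source.

    policies: policy name -> (source, value)
    groups:   group name -> list of policy names
    Returns the policies that remain in effect.
    """
    effective = dict(policies)
    for members in groups.values():
        present = [name for name in members if name in policies]
        if not present:
            continue
        # The highest-priority source that sets any policy in the group wins.
        winning_source = min((policies[n][0] for n in present), key=SOURCE_PRIORITY.index)
        for name in present:
            if policies[name][0] != winning_source:
                del effective[name]
    return effective


policies = {
    "HomepageLocation": ("platform", "https://intranet.example"),
    "HomepageIsNewTabPage": ("cloud", True),
    "DefaultBrowserSettingEnabled": ("cloud", False),  # not in any group here
}
groups = {"Homepage": ["HomepageLocation", "HomepageIsNewTabPage"]}

effective = apply_atomic_groups(policies, groups)
print(sorted(effective))
```

In this example the cloud-sourced homepage setting is ignored because another policy in the same group comes from the higher-priority source, while the ungrouped policy is left alone.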

Chrome OS and Chrome Browser settings split

Chrome has historically shared a single settings surface for both OS and browser configuration for users. However, from Chrome 78 on, we will be splitting Chrome OS and browser configurations into two. This allows the OS settings to be developed independently, while still providing the consistent customizability Chrome Browser users have grown to expect.

Settings on Chrome OS will now have native OS settings housed in the “Settings” app (available via Launcher or when you click the settings icon in the Quick Settings menu). Chrome Browser settings will not change and can still be accessed in the familiar three-dot menu in the top right corner of the browser app.

Tip: Enterprise Admins that block Chrome Browser settings by URL (chrome://settings) might also want to block the new URL for Chrome OS settings (chrome://os-settings).

To stay in the know, bookmark this Help Center page that details new releases, or sign up to receive new release details as they become available.

The content below is taken from the original ( VMware Expands Reach of VMware Cloud on AWS for Cloud Providers and MSPs with VMware Cloud Director Service), to continue reading please visit the site. Remember to respect the Author & Copyright.

Today at VMworld 2019 Europe, VMware, Inc. announced a new multi-tenancy service for VMware Cloud on AWS that will make it even more attractive and… Read more at VMblog.com.

The content below is taken from the original ( The first US clinical trial of using an in-brain chip to fight opioid addiction is now underway), to continue reading please visit the site. Remember to respect the Author & Copyright.

Opioid addiction is easily one of the most widespread healthcare issues facing the U.S., and research indicates we’re nowhere near achieving any kind of significant mitigating solution. But a team of medical researchers working at the West Virginia University Rockefeller Neuroscience Institute (RNI) and West Virginia University Medicine (WVU) is beginning a new clinical trial of a solution that uses brain-embedded technology to potentially curb opioid addiction in cases that have resisted other methods of treatment.

A team of neurosurgeons from RNI and WVU successfully implanted what’s called a “deep brain stimulation” or DBS device into the brain of a 33-year-old man, the first person to participate in the trial. The DBS device consists of a number of tiny electrodes, attached to specific parts of the brain that are known to be associated with addiction and self-control behaviors. The DBS should, in theory, be able to curb addiction as related impulses are sent, and also monitor cravings in real time in the patient, providing valuable data to researchers about what’s occurring in cases of treatment-resistant opioid addiction.

Opioid addiction resulted in as many as 49.6 deaths per 100,000 people in West Virginia in 2017, WVU notes. That’s the highest rate of opioid-related deaths in the U.S. Other, less invasive treatment options are definitely available, including opioid alternatives that will provide pain relief to chronic sufferers, like the one being developed by startup Coda. But for existing sufferers, and especially for the significant portion of opioid addiction patients for whom other treatments have not proven effective, a high-tech option like DBS might be the only viable course.

This RNI trial will initially include four participants, who have all undergone thorough courses of treatment across a number of programs and yet continue to suffer from addiction. The team involved also has extensive experience working with DBS in FDA-approved treatment of other disorders, including epilepsy and obsessive-compulsive disorder. It’s definitely a last-resort approach, but if this clinical trial produces positive results, it could be an option to help the most serious of cases when all other options are exhausted.

The content below is taken from the original ( Partnering with Nutanix to run Windows and Linux desktop apps on GCP), to continue reading please visit the site. Remember to respect the Author & Copyright.

Google Cloud is extending its partnership with Nutanix with Xi Frame on Google Cloud Platform (GCP), now generally available. Frame provides a cloud-native, simple and powerful solution to run Windows or Linux remote desktop applications on any device. Enterprises can now run virtual workspaces on GCP and take advantage of GCP’s flexible resources.

With Xi Frame and Google Cloud, customers only need a web browser to run remote applications from anywhere and on any device. With this solution, users can, for example, run Xi Frame on Google end-to-end, from the underlying Google Cloud infrastructure (VM, storage, networking, etc.) to the end-user client with Chrome devices.

Deploying Xi Frame on GCP is fast and simple. Frame provides two options to deploy your digital workspace and applications: you can leverage a fully managed Desktop-as-a-Service where Frame manages all the infrastructure resources on your behalf, or you can manage GCP resources such as VM limits and custom networking yourself, and only pay Nutanix for the Xi Frame platform services that automate and orchestrate resources in your GCP account.

We’ve been working closely with Nutanix to integrate Frame with multiple Google Cloud services:

Google Cloud Platform: Support for GCP across all regions, with a variety of VM types to fit your workload needs, including GPUs

Google Chrome OS: Integration with Chromebooks and devices enables users to launch Windows applications directly from the ChromeOS shelf

Google Drive and File Stream: Help keep your files safe, secure and always available with Google Drive, including on shared drives

Google Cloud Identity: Sign in with Google to authenticate your users, and automatically connect to Google Drive after first sign in

“We are delighted to offer Frame on Google Cloud Platform. GCP’s security, price/performance and network backbone make it a great choice to run your remote desktop infrastructure,” said Nikola Bozinovic, VP/GM Desktop Services at Nutanix.

You can learn more at nutanix.com/frame-for-google and try Frame on GCP with a free 30-day trial with your existing GCP account.

The content below is taken from the original ( Untangle Extends the Network to the Edge with the Release of Untangle SD-WAN Router and New eSeries Appliances), to continue reading please visit the site. Remember to respect the Author & Copyright.

Untangle Inc., a leader in comprehensive network security for small-to-medium businesses (SMBs) and distributed enterprises, today announced the… Read more at VMblog.com.

The content below is taken from the original ( Show off your BigQuery ML and Kaggle skills: Competition open now), to continue reading please visit the site. Remember to respect the Author & Copyright.

Part of the fun of using cloud and big data together is exploring and experimenting with all the tools and datasets that are now available. There are lots of public datasets, including those hosted on Google Cloud, available for exploration. You can choose your favorite environment and language to delve into those datasets to find new and interesting results. We’re excited to announce that our newest BigQuery ML competition, available on Kaggle, is open for you to show off your data analytics skills.

If you haven’t used Kaggle before, you’ll find a ready-to-use notebooks environment with a ton of community-published data and public code: more than 19,000 public datasets and 200,000 notebooks. Since we recently announced integration with Kaggle kernels and BigQuery, you can now use BigQuery right from within Kaggle and take advantage of tools like BigQuery ML, which allows you to train and serve ML models right in BigQuery. BigQuery uses standard SQL queries, so it’s easy to get started if you haven’t used it before.
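To give a feel for what "training a model with SQL" looks like, here is a minimal sketch in Python that only assembles a BigQuery ML `CREATE MODEL` statement as a string. It does not call BigQuery. The dataset, table, and column names are hypothetical placeholders, not the competition's actual schema, and `build_create_model_sql` is a helper invented for this illustration.

```python
def build_create_model_sql(model_name, source_table, label_col, feature_cols,
                           model_type="linear_reg"):
    """Return a BigQuery ML CREATE MODEL statement as a string.

    All names passed in are supplied by the caller; nothing is validated
    against a real BigQuery project.
    """
    # The label column is selected alongside the features and named in OPTIONS.
    select_list = ",\n  ".join([*feature_cols, label_col])
    return (
        f"CREATE OR REPLACE MODEL `{model_name}`\n"
        f"OPTIONS(model_type='{model_type}', input_label_cols=['{label_col}']) AS\n"
        f"SELECT\n  {select_list}\n"
        f"FROM `{source_table}`"
    )


# Hypothetical names, for illustration only.
sql = build_create_model_sql(
    model_name="my_dataset.congestion_model",
    source_table="my_dataset.intersection_train",
    label_col="total_time_stopped",
    feature_cols=["city", "hour", "month", "is_weekend", "entry_heading"],
)
print(sql)
```

The resulting statement could then be pasted into the BigQuery console or a Kaggle notebook cell once the placeholder names are swapped for real ones.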

Green means go: getting started

Now, on to the competition details. You’ll find a dataset from Geotab as your starting point. We’re excited to partner with Geotab, which provides a variety of aggregate datasets gathered from commercial vehicle telematics devices. The dataset for this competition includes aggregate stopped vehicle information and intersection wait times, gathered from trip logging metrics from vehicles like semi-trucks. The data have been grouped by intersection, month, hour of day, direction driven through the intersection, and whether the day was on a weekend or not. Your task is to predict congestion based on an aggregate measure of stopping distance and waiting times at intersections in four major U.S. cities: Atlanta, Boston, Chicago and Philadelphia.

Geotab’s data serves as an intriguing basis for this challenge. “Cities have a wealth of data organically available. We’re excited about this competition because it truly puts a data scientist’s creativity to the test to pull in data from interesting external resources available to them,” says Mike Branch, vice president of data and analytics at Geotab. “This competition truly shows the democratization of AI to ease the accessibility of machine learning by positioning the power of ML alongside familiar SQL syntax. We hope that this inspires cities to see the art of the possible through data that is readily available to them to help drive insight into congestion.”

Take a look at the competition page for more information on how to make a submission.

Thinking big to win

But, you may be asking, how do I win this competition? We’d encourage you to think beyond the raw dataset provided to see what other elements can steer you toward success. There are lots of other external public datasets that may spark your imagination. What happens if you join weather or construction data? How about open maps datasets? What other Geotab datasets are available? Is there public city data that you can mine?

You can also find some useful resources here as you’re getting started:

Along with bragging rights, you can win GCP coupon awards with your submission in two categories: BigQuery ML models built in SQL or BigQuery ML models built in TensorFlow. To get started with BigQuery if you’re not using it already, try the BigQuery sandbox option for 10GB of free storage, 1 terabyte per month of query processing, and 10GB of BigQuery ML model creation queries.

You can get all the submission file guidelines and deadlines on the competition page, plus a discussion forum and leaderboard. You’ve got till the end of the year to submit your idea. Have fun, and make sure to show off your skills when you’re done!

The content below is taken from the original ( How Microsoft Uses Machine Learning to Improve Windows 10 Update Experience), to continue reading please visit the site. Remember to respect the Author & Copyright.

Microsoft started using machine learning (ML) to manage the rollout of Windows 10 feature updates with the Windows 10 April 2018 Update (version 1803). In a new blog post by Microsoft’s Archana Ramesh and Michael Stephenson, both data scientists for Microsoft Cloud and AI, the company outlines improvements made since then.

Microsoft has been having a tough time recently with the quality of cumulative updates (CU) and feature updates for Windows 10. While the tech media tends to blow things out of proportion sometimes, I think it’s fair to say that quality has taken a knock since internal testers were dismissed in favor of the Windows Insider Program. Biannual feature updates haven’t been without their issues either. But because of the diversity of the Windows ecosystem, regardless of how much testing is done, there is always the potential for issues when making changes to a complex piece of software like Windows.

But if you are a large or medium-sized organization that manages updates using Windows Server Update Services (WSUS), Microsoft System Center Configuration Manager (SCCM), or another product, you can do your own testing and don’t need to rely on Microsoft’s automated rollout. Smaller organizations can use Windows Update for Business, which is a series of Group Policy and Mobile Device Management (MDM) settings that give somewhat limited control over when CUs and feature updates are installed. Individuals and businesses without IT support rely on Microsoft to determine when feature updates should be installed, although ‘seekers’, i.e. people who go into Windows Update and click Check for updates, might be offered feature updates before they are designated OK for the device. If Microsoft finds any serious problems related to hardware or software, blocks (safeguard holds) are placed on the update for affected devices until the issues have been resolved.

Microsoft used ML to evaluate 35 areas of PC health for determining whether devices were ready for the May 2019 Update. It says that PCs designated to be updated by ML have a much better update experience, with half the number of system-initiated rollbacks and kernel mode crashes, and five times fewer driver issues. Microsoft designed an ML model that is dynamically trained on the most recent set of PCs that have been updated, and that can tell the difference between PCs having a good and bad update experience. ML can identify issues that let Microsoft create safeguard holds to prevent updates being installed on PCs that might have a poor update experience. And ML predicts and nominates devices that should have a smooth update experience. As more PCs receive a feature update, the ML model learns from the most recent dataset. And as Microsoft resolves identified issues, PCs that might have had a poor update experience are offered the update.

Training data for the model is collected from the latest set of PCs on the feature update, and their configuration (hardware, apps, drivers, etc.) at the time the update was installed. A binary label is also assigned to indicate whether the device had a good update experience, based on indicators like whether there was a system-initiated uninstall and the reliability score after the update. Azure Databricks is used to build the model and ensure only high-quality data is used to train it. Anomaly detection is used to identify when a feature, or a combination of two or more features, causes a higher failure rate than is present in the rest of the data. Along with lab tests, feedback, and support calls, this data is used to establish safeguard holds.
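As a rough illustration of the labeling and anomaly-detection idea described above, the sketch below assigns a binary label per device and then flags individual features whose failure rate is well above the rate in the rest of the data. This is not Microsoft's method: the reliability threshold, the `ratio` cutoff, and the function names are all assumptions made for the example, and it looks at single features only, not combinations.

```python
from collections import defaultdict


def label_good_update(had_system_uninstall, reliability_score, min_reliability=0.8):
    """Return 1 for a good update experience, 0 otherwise.

    The 0.8 threshold is a hypothetical value chosen for this sketch.
    """
    return int(not had_system_uninstall and reliability_score >= min_reliability)


def flag_risky_features(devices, min_devices=2, ratio=2.0):
    """Flag features whose failure rate is at least `ratio` times the rest's.

    `devices` is a list of (features, label) pairs, where `features` is a set
    of strings and `label` is 1 (good update) or 0 (bad update).
    Returns {feature: (rate_with_feature, rate_in_rest_of_data)}.
    """
    total = len(devices)
    total_failures = sum(1 for _, label in devices if label == 0)
    seen = defaultdict(int)
    failed = defaultdict(int)
    for features, label in devices:
        for feature in features:
            seen[feature] += 1
            if label == 0:
                failed[feature] += 1

    flagged = {}
    for feature, count in seen.items():
        rest = total - count
        if count < min_devices or rest == 0:
            continue  # too little data to compare against
        rate_with = failed[feature] / count
        rate_rest = (total_failures - failed[feature]) / rest
        if rate_with > 0 and rate_with >= ratio * rate_rest:
            flagged[feature] = (rate_with, rate_rest)
    return flagged


# Toy data: every device with "driver_a" had a bad update.
devices = [
    ({"driver_a", "app_x"}, 0),
    ({"driver_a"}, 0),
    ({"driver_a"}, 0),
    ({"driver_b", "app_x"}, 1),
    ({"driver_b"}, 1),
    ({"driver_b"}, 0),
]
flagged = flag_risky_features(devices)
```

In this toy dataset only `driver_a` is flagged, which is the kind of signal that could feed into a decision about a safeguard hold.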

Because the Windows ecosystem is so diverse and the ML model is refreshed daily, Microsoft needs to make sure it has data that represents similar devices to those that still must be updated. A technique called saturation is used to determine how many of the diverse systems are currently represented in the dataset. This helps Microsoft understand how a feature update has penetrated the Windows ecosystem. ML is only used to offer updates to systems if Microsoft has seen a fair number of similar configurations in its data. At the end of the day, Insiders and ‘seekers’ are important so that enough relevant data can be gathered to inform the ML model on the widest variety of device configurations in the ecosystem.
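One simple way to picture saturation is as a coverage ratio: of all the distinct device configurations in the ecosystem, how many already appear among the PCs that have been updated? The sketch below assumes a configuration is a plain tuple and uses exact matches in place of "similar" configurations; the `min_seen` thresholds and function names are hypothetical, and Microsoft's actual definition may differ.

```python
from collections import Counter


def saturation(ecosystem_configs, updated_configs, min_seen=1):
    """Fraction of distinct ecosystem configs seen at least `min_seen` times
    among already-updated PCs."""
    seen = Counter(updated_configs)
    distinct = set(ecosystem_configs)
    if not distinct:
        return 0.0
    covered = sum(1 for config in distinct if seen[config] >= min_seen)
    return covered / len(distinct)


def eligible_for_ml_offer(config, updated_configs, min_seen=3):
    """Only nominate a device if enough matching configs have been observed."""
    return Counter(updated_configs)[config] >= min_seen


# Toy configurations as (cpu, gpu_driver) tuples; all values are made up.
A, B, C, D = ("cpu1", "gpu1"), ("cpu1", "gpu2"), ("cpu2", "gpu1"), ("cpu2", "gpu2")
ecosystem = [A, A, B, C, D]
updated = [A, A, A, B]

coverage = saturation(ecosystem, updated)                  # 2 of 4 configs seen
strict_coverage = saturation(ecosystem, updated, min_seen=3)  # only A seen 3 times
```

Here half of the distinct configurations are represented at all, but only one has been seen often enough to pass the stricter threshold, which is why data from Insiders and seekers on rarer configurations matters.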

How Microsoft Uses Machine Learning to Improve Windows 10 Update Experience (Image Credit: Microsoft)

It’s still early days but ML does seem to be improving the rate of good update experiences. Whether we like Microsoft’s Software-as-a-Service approach to Windows 10 and lack of internal quality testers is another question. Organizations with the right resources should always pilot new Windows 10 feature updates on a variety of different device configurations to establish whether they update smoothly and whether any functional issues after the upgrade could affect line-of-business operations. Machine learning can’t replace real-world testing. At least not yet.

For a more technical deep dive into how ML is used for Windows 10 feature updates, see Microsoft’s blog post here.

The post How Microsoft Uses Machine Learning to Improve Windows 10 Update Experience appeared first on Petri.

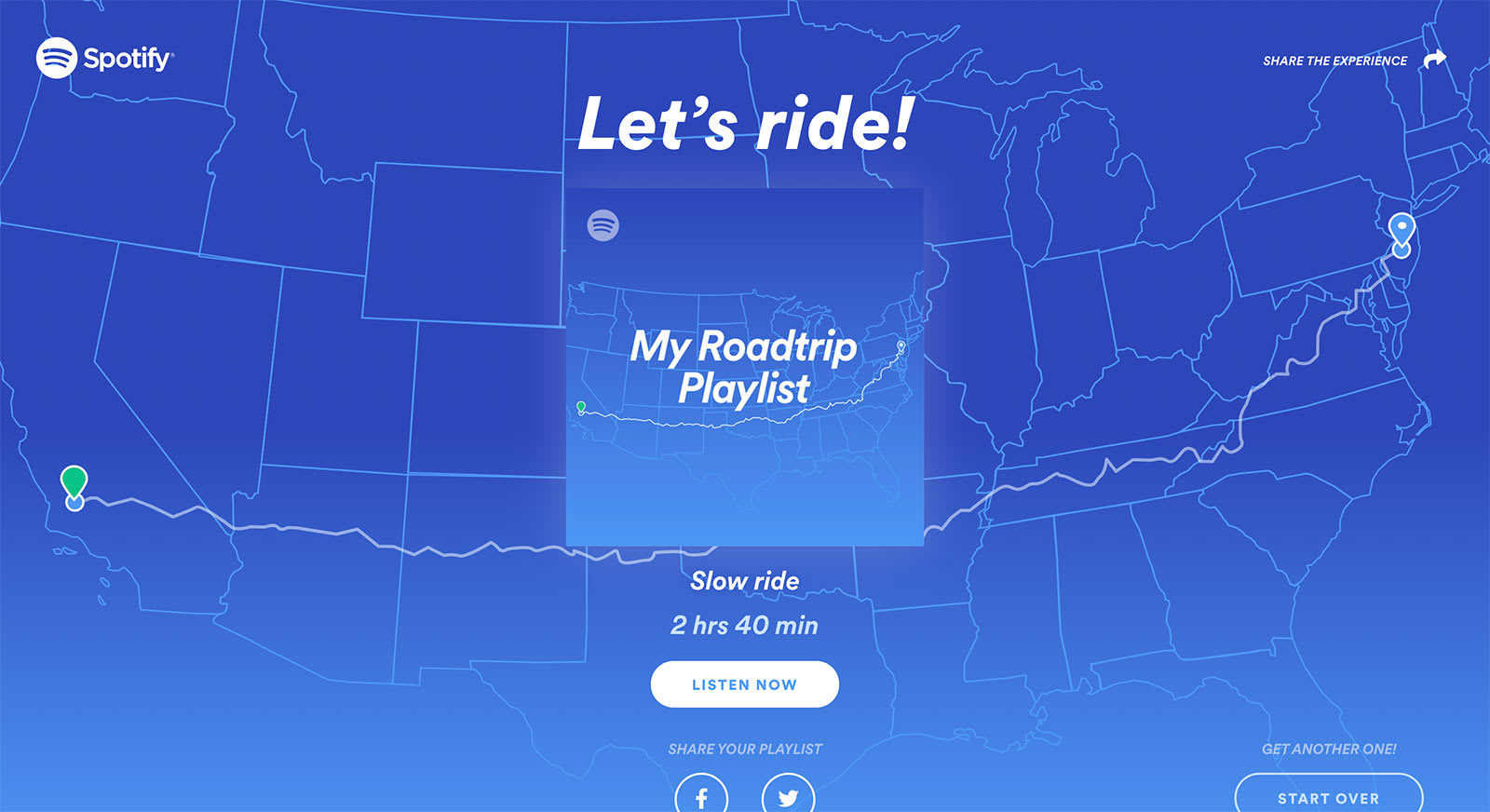

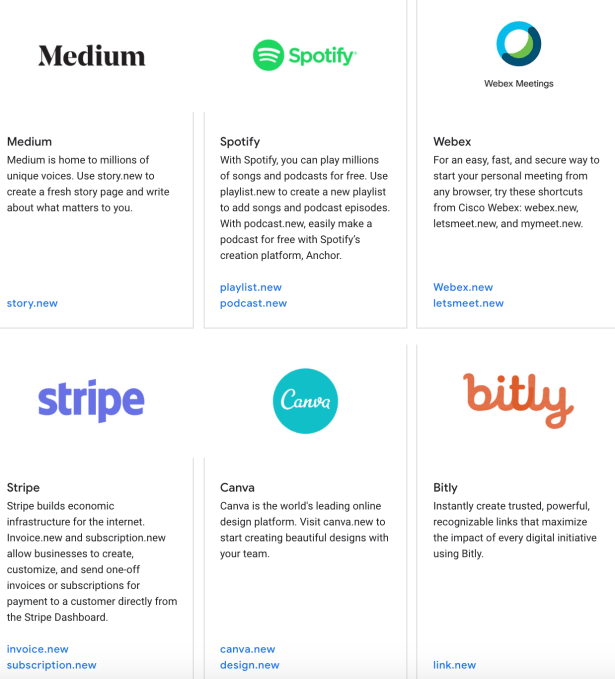

The content below is taken from the original ( Google brings its ‘.new’ domains to the rest of the web, including to Spotify, Microsoft & others), to continue reading please visit the site. Remember to respect the Author & Copyright.

A year ago, Google rolled out “.new” links that worked like shortcuts to instantly create new Google documents. For example, you could type “doc.new” (without the quotes) to create a new Google Doc or “sheet.new” to create a new spreadsheet. Today, Google announced it is bringing the .new shortcuts to the rest of the web. Now, any company or organization can register their own .new domain to generate a .new shortcut that works with their own web app. Several have already done so, including Microsoft, which now has “word.new” to start a new Word document, and Spotify, which has “playlist.new” to start adding songs to a new playlist on its streaming app.

The domains are designed to get users straight to the action. That is, instead of having to visit a service, sign in, then find the right menu or function, they could just start creating.

However, some of today’s new domains aren’t quite as seamless as Google’s own. Because most Google Docs users are already signed in to Google, it’s easy to jump right to creating a new online document.

But other services aren’t used as often. That means Medium’s “story.new” doesn’t let you immediately start writing your blog post, unless you’re already signed in to Medium. If not, it drops you on a “Join Medium” sign-up page instead. This doesn’t necessarily make Medium any easier to use. A better approach would have let the user just start writing, saving their text under a temporary account, and then prompted them to join Medium on exit or on clicking “publish.”

Meanwhile, Microsoft’s word.new, a direct rival to Google’s own doc.new, isn’t yet resolving. (Google tells us some of the shortcuts aren’t yet ready, but should be soon.)

Other participants include sell.new (eBay), canva.new and design.new (Canva), reservation.new (OpenTable), webex.new and letsmeet.new (Cisco WebEx meeting room creation), link.new (Bit.ly), invoice.new (Stripe’s dashboard), api.new (launch new Node.js API endpoints from RunKit), Coda.new (Coda), music.new (create personalized song artwork for OVO Sound artist releases and more), and repo.new (GitHub).

Spotify also picked up podcast.new, which takes you to Anchor, in addition to playlist.new.

Considering the lineup, it’s clear that there’s not as much of a gold rush for these action-based domains as there is for other top-level domains. There are some fun use cases, like Spotify’s, and practical ones like word.new, but others are less compelling because they drop you on sign-up/sign-in pages, as they’re not everyday services.

And some are just odd land grabs. Take OVO Sound, the record label founded by Drake, which snagged “music.new”, a domain that you would think would go to a larger streaming service. (In fact, it’s somewhat surprising that Google’s own music service, YouTube Music, didn’t grab that one for itself.)

Google says any company can register these domains, all of which are secured over HTTPS connections like .app, .page, and .dev domains are.

Through January 14, 2020, trademark owners can register their trademarked .new domains. Starting December 2, 2019, anyone can apply for a .new domain during the Limited Registration Period.

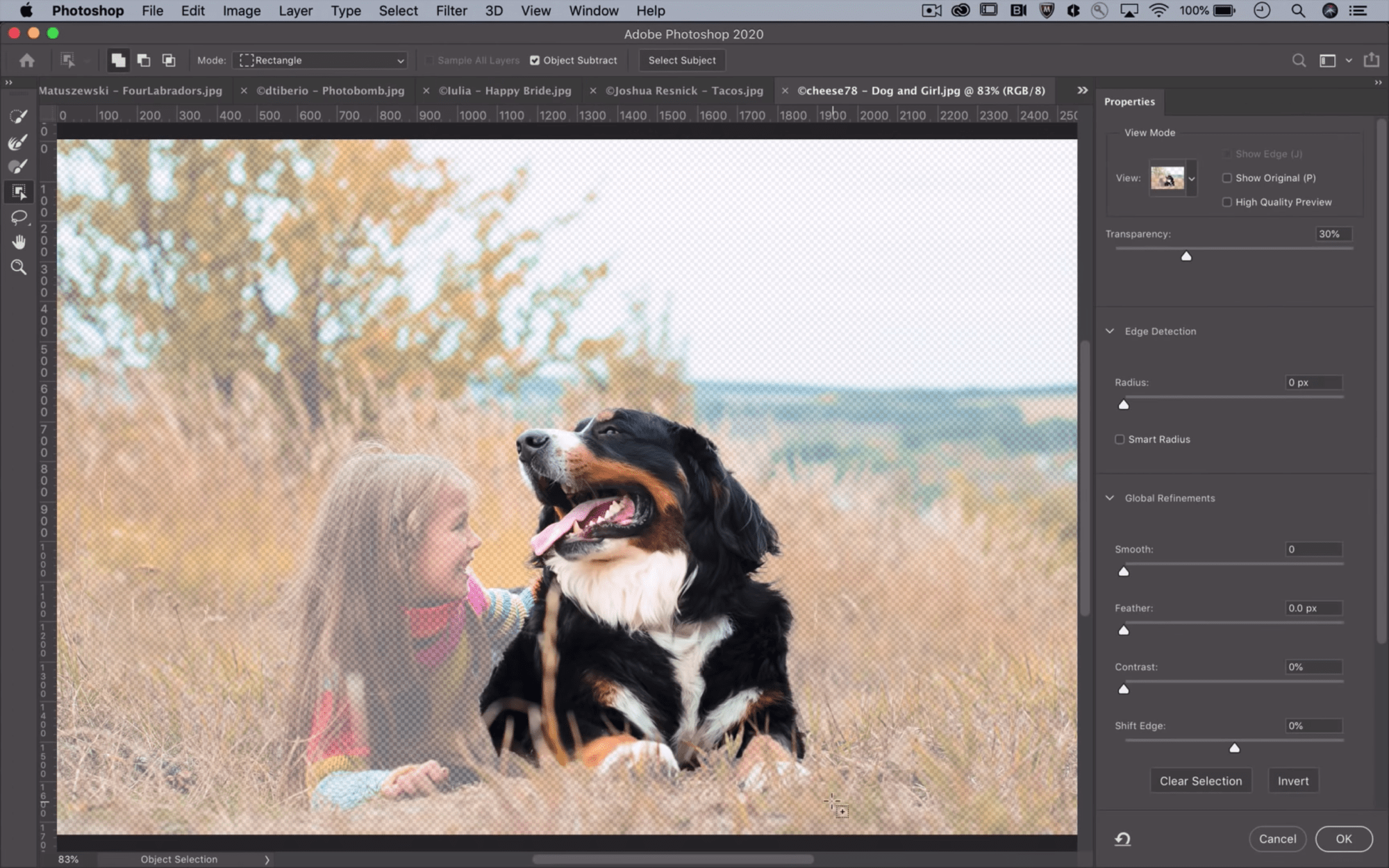

The content below is taken from the original ( Photoshop’s latest AI-powered tool makes quick work of selections), to continue reading please visit the site. Remember to respect the Author & Copyright.

The content below is taken from the original ( Microsoft welcomes ancient Project app to the 365 family, meaning bleak future for on-prem), to continue reading please visit the site. Remember to respect the Author & Copyright.

Microsoft has rolled out the cloud-based version of its venerable project management software, Project, along with a new basic subscription option.…