HPE lab boffins have finally, after years of work, built a proof-of-concept prototype of their fairytale memory-focused computer, The Machine. The achievement is bittersweet.

A decision has been made within Hewlett Packard Enterprise management to step back from manufacturing and selling the much-hyped fabled device as a complete system. Announced in mid-2014 as an alleged revolution in modern computing, The Machine will not emerge from the labs as an official product any time soon. Instead, it will be cannibalized and its technologies sprinkled into HPE products over the remainder of the decade and possibly beyond.

The prototype Machine nodes are, as we described earlier, powered by Linux-running system-on-chips that have their own private DRAM and interact with 2 to 4TB of on-board persistent memory via a silicon photonics switch and fabric. The processors can also access persistent memory on other nodes across the photonics fabric, thus forming a giant pool of byte-addressable storage. The CPU architecture was not disclosed, but it could be 64-bit ARMv8-A or Intel x86.

The Machine nodes are about 12 inches deeper than a standard rack-mounted box: they extend six inches beyond the rear of the rack cabinet and project six inches in front of it. They are mounted vertically, stand about 4U high, and sit on a suitably sized shelf.

However, don’t get excited. This may be the last time we see them.

We do know that the exotic hardware’s persistent memory subsystem will survive in some form. Here are HPE’s plans for this technology:

- Right now: ProLiant boxes with persistent memory for applications to use, built from a mix of DRAM and flash.

- 2016 – 2017: Improved DRAM-based persistent memory.

- 2018 – 2019: True non-volatile memory (NVM) for software to use as slow-but-copious RAM.

- 2020 and onwards: NVM technology used across multiple product categories.

Roadmap … We snapped this slide at an HPE presentation on Monday about the fate of The Machine

The fourth point reflects the fact that The Machine project is no longer interested in delivering a product called The Machine for a long, long while, if ever. Instead Machine concepts will seep into existing and new systems, we’re told.

You can’t touch this (ever) … The Machine’s long and thin prototype node design

According to a slide we glimpsed at an HPE Discover briefing in London on Monday:

> The Machine is a dramatic architecture change that will continue into the next decade.
>
> [The] goal is to demonstrate progress, not develop products. At each stage, for a compelling use case, we will be able to demonstrate that the combination of SoC, memory semantic photonic fabrics and massive memristor-based NVM pools with corresponding changes to operating systems will put us on a new S-Curve.
>
> HP Labs and Business Units will collaborate to deliver differentiating Machine value into existing architectures as well as disruptive architectures.

HPE believes today’s computers are buckling, or soon will buckle, under the massive amounts of data we’re all generating and consuming, and are struggling with memory limitations. A new architecture is needed, we’re told: a memory-driven architecture that will reignite growth in computing power.

Hence HPE’s obsession with a new computer architecture that has large amounts of reasonably fast persistent memory as the centerpiece, providing tracts of storage for applications to access directly at high speed to manipulate data. We’re assured this technology is going to trickle into shipping boxes as the IT giant’s product teams embrace Machine concepts, such as silicon-photonics-accessed persistent memory fabrics.

We note that HPE’s Synergy composable compute and storage product has been engineered to take a silicon photonics backplane.

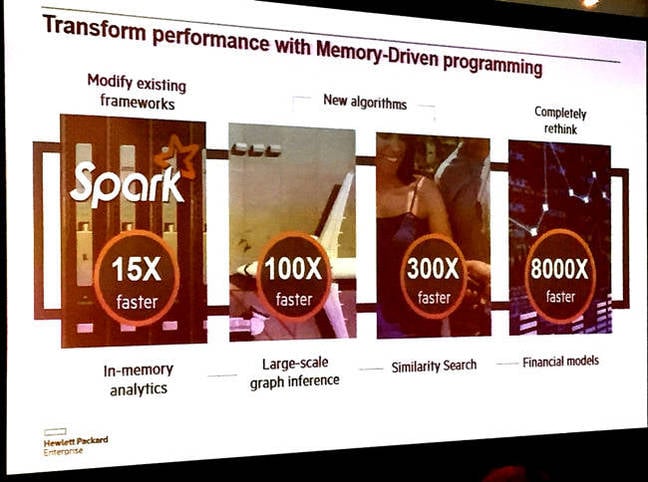

HPE is still enthused about the performance benefits of memory-driven computing, showing us this slide:

Machine technologies performance gains … The 8,000X performance gain for financial apps at the far right of this slide is obviously theoretical

We were told that HPE and Western Digital have a strong continuing relationship, with WD-owned SanDisk developing Resistive RAM (ReRAM) for use in HPE’s memory-driven computing. We believe this particular technology will arrive as ReRAM non-volatile DIMMs in HPE kit some time in 2018 to 2019.

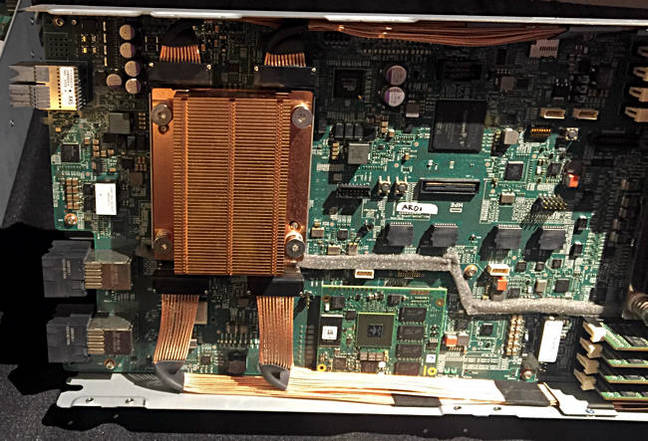

DIMM view … A Machine node prototype’s fabric-attached memory

HPE said its partnership with SK Hynix to develop memristor storage ran into funding and directional problems. SK Hynix’s prime business is making traditional RAM and flash chips, and that business took the bulk of its investment budget, leaving insufficient cash to develop memristor technology aggressively – technology that would, in our view, threaten its DRAM revenues.

Western Digital’s spokespeople would not reveal any details on WD’s ReRAM developments, beyond saying they were happy with its progress and that it had good process scalability potential which, they delicately hinted, may not be true for alternative persistent memory technologies such as XPoint. We were assured that WD’s engineers have good 3D NAND skills and that the organisation has a lot of foundry capacity – both necessary to develop and build a viable persistent memory product.

Is memristor development dead? Memristor technology was mentioned on a slide or two in HPE’s Machine briefing, but the SK Hynix partnership has faded and WD is focussed on ReRAM, not memristors. If we’re being very charitable, we’d say memristors are on the back burner.

A Machine node prototype’s system-on-chip

HPE is relying on software developers, the open-source community among them, to create applications optimized for memory-driven computing – stuff like the SAP HANA in-memory database, Hadoop-style software, and real-time big data analytics.

For these users of massive amounts of unstructured data, the idea of having it all in DRAM at once is untenable, but having it all in cheaper, photonics-fabric-accessed persistent memory is the dream HPE is clinging to.

The Machine product is all but dead, but The Machine’s component technology developments are, HPE tells us, vibrant, ongoing, energetically pursued, and will be inserted into shipping gear. Eventually. Soon. Fingers crossed. ®
