The content below is taken from the original ( Retire your tech debt: Move vSphere 5.5+ to Google Cloud VMware Engine), to continue reading please visit the site. Remember to respect the Author & Copyright.

It can happen so easily. You get a little behind on your payments. Then you start falling farther and farther behind until it becomes almost impossible to dig yourself out of debt. Tech debt, that is.

IT incurs a lot of tech debt when it comes to keeping up infrastructure; most IT departments are already running as lean as they possibly can. Many VMware shops are in a particularly tough spot, especially if they’re still running on vSphere 5.5. If that describes you, it’s time to ask yourself how you intend to get out of this tech debt. General support for vSphere 5.5 ended back in September 2018, and technical guidance ended one year later. General support for 6.0 ended in March 2020, support for 6.5 ends November 15 of this year, and even the end of general support for vSphere 6.7 is only a couple of years away (November 2022)! If you’re still running vSphere 5.5, moving to vSphere 7.0 is the right thing to do.

But doing so is hard if you’ve fallen into a deep tech-debt hole. Traditionally, it means moving all your outdated vSphere systems through each interim release until everything is on the latest version. That involves upgrading hardware, software, and licenses, as well as all the additional work that goes along with the upgrades. Then, as soon as you’re done, the next upgrade cycle is already upon you. To make the task even more daunting, VMware HCX, the company’s application mobility service, will also stop supporting 5.5 soon, which further complicates migration.

If this paints an unsightly picture, don’t despair. You have the opportunity, right now, to easily retire your technical debt and be debt-free from here on out by migrating to Google Cloud VMware Engine. And you can migrate before you have to upgrade to the next vSphere release just to get migration support. Not only will you still be able to migrate to vSphere 7 using HCX, but even better, you don’t have to do the digging yourself.

The cloud breaks the cycle of debt

If the effort and resources required to move were too steep a price before, the move is now a viable option with Google Cloud VMware Engine. With cloud-based infrastructure, you can not only migrate to the latest release of vSphere, but you can also take your workload (lock, stock, and barrel) out of your data center and put it into Google Cloud. Moving to Google Cloud VMware Engine makes the migration task fast and simple. Never again will you have to deal with spreadsheets to track how many watts of cooling you need for your data center, buy additional equipment, or manage upgrades.

Migrating to the cloud is also the first step toward getting out of the business of managing your data center and into embracing an OpEx subscription model. And you can begin moving workloads to the cloud in increments, without having to worry about all the nuances — it’s all done for you.

Work in a familiar environment and expand your toolset

One of the biggest benefits of Google Cloud VMware Engine is that it offers the same, familiar VMware experience you have now. All the applications running on vSphere 5.5 can immediately run on a private cloud in Google Cloud VMware Engine with no changes. You’ll now be running on the latest release of vSphere 7, and when VMware releases patches, updates, and upgrades, Google Cloud keeps the infrastructure up to date for you. And as a VMware administrator, you can use the same tools that you’re familiar with on-premises.

Migration doesn’t have to be a long, arduous process

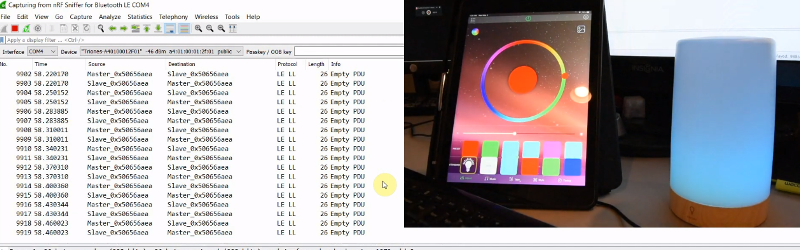

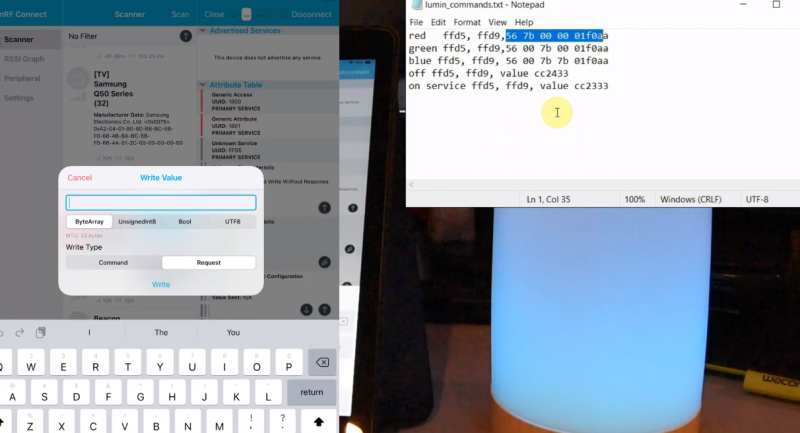

Google Cloud VMware Engine allows you to leverage your existing virtualized infrastructure to make migration fast and easy. Use familiar VMware tools to migrate your on-premises vSphere applications to vSphere in your own private cloud while maintaining continuity with all your existing tools, policies, and processes. It takes only a few clicks (see our demo video). Make sure you have your prerequisites, enable the Google Cloud VMware Engine API, and follow these 10 steps:

-

Enable the VMware Engine node quota and assign at least three nodes to create your private cloud.

-

Set your roles and permissions.

-

Access the Google Cloud VMware Engine portal.

-

Click ‘Create a private cloud’. This is fast — only about 30 minutes.

-

Select the number of nodes (a minimum of three).

-

Enter a CIDR range for the VMware management network.

-

Enter a CIDR range for the HCX deployment network.

-

Review your settings.

-

Click Create.

-

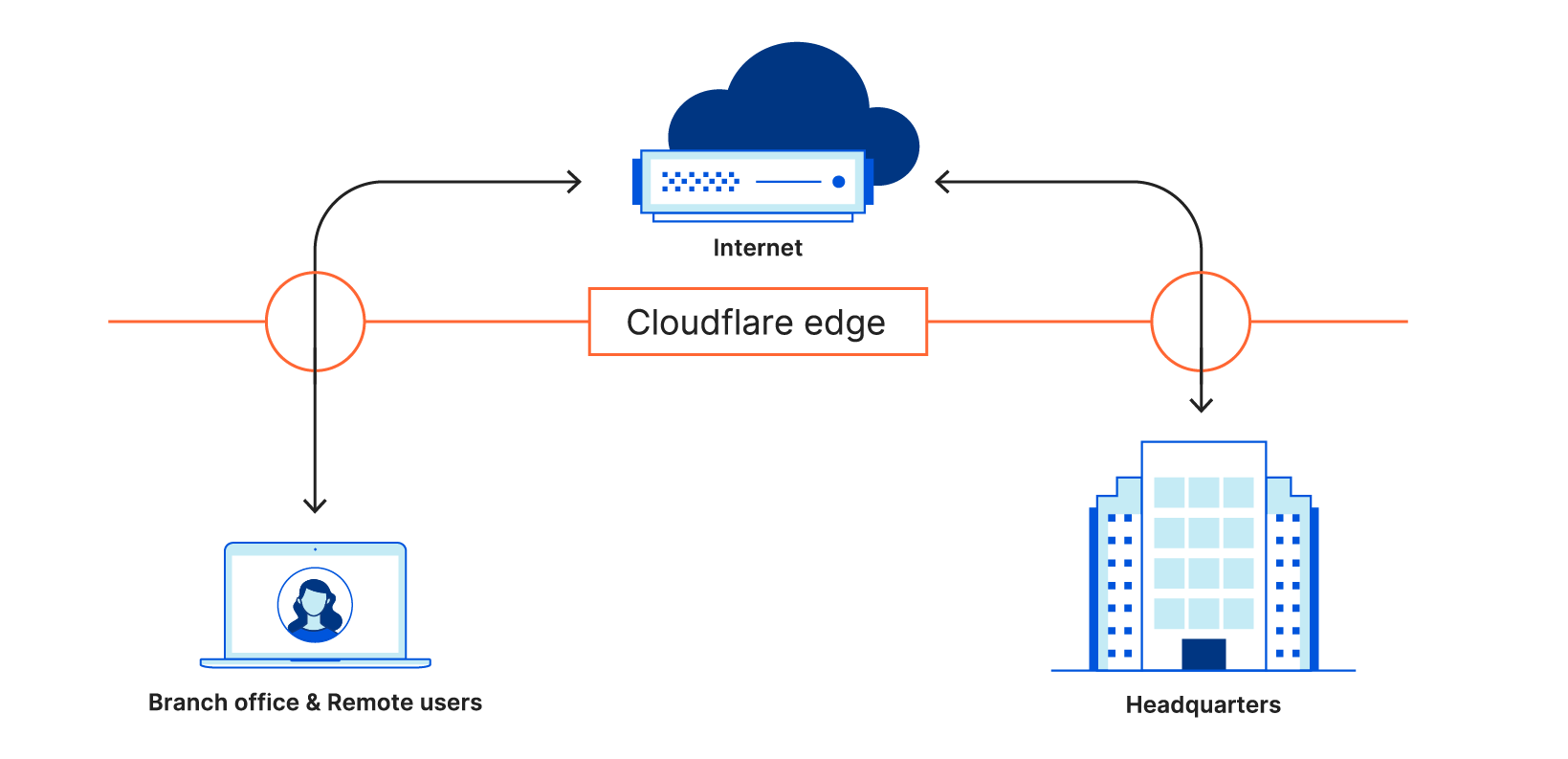

Connect an on-prem network to your VMware Engine private cloud or connect using a point-to-site VPN connection. Google Cloud VMware Engine supports multi-region networking with VPC global routing, which allows VPC subnets to be deployed in any region worldwide, greatly simplifying networking.
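Before entering the node count and the two CIDR ranges from the steps above, it can help to sanity-check them offline. The sketch below is not part of the VMware Engine tooling; it uses only Python's standard `ipaddress` module. The function name `check_private_cloud_plan`, the example ranges, and the rule that the management and HCX ranges must not overlap each other or your on-prem networks are all assumptions made for illustration, so confirm the actual sizing and overlap requirements in the service documentation.

```python
import ipaddress

# The article states a minimum of three nodes per private cloud.
MIN_NODES = 3


def check_private_cloud_plan(node_count, management_cidr, hcx_cidr, on_prem_cidrs=()):
    """Return a list of problems found in a planned configuration.

    Hypothetical helper: an empty list means no problem was detected
    under the assumptions described in the text above.
    """
    problems = []
    if node_count < MIN_NODES:
        problems.append(f"need at least {MIN_NODES} nodes, got {node_count}")

    # Parse both planned ranges; strict=True rejects CIDRs with host bits set.
    networks = {}
    for label, cidr in (("management", management_cidr), ("hcx", hcx_cidr)):
        try:
            networks[label] = ipaddress.IPv4Network(cidr, strict=True)
        except ValueError as exc:
            problems.append(f"{label} CIDR {cidr!r} is invalid: {exc}")

    # Assumption: the two ranges should not overlap each other.
    if len(networks) == 2 and networks["management"].overlaps(networks["hcx"]):
        problems.append("management and HCX ranges overlap")

    # Assumption: neither range should overlap an existing on-prem network.
    for cidr in on_prem_cidrs:
        on_prem = ipaddress.IPv4Network(cidr, strict=False)
        for label, net in networks.items():
            if net.overlaps(on_prem):
                problems.append(f"{label} range overlaps on-prem network {cidr}")

    return problems


if __name__ == "__main__":
    # Example values only; substitute the ranges you actually plan to use.
    for problem in check_private_cloud_plan(
        node_count=3,
        management_cidr="192.168.0.0/22",
        hcx_cidr="192.168.4.0/26",
        on_prem_cidrs=["10.0.0.0/16"],
    ):
        print(problem)
```

An empty result means the plan passed these checks; anything printed is worth resolving before you click Create, since the checks cost nothing to rerun.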

When you use VMware HCX to migrate VMs from your on-premises environment to Google Cloud VMware Engine, VMware HCX abstracts vSphere resources running both on-prem and in the cloud and presents them to applications as one continuous resource to create a hybrid infrastructure.

By partnering with Google Cloud, you can erase your tech debt and get out of the time-consuming, resource-draining business of data center management. Then, once your VMware-based workloads are running on Google Cloud VMware Engine, you can start modernizing your applications with Google Cloud services, including AI/ML, low-cost storage, and disaster recovery solutions. Check out the variety of pricing options for the service, from pre-pay with discounts up to 50% to pay-as-you-go and annual commitments.

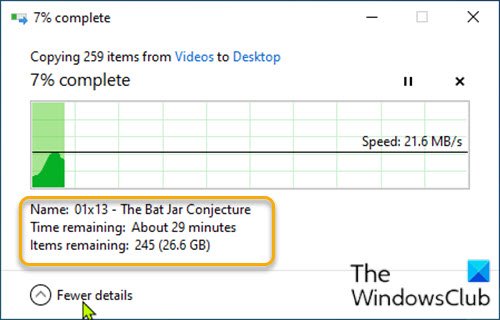
